Evaluation of the Open Operating Grant Program – Final Report 2012

Acknowledgements

Special thanks to all the participants in this evaluation – survey respondents, interview and case study participants, current and former members of the Subcommittee on Performance Measurement, CIHR and Institute management, OOGP management, Finance and ITAMS Branch management, and members of the Evaluation Working Group. Additional thanks to the National Research Council of Canada for the cover photo.

The OOGP Evaluation Study Team:

David Peckham, MSc; Kwadwo (Nana) Bosompra, PhD; Christopher Manuel, M.Ed.

Canadian Institutes of Health Research

160 Elgin Street, 9th Floor

Address Locator 4809A

Ottawa, Ontario K1A 0W9

Canada

www.cihr-irsc.gc.ca

Table of Contents

- Executive Summary

- Evaluation Purpose, Key Findings and Conclusions

- Knowledge Creation

- Program Design and Delivery

- Knowledge Translation

- Capacity Development

- Program Relevance

- Program Background

- Evaluation Methodology

- Appendix

- References

Executive Summary

This evaluation of the Open Operating Grant Program (OOGP) takes place at a time when CIHR is proposing changes to its open suite of programs and enhancements to the peer review system. The evaluation therefore focuses on both the program performance of the existing OOGP and findings that can feed into the process of reforming CIHR's open programs.

The OOGP as it is currently designed has met its key program objectives. Findings from this evaluation demonstrate how the program has contributed to the creation and dissemination of health-related knowledge and supported high quality research.

The health research context in which the OOGP operates has however evolved since the program's inception, leading to questions about how well the current design funds excellence across the breadth of CIHR's mandate. Evidence from this evaluation shows that there are opportunities to enhance both program design and cost-effective delivery. Enacting these changes should ensure that CIHR's open suite of programs is well-equipped to meet current and future needs.

Key Findings

- The OOGP has been both attracting and funding health research excellence since 2000. The scientific impact of publications produced by OOGP-supported researchers provides evidence of how the program has outperformed benchmark comparators; case study illustrations of high impact projects demonstrate the longer-term outcomes of this funding.

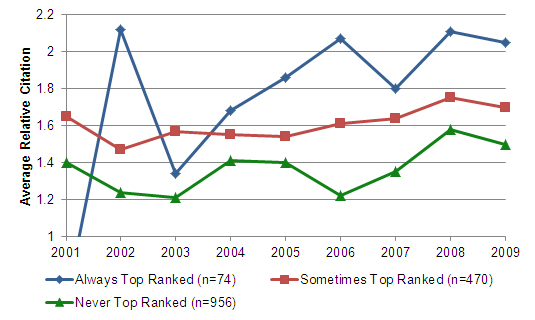

- The current system of peer review is able to select for excellence, both in terms of who receives funding, and also within committee rankings; researchers who are consistently top ranked in peer review have higher scientific impact scores.

- The program has made a significant contribution to the Canadian health research enterprise through support provided to researchers and trainees on OOGP grants.

- The OOGP is being delivered efficiently; costs per application are in line with the limited available benchmarks from other research funders. Satisfaction with program delivery is also generally high among applicants. However, data indicates that peer reviewers have a heavy workload, particularly those from the biomedical research community.

- Exploratory analysis seems to suggest that systems using independent review could result in similar outcomes to peer review committee discussions.

- The time taken by researchers to complete an OOGP application is in line with benchmarks. However, the program's decreasing success rate results in four in five applications not being funded. Streamlining the amount of information to be submitted during the application process would likely reduce the burden on applicants.

- There is some evidence to suggest that the OOGP is already being utilized as a 'programmatic program,' particularly in the biomedical community, based on the renewal behaviours of applicants, as well as case study findings.

- In keeping with CIHR's mandate, the OOGP funds projects from across all of the agency's health research pillars. However, as this evaluation demonstrates, there are variations in how research communities interact with the program. One example of this relates to differences in how researchers discuss and score applications; another is in renewal behaviours.

- The available evidence speaks to the continued need for the OOGP and the program’s alignment with the federal government and CIHR’s priorities and with federal roles and responsibilities.

Recommendations

Evidence from the evaluation strongly confirms that broad open funding is a valid and rigorous way of supporting research and that the OOGP engenders research excellence and should therefore be continued. The following recommendations are made to further enhance program design and cost-effective delivery:

- Ensure that future open program designs utilize peer reviewer and applicant time as efficiently as possible; for example, in the design of the peer review system and the amount of application information required to be submitted by applicants.

- Ensure that future open program designs account for the varying application, peer review and renewal behaviours of different Pillars.

- Conduct further analyses to understand fully the potential impacts of changes to the peer review system. Studies of peer review models using experimental designs would provide a strong evidence base.

- Create measures of success for future open programs, ensuring that these are defined to be relevant for CIHR's different health research communities.

Management Response

| Recommendation | Response (Agree or Disagree) | Management Action Plan | Responsibility | Timeline |
|---|---|---|---|---|
| 1. Ensure that future open program designs utilize peer reviewer and applicant time as efficiently as possible; for example, in the design of the peer review system and the amount of application information required to be submitted by applicants. | Agree | Agreed and in progress. The current exercise to reform the open programs involves completely reviewing application information requirements on the basis of needs for peer review or analytical information, with the intention of streamlining the application requirements and aligning information to the applicable criteria of a new structured peer review process. The objective is to decrease the peer review time per application. This measure of improvements in use of peer review time (per application) will be captured in the performance metrics as suggested in Recommendation #4 below. | Jane Aubin | Initial redesign of peer review and application processes will be complete by end of fiscal year 2012-2013, followed by testing and implementation by winter 2013. |
| 2. Ensure that future open program designs account for the varying application, peer review and renewal behaviours of different communities. | Agree | Agreed and in progress. One of the objectives of the open reforms is to capture excellence across different communities. Data on how excellence is assessed by different communities has been gathered and is being built into the structured review process. The open reforms are also aiming to improve accessibility, from a technical and content perspective, of future funding opportunities to all areas and modes of health research. | Jane Aubin | Initial redesign of peer review and application processes will be complete by end of fiscal year 2012-2013, followed by testing and implementation by winter 2013. |
| 3. Conduct further analyses to understand fully the potential impacts of changes to the peer review system. Studies of peer review models using experimental designs would provide a strong evidence base. | Agree | Agreed and in progress. A Research Plan is linked to the Transition and Implementation Plan of the open reforms and includes the conduct of a number of retrospective, short-term and long-term studies focusing on different aspects of peer review. While it is not certain whether comprehensive experimental designs can be used in studying peer review aspects without jeopardizing the integrity of a competition, all efforts will be made by Management in working with the Evaluation group so that studies have valid outcomes. Management's intention is to keep changes to the new peer review system in the open suite to a minimum once developed; however, ongoing research on peer review quality will be conducted and reported through the performance metrics as suggested in Recommendation #4 below. | Jane Aubin | Metrics and the Research Plan will be established by the end of fiscal year 2012-2013. The implementation of the Research Plan will be ongoing. |
| 4. Create measures of success for future open programs, ensuring these are defined to be relevant for CIHR's different health research communities. | Agree | Agreed. The development of performance metrics and a system of collection and analysis is underway as part of the Research Plan mentioned above. | Jane Aubin | Metrics and the Research Plan will be established by the end of fiscal year 2012-2013. The implementation of the Research Plan will be ongoing. |

Evaluation Purpose, Key Findings and Conclusions

Evaluation Purpose

This evaluation is designed to assess the extent to which the Open Operating Grant Program has achieved its expected outcomes in relation to its main objectives: the creation, dissemination and use of health-related knowledge, and the development and maintenance of health research capacity in all areas of health research in Canada.

The evaluation is also designed to meet CIHR's requirements to the Treasury Board Secretariat (TBS) under the 2009 Policy on Evaluation and Directive on the Evaluation Function (Footnote 1). It therefore covers specific core evaluation issues of program relevance and performance as described in the TBS policy suite (Footnote 2).

In line with TBS policy and recognized best practice in evaluation, a range of methods, involving both quantitative and qualitative evidence, was used to triangulate evaluation findings.

Key Findings

Knowledge Creation

- Researchers supported by the Open Operating Grant Program produce publications with a consistently greater scientific impact than the health research average for Canada and other OECD comparators (based on the Average of Relative Citations).

- The scientific impact of OOGP-supported research publications has significantly increased between 2001-2005 and 2006-2009 (1.44 vs. 1.54, p<0.001).

- OOGP grants result in an average of 7.6 publications per grant; the number of papers per grant has increased to 8.9 after 2004. This is within the context of an overall increase in the total number of papers produced by Canadian researchers over the last decade (Archambault, 2010).

- Data on the number of publications produced per grant is a useful measure and is used globally by research funders; however, contextual factors should always be considered:

- Publication productivity in the OOGP is associated with the value and duration of grants (p<0.001). When grant duration is controlled for, annual publication averages are similar across research pillars (aside from health systems and services). Apparent differences in publishing behaviour between research communities may therefore partly relate to grant duration.

- The average time that elapses between receiving a grant and publishing differs between research disciplines; for example, biomedical researchers publish their first paper on average two years from the start of their grant, compared with 3.2 years for health systems and services researchers. Ninety-five percent of OOGP-supported papers have been published within eight years of the grant competition date.

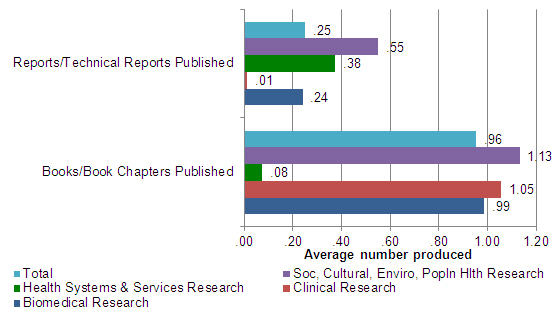

- OOGP-funded researchers produce a range of other knowledge creation outputs, including books/book chapters (average of 0.96 produced per grant) and reports (0.25 per grant). Publishing behaviour varies across research pillars; social, cultural, environmental and population health researchers (Pillar IV) produced the greatest average number of each of these outputs (1.13 books/book chapters; 0.55 reports).

Program Design and Delivery

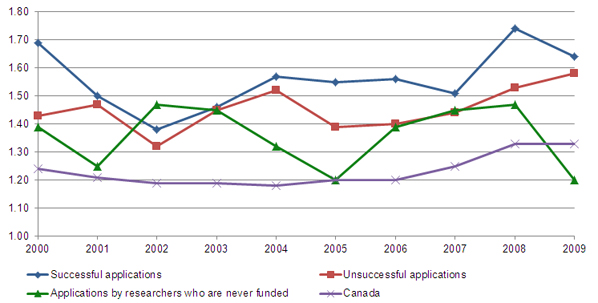

- The OOGP peer review process is successful in selecting future scientific excellence. Average of Relative Citation (ARC) scores for researchers following a successful application are higher than for unsuccessful applications and also for those applicants who have never been funded by the OOGP.

- Researchers who are always ranked in the top 10% in OOGP competitions have higher ARC scores than those who are sometimes top ranked. Researchers who are never top ranked have lower scores than the other two groups.

- ARC scores, even for unsuccessful applicants, are above the Canadian health research average, showing that the program attracts excellence.

- Researchers give generally positive satisfaction ratings for the OOGP application and peer review processes (over 50% very/somewhat satisfied on most measures).

- The quality and consistency of peer review judgments are two areas identified for improvement. Three in four (74.1%) researchers rate the quality of peer review judgments to be the most important aspect of the process; around half (47.7%) feel this is an area for future improvement.

- A cost-efficiency analysis of the OOGP was conducted to replicate a published Australian study (Graves, Barnett & Clarke, 2011). This includes the administrative costs of processing an application and monetized time costs for peer reviewers and applicants.

- The average delivery cost per OOGP application is $13,997. This compares to a per application cost for the Australian National Health and Medical Research Council (NHMRC) of $18,896 (all figures are converted to Canadian dollars).

- The OOGP cost per application includes: $1,307 for direct and indirect administrative costs; $1,812 in peer reviewer time; and $10,878 in applicant time. Administrative costs are comparable with both NHMRC ($1,022) and the US National Institutes of Health ($1,893).

- On average, an OOGP applicant takes around 169 hours to complete an application (comparable to the NHMRC benchmark). Peer reviewers spend around 75 hours per OOGP competition, including at-home reviewing, participating in meetings and travelling. An average of around 9 applications are reviewed per competition by each peer reviewer.

- CIHR is currently consulting on proposals for the redesign of its open suite of programs, including making changes to peer review processes and introducing a programmatic funding scheme. Evidence from this evaluation will inform this process.

- Two independent (at-home) OOGP reviewers select many of the same applications as a peer review committee. Seventy-five percent of OOGP applications that would have been funded based on rankings derived from the independent reviewer scores were subsequently funded at committee.

- Among those applications ranked in the top 5% at peer review committee, 95% would have been funded based on their independent reviewer rankings.

- Sensitivity and specificity analysis to assess the predictive value of independent review on final committee decisions also broadly confirms these findings.

- Exploratory bibliometric analyses show that researchers who submit applications that are ranked as successful by both independent reviewers and at peer review committee discussions have the highest subsequent ARC scores.

- There is however no significant difference in ARC scores between researchers with applications selected by independent reviewers (and not at committee) and those selected by committees (but not by independent reviewers). This may suggest that the peer review committee is not necessarily a more reliable selector of future excellence than the independent reviewers.

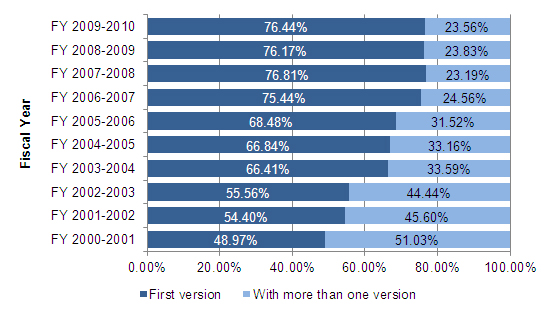

- Data on renewal of grant applications suggests that the OOGP is already being used as a 'programmatic' funding stream by some researchers, particularly those in the biomedical community; between 2000 and 2010, the annual proportion of applications that had been funded at least once previously ranged from 59% (2000) to 24% (2010). The case study findings support and illustrate this evidence of 'programmatic funding' in more detail.


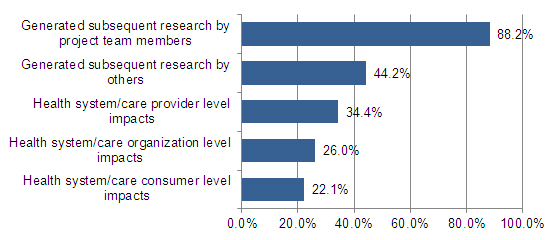
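The cost-per-application figures reported in this section can be cross-checked with simple arithmetic. The following is a minimal illustrative sketch in Python and is not part of the original cost-efficiency analysis; the dollar amounts are taken from the findings above, while the names `COST_COMPONENTS` and `cost_breakdown` are assumptions introduced here for illustration.

```python
# Cost components per OOGP application, in Canadian dollars.
# The amounts are those reported in the evaluation; the names are illustrative.
COST_COMPONENTS = {
    "administration (direct and indirect)": 1_307,
    "peer reviewer time": 1_812,
    "applicant time": 10_878,
}


def cost_breakdown(components):
    """Return the total cost and each component's share of that total."""
    total = sum(components.values())
    shares = {name: value / total for name, value in components.items()}
    return total, shares


total, shares = cost_breakdown(COST_COMPONENTS)
print(f"Total per application: ${total:,}")
for name, share in shares.items():
    print(f"  {name}: {share:.1%}")
```

The three components sum to the reported $13,997, and applicant time accounts for roughly 78% of that total, which is consistent with the finding that researcher time is the largest cost component.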

Knowledge Translation

- With regard to the commercialization aspect of knowledge translation, around one in five OOGP funded researchers state that their research resulted in a commercializable output. This includes one in ten who produced a new patent and the same proportion who had an intellectual property claim resulting from their OOGP grant.

- Case studies of high impact longer-term outcomes of commercializable research illustrate the positive effects of OOGP funding in more detail. The impacts of these programs of research were demonstrated on patients, health care providers, researchers, students, the health care system and society at large.

- The high impact value of this research often occurs over long periods. One researcher's work on sensory control built on 40 years of work; another, relating to cartilage regeneration, took over ten years from laboratory research to translation into human application.

- Case study researchers identified areas where they felt CIHR could provide more support, including: providing sufficient funds for more intensive knowledge translation strategies; navigating complicated intellectual property issues; and support in establishing technology transfer.

- OOGP funding is also contributing to CIHR's knowledge translation mandate more broadly. One in three (34.4%) OOGP-funded researchers reported that their research resulted in impacts at the health system/care provider level, and one in four (26%) reported impacts on health system/care organizations.

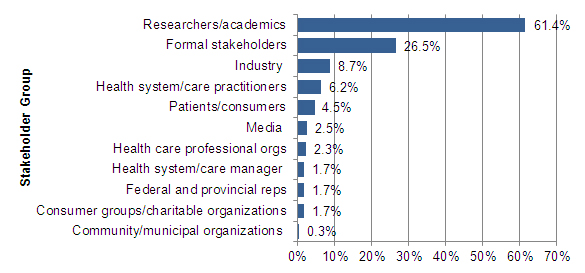

- Apart from researchers/academics and to some extent stakeholders formally listed on the grant application, other potential user groups, such as health system/care practitioners, patients or industry are not frequently involved in the conduct of OOGP-funded research.

Capacity Development

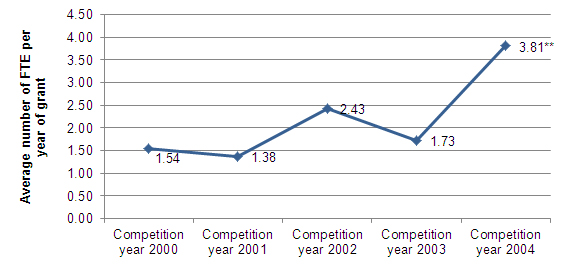

- An average of 8.61 research staff and trainees are trained on each OOGP grant. Using available data to infer the total number trained for all grants, this is estimated at 81,175 OOGP research staff and trainees between 2000 and 2010.

- The number of trainees involved in a grant and the full-time equivalent (FTE) of involvement can vary significantly between research pillars. An average of 13.62 trainees were involved in each grant for Pillar IV; however, this equates to only 4.81 FTEs. By contrast, biomedical trainees tend to be associated with only one grant: 7.93 trainees per grant and 7.65 FTEs.

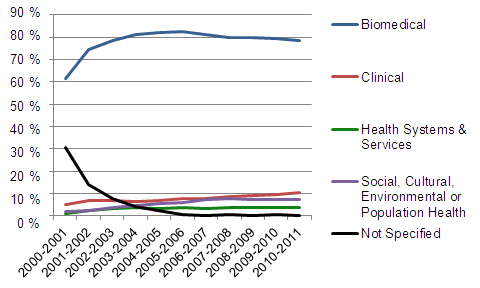

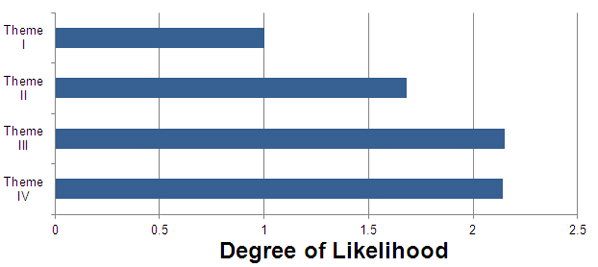

- The OOGP continues to fund mainly biomedical researchers (around 80% of all grants), a proportion that has been consistent since 2002-2003. A stated objective of CIHR's Governing Council is to remove barriers and create opportunities for other research pillars in open programs. A range of barriers and challenges were identified for researchers in Pillars III/IV applying to the OOGP:

- Lower average peer review scores and a higher proportion of applications rated as non-fundable (a score below 3.5 out of 5). Peer reviewers from these research communities give lower average scores to applications, tend to put more emphasis on research methods than track record, and disagree with each other more often in their ratings than those reviewing biomedical research.

- While OOGP funding decisions are made on rankings and not scores, potential impacts of lower scoring include not being eligible for some strategic 'priority announcements' from the OOGP.

- Lower rate of renewal applications and success. Pillar III/IV applicants are less likely than biomedical researchers to submit renewal applications; they also have a reduced likelihood of success. Evidence from representatives of this community suggests that these researchers typically take a more project-based than programmatic approach to submitting OOGP research applications and may also be unaware that renewals are permitted in the OOGP.

- Cross-disciplinary projects. It can be more difficult to find appropriate reviewers for applications that cut across disciplines or methodologies, and also for smaller research communities. Under the current OOGP system of 'standing committees,' some disciplines and fields of research are not explicitly mentioned in the committee mandates, which may deter applicants from applying.


Program Relevance

Evidence from the evaluation speaks to the continued need for the OOGP and the program's alignment with the federal government and CIHR's priorities and with federal roles and responsibilities.

- The program contributes directly to the fulfillment of CIHR's mandate (Bill C-13, April 13, 2000) and aligns with the federal government's priorities as spelt out in the 2007 Science and Technology Strategy (Industry Canada, 2007 & 2009).

- Primary stakeholders are of the opinion that the OOGP is vital for maintaining a world-class research enterprise in Canada.

- The most recent federal budgets continue to affirm the government's commitment to supporting advanced research and "health research of national importance" and the role of Canada's three primary funding agencies in implementing this (Government of Canada, 2011 & 2012).

Conclusions

- The OOGP has been both attracting and funding excellent health researchers since 2000. The scientific impact of publications produced by OOGP-supported researchers provides evidence of how the program outperforms benchmark comparators; case study illustrations of high impact projects demonstrate the longer-term outcomes of this funding.

- The current system of peer review is able to select for excellence, both in terms of who receives funding and also within committee rankings; researchers who are consistently top ranked in peer review have higher scientific impact scores. Qualitative evidence shows that researchers view receiving an OOGP grant as a mark of quality and a validation of their area of research.

- The program has made a significant contribution to the Canadian health research enterprise through support provided to researchers and trainees on OOGP grants.

- The OOGP is being delivered efficiently; costs per application are in line with the limited available benchmarks from other research funders. Satisfaction with program delivery is also generally high among applicants.

- The current system of peer review is, however, placing a heavy burden on reviewers, particularly those from the biomedical research community. While the program is being efficiently delivered, this could be further improved by reducing the peer reviewer workload. 'Virtual peer review' to reduce the time taken to travel to and attend committee meetings is one example of how changes to the peer review process could increase program efficiency while maintaining the quality of peer review.

- Exploratory evidence from 'natural experiments' conducted with OOGP data seems to suggest that while committee peer review is still considered the 'gold standard' by research funders, systems using independent review could result in similar outcomes. While the initial evaluation evidence points in this direction, it is insufficient to draw a firm conclusion and further research in this area is clearly required.

- The time taken by researchers to complete an OOGP application is in line with benchmarks. However, the program's decreasing success rate results in four in five applications not being funded. Streamlining the amount of application information that should be submitted at various points during the process would likely pay considerable dividends, both for researchers and also for cost-efficiency per application, given that researcher time is the largest cost component.

- There is some evidence to suggest that the OOGP is already being utilized as a 'programmatic program' based on the renewal behaviours of applicants (particularly in Pillar I), as well as case study data to illustrate this. It is important to note however that there is far less evidence for this among applicants from communities such as those in Pillar IV (social, cultural, environmental and population health).

- In keeping with CIHR's mandate, the OOGP funds projects from all four of the agency's health research pillars. However, as this evaluation demonstrates, there are variations in how research communities interact with the program. One example of this relates to differences in how researchers discuss and score applications; another is in renewal behaviours.

- The amount and quality of available data to evaluate the OOGP have significantly improved since the last evaluation was conducted in 2005. However, the program still lacks a defined set of performance measures against which to assess progress. The Research Reporting System is designed to collect this data, but future consideration should be given as to which measures are most meaningful and relevant, particularly to inform program improvement. As one example, findings show that a measure on the number of publications per grant is subject to a series of potential confounds.

Knowledge Creation

Evaluation questions

- Have publications by OOGP-funded researchers had a greater scientific impact than those of health researchers in Canada and other OECD countries?

- Has the scientific impact of OOGP-funded publications increased, decreased or remained the same since 2005?

- Has the production of OOGP research outputs per grant increased, decreased or remained the same since 2005?

Introduction

The creation of knowledge is central to the program theory of the Open Operating Grant Program. The program allows researchers to apply with their 'best ideas' from across health research, which, if funded, may result in a wide and diverse range of research outcomes from publications to patents.

There is, of course, no single 'right way' of measuring knowledge creation in relation to research funding programs. Bibliometric analysis is one frequently used approach; academic papers published in widely circulated journals facilitate access to the latest scientific discoveries and advances and are seen as some of the most tangible outcomes of academic research (Goudin, 2005; Larivière et al., 2006; Moed, 2005; NSERC, 2007). Bibliometric analysis of these publications is used to measure, among other things, the volume of a researcher's publications and the relative frequency with which they are cited as a proxy for an article's scientific impact. In this evaluation, the Average of Relative Citations (ARC) is used as a measure of 'scientific impact' (Footnote 3).
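To make the ARC measure more concrete, the following is a minimal sketch in Python of how an average of relative citations is commonly computed. It assumes the usual bibliometric convention in which each paper's citation count is divided by the average citation count for papers of the same field and publication year, and the resulting ratios are then averaged; the precise definition used in this evaluation is the one given in the report's own footnote. The function names and the example figures are hypothetical and are not OOGP data.

```python
from statistics import mean


def relative_citation(citations, field_year_average):
    """Citations of one paper divided by the average for its field and year."""
    if field_year_average <= 0:
        raise ValueError("field/year average must be positive")
    return citations / field_year_average


def average_of_relative_citations(papers):
    """papers: iterable of (citations, field_year_average) pairs."""
    return mean(relative_citation(c, avg) for c, avg in papers)


# Hypothetical papers, for illustration only (not OOGP data).
example_papers = [(30, 20.0), (5, 10.0), (12, 8.0)]
arc = average_of_relative_citations(example_papers)
print(round(arc, 2))
```

Under this convention, a value above 1 indicates that a set of papers is cited more often than the average for comparable papers, and a value below 1 indicates the opposite.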

Critics of bibliometric analysis contend that estimates of publication quality based on citations can be misleading and that citation practices differ across disciplines and sometimes between sub-fields in the same discipline (Ismail et al., 2009). This is a particularly salient issue for CIHR and the OOGP, with a mandate to fund across all areas of health research, including research disciplines where outputs such as books or book chapters may be a more useful and accurate measure of knowledge creation. In light of this, measures of other outputs are also used in this evaluation to assess knowledge creation as a result of the program. A case study approach is also taken to assess highly impactful research conducted as a result of OOGP funding.

It should be noted that the bibliometric analyses in this report are based on data for publications produced by OOGP researchers while supported by these grants. While this method is commonly accepted based on an assumption that these grants are a significant contribution to research output (e.g. Campbell et al., 2010), an outright attribution between grant and publication bibliometric data cannot be made. With further development of CIHR's Research Reporting System, where researchers list publications produced as a result of the grant that can then be linked directly to bibliometric data, this type of analysis should become available for future evaluations.

Have publications by OOGP-funded researchers had a greater scientific impact than those of health researchers in Canada and other OECD countries?

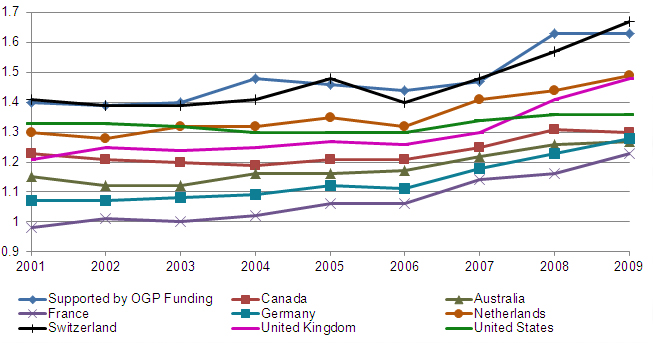

As shown in Figure 1-1, publications produced by OOGP-funded researchers while supported by an OOGP grant have a consistently higher scientific impact (based on ARC) than the average for Canadian health researchers. The analysis also shows that for the period 2001-2009, OOGP-supported papers were cited more often than health research papers from other comparable Organization for Economic Co-operation and Development (OECD) countries (Figure 1-1).

Figure 1-1: Impact of supported papers produced by OOGP-funded researchers vs. OECD health research comparators (2001-2009)

Source: Bibliometric data drawn from Canadian Bibliometric Database built by OST using Thomson Reuters' Web of Science (OOGP sample n=1,500)

It should be noted that the overall average of relative citations for Canada covers all Canadian health researchers, including those funded by the OOGP. The OECD comparators are based on all health researchers within each country, rather than on individual funding agencies or programs. Given the differing mandates for health research funding in agencies such as the National Institutes of Health in the United States or the Medical Research Councils of the United Kingdom or Australia, direct comparisons between agencies could prove problematic. However, future evaluations could assess the feasibility of deriving agency or even program benchmarks based on matching a sub-set of data that is directly comparable (e.g. in biomedical research).

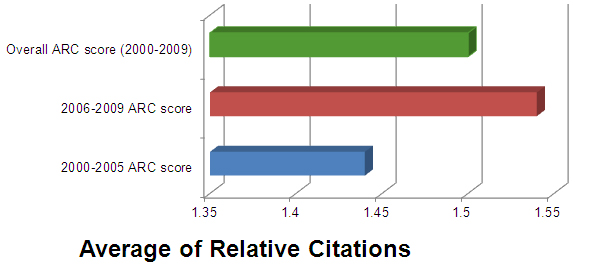

Has the scientific impact of OOGP-funded publications increased, decreased or remained the same since 2005?

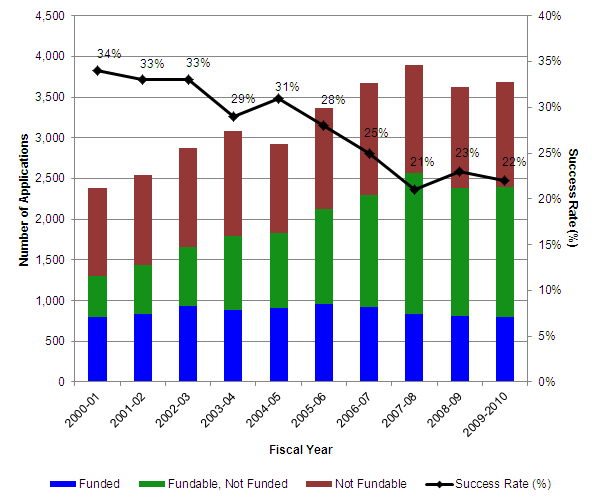

As shown in Figure 1-2, the scientific impact of supported papers produced by OOGP-funded researchers has significantly increased between the periods 2001-2005 and 2006-2009 (1.44 for 2001-2005 vs. 1.54 for 2006-2009, p<0.001) (Footnote 4).

One potential factor in this increase relates to an increasingly competitive environment for applying for OOGP funding. Success rates based on the number of applicants funded compared with the number of applications have decreased by 12 percentage points from 2000-2001 (34%) to 2009-2010 (22%). CIHR's investment in the program has doubled over this period ($201.2m in 2000-2001 to $419.1m in 2010-2011), but the OOGP has attracted an increasing number of applications (under 2,500 in 2000-2001 to over 4,500 in 2010-2011).

Figure 1-2: Scientific impact of OOGP-supported research papers (ARC)

Source: Bibliometric data drawn from Canadian Bibliometric Database built by OST using Thomson Reuters' Web of Science (OOGP sample n=1,500)
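The application-pressure figures quoted above mix percentage-point changes with relative changes, and the distinction is easy to lose. The following is a minimal illustrative sketch in Python using only the numbers stated in the text; the function and variable names are assumptions introduced here. Because the text gives the application counts only as "under 2,500" and "over 4,500", the growth in applications is expressed as a lower bound.

```python
def percentage_point_change(start_pct, end_pct):
    """Difference between two percentages, in percentage points."""
    return end_pct - start_pct


def relative_change(start, end):
    """Change expressed as a fraction of the starting value."""
    return (end - start) / start


# Success rate: 34% (2000-2001) to 22% (2009-2010).
success_pp = percentage_point_change(34, 22)
success_rel = relative_change(34, 22)

# Program investment in $ millions: 201.2 (2000-2001) to 419.1 (2010-2011).
funding_ratio = 419.1 / 201.2

# Applications rose from under 2,500 to over 4,500, so growth exceeds this ratio.
applications_min_ratio = 4_500 / 2_500

print(f"Success rate change: {success_pp} points ({success_rel:.1%} relative)")
print(f"Investment multiplied by about {funding_ratio:.2f}")
print(f"Applications multiplied by more than {applications_min_ratio:.1f}")
```

On these figures, the 12 percentage point drop corresponds to a relative fall of about 35% in the success rate, while investment slightly more than doubled and applications grew by more than a factor of 1.8.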

Feedback from a recent petition on declining success rates, initiated by Canadian health researchers (Footnote 5), identifies a range of undesirable consequences of higher application pressure from a researcher perspective. These include the loss of highly qualified personnel due to inconsistent funding, a danger to the research "pipeline" producing the next generation of health researchers, the loss of international competitiveness, difficulty in conducting peer review effectively, and spending more time preparing unsuccessful applications.

Has the production of OOGP research outputs per grant increased, decreased or remained the same since 2005?

The number of journal publications produced as a result of an OOGP grant provides a further measure of knowledge creation. There are of course significant limitations as to how these data can be used and interpreted; simply producing a peer-reviewed publication gives no indication of its quality. However, when considered alongside bibliometric analyses, this measure provides useful basic data on the outputs that result from investment in the program, as well as some insight into the publishing behaviours of the different parts of CIHR's health research communities in the OOGP.

As displayed in Table 1-1, available data from CIHR's Research Reporting System (RRS)Footnote 6 shows that OOGP-funded researchers published an average of 7.6 papers per grant. The data also suggests that the overall production of OOGP-funded knowledge outputs, as measured by journal articles, has increased since 2004 (p<0.05). It should however be mentioned that this observed increase may be attributable to an overall increase in journal productivity observed globally (Archambault, 2010). Data on Canadian publication trends suggests that the total number of papers published by Canadian researchers has steadily climbed from approximately 27,000 in 2000 to approximately 37,500 papers in 2008 (Archambault, 2010).

| | Mean | N | Standard Deviation | Sum |
|---|---|---|---|---|
| Pre 2004 Grants | 7.2 | 553 | 8.7 | 3,965 |
| Post 2004 Grants | 8.9 | 153 | 8.8 | 1,364 |
| All Grants* | 7.6 | 706 | 8.8 | 5,329 |

Source: Research Reporting System, 2008 Pilot (N=565); Current Research Reporting System 2011-2012 (N=141)

Further analysis shows that journal article production is moderately correlated with the value and duration of the grants awarded (r=0.42, n=706, p<0.001 for both independent variables). Additionally, the value and duration of grants are strongly correlated with each other: longer grants tend to have more money (r=0.71, n=706, p<0.001). Therefore, it seems as if the duration of a grant has an important relationship with the number of publications produced. However, grant duration is not consistent throughout the four pillars. Biomedical researchers have the longest grant durations on average (3.4 years) compared with the other three pillars (3.0, 2.3 and 2.8 years for Pillars II, III and IV respectively); these differences are statistically significant (p<0.001).Footnote 7

The importance of a grant's duration and the differences in duration by pillar may have an impact on the overall reported productivity for each pillar; those with longer grant durations conduct research over a longer period of time, which can then lead to having more findings to publish. Biomedical and clinical researchers have longer grants than the other pillars, and also report a higher number of publications. This difference in publication output has typically been attributed to differences in publishing behaviour between those in the biomedical community and those in the social sciences. The average numbers of publications per grant reported in the RRS are: Pillar I – 8.07; Pillar II – 6.86; Pillar III – 2.93; and Pillar IV – 6.57, with an overall average of 7.55 (p<0.01).

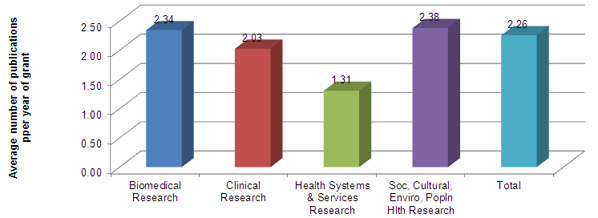

To account for the differences in grant duration between pillars, the number of journal articles was normalized by grant duration.Footnote 8 Normalization was carried out by dividing the number of journal articles reported by the duration of the grant. The results suggest that publication productivity for most researchers is very similar when duration of grant is controlled for. The differences between pillars approached significance (p=0.06), likely due to the effect of the different publication behavior of Pillar III researchers (Figure 1-3).Footnote 9

Figure 1-3: Journal article productivity per year of grant duration by pillar of respondent

Source: Research Reporting System, Pilot (N=596) and Current (N=141)
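The normalization described above can be illustrated with a minimal sketch. It uses the pillar-level averages quoted in this section (mean publications per grant and mean grant duration) purely for illustration. The evaluation normalized each grant individually, so these ratios of averages are an assumption and will not reproduce the values plotted in Figure 1-3. The function and variable names are hypothetical.

```python
# Illustrative only: pillar-level averages quoted in this section.
MEAN_PUBLICATIONS = {
    "Pillar I": 8.07, "Pillar II": 6.86, "Pillar III": 2.93, "Pillar IV": 6.57,
}
MEAN_DURATION_YEARS = {
    "Pillar I": 3.4, "Pillar II": 3.0, "Pillar III": 2.3, "Pillar IV": 2.8,
}


def articles_per_grant_year(articles: float, duration_years: float) -> float:
    """Normalize a publication count by the duration of the grant in years."""
    if duration_years <= 0:
        raise ValueError("grant duration must be positive")
    return articles / duration_years


normalized = {
    pillar: articles_per_grant_year(MEAN_PUBLICATIONS[pillar], MEAN_DURATION_YEARS[pillar])
    for pillar in MEAN_PUBLICATIONS
}

for pillar, rate in normalized.items():
    print(f"{pillar}: {rate:.2f} articles per grant-year")
```

Even on these rough figures, Pillars I, II and IV fall close together once duration is taken into account, while Pillar III remains lower, which is consistent with the pattern described in the text.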

The significance of this analysis from an OOGP evaluation perspective is that it shows how assessing productivity by simply counting publications can be misleading. Future performance measurement of the program should take account of these and other potential confounds when reporting on this measure of knowledge creation.

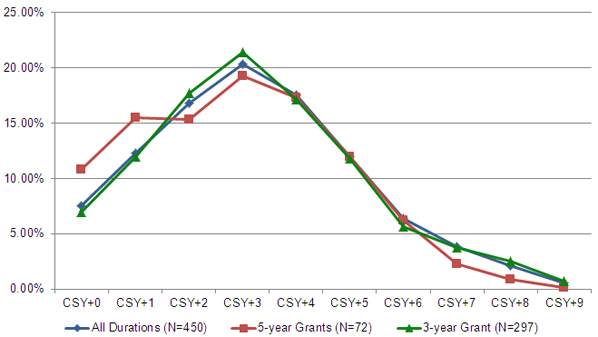

Publication peaks three years after competition year

Accurately measuring OOGP research outputs through data collection tools like the Research Reporting System (RRS) relies on understanding the publication behavior of researchers. Data has been collected on OOGP grants whose authority-to-use-funds expiry dates fell between January 1, 2000 and July 31, 2008. This allows for analyses covering a longer interval between competition start year (CSY) and the publishing year of subsequent publications linked to these grants.

Figure 1-4 shows the publication behaviour of OOGP-funded researchers following their competition year. This data is analyzed by length of grant (3 or 5 years) to assess potential differences in publication behaviour based on duration of funding. For both grant durations, approximately 95% of related journal publication outputs had been published by eight years after competition start year (CSY+8). The peak publication period for both grant durations occurred in CSY+3.

Further analyses of this data show that the average time to publish the first journal article from the start of a grant was 2.18 years, with significant variation across pillar. Pillar I researchers published their first paper, on average, 2 years after the start of their grant, followed by Pillar IV researchers after 2.6 years, Pillar II researchers after 3 years, and Pillar III researchers after 3.2 years (p<0.001).

Figure 1-4: Publication behavior by grant duration - When do supported researchers publish?

Source: Research Reporting System, Pilot and Current (N=492).

Again, the significance of this analysis is that performance measurement for this and other similar programs should be sensitive to such differences in publishing behaviour. Collecting data on publications at a single point in time after the end of a grant may result in undercounting among certain communities.

Books, book chapters and reports resulting from OOGP grants

The use of bibliometric data to measure knowledge creation may disadvantage researchers from areas that do not traditionally use journal articles as their primary dissemination medium. For these researchers, books/book chapters or reports may be considered as their more significant evidence of creating and disseminating knowledge. It is therefore important to measure these other knowledge outputs.

As can be seen in Figure 1-5, data for these outputs reveal two trends. First, it appears that Pillar III researchers produce fewer books/book chapters than their peers in the other three pillars. Conversely, it seems that Pillar III researchers produce, on average, more reports than their peers in Pillars I and II. These observed differences in books/book chapters and report production behavior are not however statistically significant, due to small sample sizes of researchers reporting having produced any of these outputs.

Figure 1-5: Books/book chapters and reports published as a result of OOGP grants

Source: Research Reporting System, Pilot (N=596) and Current (N=141)

Program Design and Delivery

Evaluation questions

- Is the OOGP peer review process able to identify and select future scientific excellence?

- How satisfied are OOGP applicants with the delivery of the application, peer review and post-award processes?

- Is the OOGP being delivered in a cost efficient manner?

- Is the current project-based OOGP funding model an appropriate design for CIHR and the federal government to support health research?

- What alternative designs could be considered?

Introduction

This evaluation takes place at a time when CIHR is considering wide-ranging options for the redesign of its open programs, including the OOGP. At the time this evaluation took place, the agency had entered into a series of consultations with the health research community to obtain their feedback on the future direction of CIHR's investigator-driven programs.

This evaluation of the Open Operating Grant Program provides evidence to feed into the agency's program redesign process. Through evaluating existing data from the OOGP, the evaluation can provide a further body of evidence for the decisions that are to be made on program redesign, as well as benchmarks against which the success of these future changes can be measured.

This section of the report relating to program design and delivery can therefore be divided into two parts:

- Analysis of the existing design and delivery of the OOGP – the extent to which the current peer review process identifies and selects future scientific excellence, satisfaction with program delivery and a cost efficiency analysis of program delivery.

- Alternative designs for the OOGP – findings that can feed into CIHR's program redesign process, including a research program funding scheme and potential implications of taking alternative approaches to conducting peer review.

Is the OOGP peer review process able to identify and select future scientific excellence?

A key element of the design of the Open Operating Grant Program is that the peer review process should be able to select applications from applicants who have the most excellent research ideas. One indication that this process is working as anticipated is whether selected applications, particularly those ranked highly by peer review committees, lead to publications with higher scientific impact (measured using the Average of Relative Citations) than unselected applications. This approach to assessing peer review has been taken by other funders, including in a study for the Alberta Ingenuity Fund (Alberta Ingenuity Fund, 2008).

The scientific impact of publications of successful OOGP applicants was well above those of unsuccessful applicants and applicants who have never been funded by the OOGP (Figure 2-1). For the period 2000-2009, successful applicants had an ARC of 1.54 compared with the Canadian average of 1.24. Supporting the hypothesis that a highly competitive OOGP attracts excellence, unsuccessful applicants also had an ARC score well above the Canadian health research average (ARC of 1.45), with even those applicants who have never been successful in obtaining OOGP funding showing above average scores (ARC of 1.36).

Figure 2-1: Impact of applicants for two years following competition by application status and Canadian papers in health fields by publication year (2000-2009) (ARCs)

Source: Bibliometric data drawn from Canadian Bibliometric Database built by OST using Thomson Reuters' Web of Science (OOGP sample n=1,500)

OOGP funding decisions are determined by percentile rankings of applications based on an algorithm involving averages across committee ratings and the number of applications reviewed by that committee. If the peer review process works well, it would be expected that better ranked applications should subsequently result in stronger scientific impact scores than lesser ranked applications. Bibliometric analysis (Figure 2-2) showed that papers produced by researchers who were always ranked in the top 10 percentile (top ranked) of their peer review committee when applying to the OOGP had a stronger scientific impact (ARC of 1.91) than those who were sometimes top ranked (ARC of 1.64) or never top ranked (1.38).

Figure 2-2: Average Relative Citations of supported papers of funded researchers by peer review committee percentile ranking and publication year (2001-2009) (ARCs)

Source: Bibliometric data drawn from Canadian Bibliometric Database built by OST using Thomson Reuters' Web of Science (OOGP sample n=1,500)

The evidence from this analysis therefore provides support to the hypothesis that OOGP peer review committees are selecting the 'best research ideas' as measured by resulting outcomes - subsequent publications and their impact.

How satisfied are OOGP applicants with the delivery of the application, peer review and post-award processes?

Levels of satisfaction with the application and peer review processes provide a researcher perspective on the efficacy of program delivery by CIHR. These findings can be used to identify areas of future improvement for delivering the OOGP. Footnote 10

Table 2-1 shows data on elements relating to the application and peer review processes: 1) researcher satisfaction with each element of the process; 2) the extent to which researchers identify each aspect as important; 3) whether respondents see each element as an area for improvement; and 4) whether they feel that the delivery of this element has got better or worse over the last five years.

As might be expected, levels of satisfaction are higher with the more straightforward 'transactional' aspects of program delivery, such as submission of applications, the application instructions or timeliness of posting results. By contrast, the more complex processes involved in peer review have lower satisfaction ratings, and are generally viewed as more important.

Considering these findings in the context of other benchmarks, the OOGP's scores compare favorably with those reported in the recent summative evaluation of the Standard Research Grants (SRG) program of the Social Sciences and Humanities Research Council (SSHRC, 2010): clarity of application instructions, 67% for the OOGP vs. 61% for SRG; and ease of submission of application, 72% for the OOGP vs. 52% for SRG.Footnote 11 The data also showed that successful applicants tended to be more satisfied than unsuccessful applicants, a finding mirrored in the SRG evaluation and more generally in client satisfaction surveys.

| Stage | Element | % Very or Somewhat Satisfied | Most important aspect | Area for improvement | % worse in last five years |
|---|---|---|---|---|---|
| Application Process | ResearchNet's - capabilities for supporting CIHR's application process | 72.8% | 7.1% | 1.2% | 4.1% |
| | ResearchNet's - ease of submission of application | 72.7% | 21.6% | 4.9% | 13.0% |
| | Completeness of the application instructions | 69.6% | 7.0% | 1.3% | 7.0% |
| | Reasonableness of the information that you are required to provide | 68.1% | 14.5% | 4.3% | 11.7% |
| | Clarity of the application instructions | 67.7% | 16.3% | 3.9% | 8.3% |
| | ResearchNet's - effort required to complete application | 64.2% | 17.5% | 5.7% | 10.4% |
| | Timeliness of posting results | 61.6% | 8.5% | 4.1% | 8.2% |
| | Fairness of policies relating to applications to CIHR | 57.2% | 28.1% | 10.5% | 16.7% |
| | Time available to submit an application following the launch of a funding opportunity | 55.6% | 13.8% | 3.7% | 9.5% |
| Peer Review Process | Usefulness of written feedback from the peer review process | 48.9% | 41% | 12.7% | 22.1% |
| | Clarity of the rating system | 43.3% | 11.1% | 5.1% | 15% |
| | Clarity of the evaluation criteria | 42.6% | 20.2% | 8.9% | 15.8% |
| | Quality of peer review judgments | 38.9% | 74.1% | 47.7% | 32.6% |
| | Consistency of peer review judgments | 26.2% | 53.6% | 25.6% | 34.5% |
| Post Award Administration1 | Reasonableness of policies relating to the use of grant funds | 49% | Not asked* | 36% | 12% |
| | Coherence of policies on the use of funds among CIHR programs | 43% | Not asked* | 21% | 7% |
| | Understanding of how the reports are used by CIHR | 23% | Not asked* | 21% | 8% |

Source: 2011 IRP Report Ipsos Reid Survey (data filtered by researchers who have applied to the OOGP n=1,909).

Areas for improvement

While these findings can be considered as broadly positive, when looking to make program delivery improvements it is useful to focus on elements that meet the following conditions:

- Lower levels of applicant satisfaction.

- Identified as important by applicants.

- Identified as areas for improvement by applicants.

- Perceived to have got worse over the last five years.

The shaded rows in Table 2-1 show two elements which meet all of the above criteria, both of which relate to peer review: the quality of peer review judgments; and, the consistency of peer review judgments.

The quality of peer review judgments particularly stands out on these measures. It is ranked by applicants as the most important element in the application process, with around three in four rating it as important (74.1%). Just under one in two applicants (47.7%) state that it is an area for improvement.

It is recommended that further study should be conducted to explore how applicants are rating 'quality' in this context. This could for example relate to aspects of delivering the processes involved in peer review or to an assessment of which applications are selected; if applicants feel that the strongest applications were not selected, this could be considered as evidence of a lack of 'quality'. Analysis of the findings for these two elements by CIHR pillar reveals few differences across pillars.

Is the OOGP being delivered in a cost-efficient manner?

To assess cost-efficiency in delivering the OOGP, we replicate in this evaluation a peer-reviewed published study conducted by researchers with data from the National Health and Medical Research Council (NHMRC) of Australia (Graves, Barnett & Clarke, 2011).

A key innovation used in this evaluation, based on Graves et al.'s study, is not simply to consider the administrative costs to CIHR of delivering the OOGP, but also to calculate the costs of the program to applicants and peer reviewers. As noted, CIHR's proposed program reforms aim to reduce the amount of time spent by researchers in preparing applications and to make peer review more efficient. This study quantifies the financial implications of time spent by researchers and peer reviewers.

The analysis conducted also improves on the study conducted by Graves et al. in that data from a larger sample survey of peer reviewers and researchers is included in the calculations shown in Table 2-2. This should give greater confidence in the validity and generalizability of these findings. We take the 'ingredient approach' to cost analysis which is based on the notion that every program (e.g. the OOGP) uses ingredients that have a value or cost (Levin & McEwan, 2001).

As can be seen in the detailed breakdown provided in Table 2-2, the average cost per OOGP application, including administrative costs (direct and indirect), applicant and reviewer 'costs' (monetized time) is $13,997. This compares with an average cost for the Australian NHMRC comparison of $18,896.

As can be seen in Table 2-3, the overall direct and indirect administrative cost per grant application ($1,307) is in fact comparable with those obtained for the Australian NHMRC ($1,022) and the US National Institutes of Health ($1,893).

| Cost Items | Open Operating Grant Program | Australian National Health and Medical Research Council | Notes |
|---|---|---|---|
| Applicants | | | |
| Average number of hours to complete an application (a) | 168.6 | 160-240 hours (20–30 eight-hour days) | CIHR Open Reforms Survey February-March 2012 (N=378) |
| Average hourly wage (b) | $64.52 | $67.17-$100.76 | Weighted average of academic salaries was calculated with data from Statistics Canada 2010/11. The Australian study gives only gross figures. The hourly wage was calculated by the evaluation unit using the cost per application and dividing it by the minimum and maximum length of time it took to complete an application. |
| Total number of program applications (annually) (c) | 4636 | 2705 | 2338 applications in Sept 2010 & 2298 in March 2011 OOGP competitions |
| Total cost to applicants (d) (a*b*c=d) | $50,430,742 | $43,610,114 | A$40.85 million, converted into Can$ using XE Quick Cross Rates on Feb. 17, 2012. |
| Applicant cost per grant application (e) (d/c=e) | $10,878 | $16,122 | |
| Peer Reviewers | | | |
| Average number of hours spent (at home) per reviewer independently reviewing all applications (f) | 43.93 | Not available | Survey of OOGP Peer Review Committee Chairs, SOs & Reviewers January 2012 (N=457)* |
| Average number of hours spent (at home) per reviewer per application | 5.25 | 4 | Survey of OOGP Peer Review Committee Chairs, SOs & Reviewers January 2012 (N=457)* |
| Average number of hours spent per reviewer participating in committee meetings (g) | 23.82 | 46 | Survey of OOGP Peer Review Committee Chairs, SOs & Reviewers January 2012 (N=457)* |
| Average number of hours spent per reviewer travelling to committee meeting (h) | 7.15 | Not available | Survey of OOGP Peer Review Committee Chairs, SOs & Reviewers January 2012 (N=457)* |
| Total hours spent per reviewer in review process including travel (j) (f+g+h=j) | 74.9 | Not available | Survey of OOGP Peer Review Committee Chairs, SOs & Reviewers January 2012 (N=457)* |
| Average hourly wage (k) | $64.52 | Not available | Weighted average of academic salaries was calculated with data from Statistics Canada 2010/11 |
| Total annual cost to reviewers (m) (j*k*1738=m) | $8,398,968 | $4,739,471 | CIHR runs two competitions annually; the March 2011 review meetings involved 861 reviewers while the November 2011 meetings involved 877 reviewers, for a total of 1,738 reviewers. Note that reviewers often participate in both competitions. Therefore, the total number of reviewers does not represent unique reviewers. |
| Reviewer cost per grant application (n) (m/4636=n) | $1,812 | $1,752 | |
| Agency-Related Delivery Costs (administrative costs) | | | |
| Peer review-related costs (travel, honoraria, hotels, meeting rooms, courier services) (p) | $1,994,317 | Not available | Data from CIHR Finance |
| Personnel costs (KCP, PPP, ITAMS Branches) (q) | $3,553,433 | Not available | Data from CIHR Finance and other groups: PPP; KCP; and ITAMS |
| Non-salary direct overheads, facilities, materials, supplies (r) | $513,407 | Not available | Data from CIHR Finance and other groups: PPP; KCP; and ITAMS |
| Total agency-related administrative costs (s) (p+q+r=s) | $6,061,157 | $2,764,516 | Disparities between the total administrative costs relate to the NHMRC running one competition per year compared with two for the OOGP. A$2.59 million converted into Can$ using XE Quick Cross Rates on Feb. 17, 2012. |
| Agency-related costs per grant application (t) (s/c=t) | $1,307 | $1,022 | |
| TOTAL | | | |
| Full annual cost of funding exercise (u) (d+m+s=u) | $64,890,867 | $51,114,101 | |
| Full cost per grant application (v) (u/c=v); also (v=e+n+t) | $13,997 | $18,896 | |
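The arithmetic behind the OOGP column of Table 2-2 can be reproduced with a short sketch of the 'ingredient approach'. The input values are copied from the table; the function and variable names are hypothetical, and the sketch simply follows the formulas given in the row labels (a*b*c=d, f+g+h=j, j*k*1738=m, p+q+r=s, u/c=v) rather than representing the evaluation unit's actual tooling.

```python
# Inputs copied from the OOGP column of Table 2-2.
HOURS_PER_APPLICATION = 168.6                       # (a)
HOURLY_WAGE = 64.52                                 # (b) and (k)
APPLICATIONS_PER_YEAR = 4636                        # (c)
HOURS_PER_REVIEWER = 43.93 + 23.82 + 7.15           # (j) = f + g + h
REVIEWER_COUNT = 1738                               # reviewers across both competitions
ADMIN_COSTS = 1_994_317 + 3_553_433 + 513_407       # (s) = p + q + r


def cost_per_application() -> dict:
    """Return each cost ingredient expressed per grant application."""
    applicant_total = HOURS_PER_APPLICATION * HOURLY_WAGE * APPLICATIONS_PER_YEAR  # (d)
    reviewer_total = HOURS_PER_REVIEWER * HOURLY_WAGE * REVIEWER_COUNT             # (m)
    full_total = applicant_total + reviewer_total + ADMIN_COSTS                    # (u)
    return {
        "applicant": applicant_total / APPLICATIONS_PER_YEAR,   # (e)
        "reviewer": reviewer_total / APPLICATIONS_PER_YEAR,     # (n)
        "agency": ADMIN_COSTS / APPLICATIONS_PER_YEAR,          # (t)
        "full": full_total / APPLICATIONS_PER_YEAR,             # (v)
    }


costs = cost_per_application()
for ingredient, value in costs.items():
    print(f"{ingredient:>9}: ${value:,.0f} per application")
```

Keeping the three ingredients separate makes it easy to see that applicant time, not agency administration, accounts for most of the cost per application.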

| Cost Proportions | CIHR | NHMRC (Australia) | NIH (United States)* |
|---|---|---|---|
| Reviewer costs (travel, hotel, per diem) | $1,994,317 | N/A | $37,624,717** |
| Staff, space, other costs | $4,066,840 | N/A | $71,273,149** |
| Total direct costs | $6,061,157 | $2,764,516¥ | $108,902,306** |
| Number of applications | 4636 | 2705 | 57531 |
| Agency-related delivery cost per grant application | $1,307 | $1,022 | $1,893** |

Is the current project-based OOGP funding model an appropriate design for CIHR and the federal government to support health research?

Project-based and program-based funding models are both used by research funding agencies worldwide to support research excellence. Project-based funding supports ideas: "a defined piece of research with a beginning, middle, and end point" (CIHR, 2012a). Project-based funding has been successfully implemented by the National Institutes of Health (e.g., NIH Research Project Grant Program – R01), and the Gates Foundation (e.g., Grand Challenges in Global Health competition) (Grand Challenges in Global Health, 2011; Ioannidis, 2011; Azoulay, Graff-Zivin & Manso, 2009; Jacob & Lefgren, 2007).

Programmatic grants support researchers by funding: "a broad program of research over a number of years, usually at a fixed rate, but sometimes varying in relation to the type of research and the costs involved" (CIHR, 2012a). Several funding agencies, such as the Wellcome Trust in the UK and the Howard Hughes Medical Institute in the US, have successfully implemented programmatic funding schemes with positive results. Both models have their merits and there is no evidence to suggest that one is necessarily 'better' than the other.

The current OOGP originates from the CIHR's predecessor, the Medical Research Council, and uses a project-based funding approach to support research. While health research and approaches to research funding have evolved in Canada and across the world, only relatively minor changes have been made to the OOGP (for example to methodologies for ranking applications in peer review committee). The agency has generally chosen to respond to new opportunities and challenges by creating a range of other programs, for example in the areas of knowledge translation and commercialization. However, while the OOGP itself has not significantly changed, the communities it serves have done so, and applicant behaviour has also evolved.

Applicants frequently renew OOGP grants

The extent to which applicants renew grants can be seen as one measure of the OOGP funding longer-term 'programmatic' grants, rather than shorter projects. One important caveat to consider here is that renewal behaviour is not consistent across all pillars of research; those in CIHR's health systems and services and population health pillars (Pillars III and IV) are far less likely than others to apply for renewals and to have grants successfully renewed, at least in part due to the nature of their research community. Notwithstanding this limitation, as the OOGP continues to fund largely biomedical research (around 80% of grant holders), grant renewals are one acceptable proxy measure for 'programmatic funding' behaviour.

Analysis of data on successful and unsuccessful renewal applications submitted to the OOGP from 2000-2010 shows that over this period, between 23.6% and 51% of approved applications had been previously funded at least once (Figure 2-3). If one or more renewals of a successful application is taken as an indication of programmatic funding, then the data appear to confirm the existence of programmatic funding "behavior" in the OOGP among some researchers.

Figure 2-3: Previously funded status of OOGP renewal applications with FRNs, by Competition (2000-2010).

First version means not previously funded; more than one version means previously funded at least once. Data from 2000-2003 may be incomplete due to database conversion issues from the MRC to CIHR era and should be used with caution.

Source: CIHR Electronic Information System data for the OOGP (2000-2010) (N=37,604) provided by the CIHR Performance Measurement and Data Production Unit.

While the data shows a gradual decline from the 51% renewals that occurred in the March 2000 competition to under 24% in the September 2010 competition, the continued existence of programmatic funding "behavior" in more than one out of every five applications underlines its continued importance. There is a case to be made that these applicants are already operating in a "programmatic funding mode," applying and re-applying for the same research, without enjoying the benefits of ongoing stable programmatic funding. For these applicants, longer program grants would likely reduce the time spent on applying for funding, freeing up time to concentrate on their research program.

Evidence provided later in this report from the case studies of highly impactful OOGP funded research also illustrates the existence of programmatic funding within the OOGP's project based model and how this operates from a researcher's perspective. Some of the principal case-study participants directly supported the concept of programmatic funding, citing that:

"Writing funding grants was a time-consuming process and writing research grants on a frequent basis was taxing on [their] time and focus".

Examples of programmatic funding from the case studies include:

- Dr. Caroline Hoemann's research on hybrid chitosan blood clots: Defining Therapeutic Inflammation in Articular Cartilage Repair, is on its first renewal, and Dr. Hoemann is a co-PI on a grant on its second renewal (Mechanisms and Optimisation of Marrow Stimulated Cartilage Repair, funded from 2006-2015).

- Dr. Daniela O'Neill's research on the development and understanding of children's minds and its relation to children's communication development and the development of the Language Use Inventory (LUI) was made possible through support provided by the OOGP. Dr. O'Neill has received a total of four OOGP grants related to the LUI, with one of these being renewed once.

Case study participants agreed that regardless of funding being project or program based, their research labs needed uninterrupted funding to ensure continuity and retention of HQP and that:

"Continuity of grants through renewal processes and the ability to access operating grants were important attributes of the CIHR funding mechanism."

Generally, in competitions for programmatic grants, it would be expected that the track record of researchers plays an important role as the committee needs to have confidence that the applicant can deliver on a program of research. In the context of the OOGP, the behaviour of peer reviewers can provide further evidence around de facto programmatic funding that may be taking place. A content analysis of reviewer comments submitted by OOGP peer review committee members and scientific officers between 2004 and 2008 concluded that "track record has become increasingly important in judging the merit of grant proposals" (CIHR Evaluation Unit, 2009).

The above evidence appears to suggest that some applicants and reviewers are behaving as if programmatic funding exists within the OOGP. If this is the case, it would seem to lend support to CIHR's proposals to use both types of funding mechanisms in its open competitions (CIHR, 2012a). The current context presents an opportunity to introduce changes to support both project-based and programmatic funding. It should be noted, however, that indications of the use of track record as a criterion vary across committees and that CIHR has not formally stipulated its inclusion or assigned any weight to it in the current OOGP application review process.

What alternative designs could be considered – peer review?

CIHR has recently been consulting with stakeholders on the redesign of its open suite of programs including alternative models of delivering peer review. The potential implications of these alternative designs are considered in this section, in the context of the OOGP's future design and delivery.

In its consultations on enhancements to peer review, CIHR is exploring design elements that would reduce the overall time a reviewer spends reviewing, discussing, and providing feedback on an application. A multi-phased competition process that involves a two-stage screening process prior to face-to-face review is being considered, together with structured review criteria and conducting screening reviews and conversations in a 'virtual space.' The agency aims to make more judicious use of face-to-face committee meetings as a mechanism to integrate the results of remote reviews and determine the final recommendation for funding (CIHR, 2012a). This should improve efficiency and economy from an agency, applicant and reviewer perspective.

It is worth noting that despite the fact that peer review is the primary vehicle used by many major funding agencies worldwide to assess applications, relatively little is known about how it impacts the quality of funded research (Graves et al., 2011). A recent Cochrane study of peer review (Demicheli & di Pietrantonj, 2007) recommended greater examination of the efficiency and effectiveness of the peer review process as used by research funding organizations.

Assessing outcomes of applications selected by independent review compared with face-to-face discussion

In addressing the evaluation question on the OOGP's current peer review processes and to inform future designs, we focus here on two pertinent lines of enquiry:

- Assessing relationships between rankings of independent reviewer scores (submitted prior to peer review committee meetings) and rankings of face-to-face committee scores under the current OOGP peer review design.

- A bibliometric analysis of the scientific impact of researchers (measured by the Average of Relative Citations) to assess the 'quality' of what was funded by face-to-face committees compared with what would have been funded based only on independent reviewer scores.

In a redesigned peer review system that relies in its initial stages on independent review of funding applications, it is necessary to have confidence that those initially selected to proceed without committee discussion are the most meritorious. Without this, there is a risk that promising applications would be screened out. This type of redesign would also require evidence that selection using methods other than the existing form of face-to-face peer review will not have a detrimental effect on the outcomes of funded research. In short, CIHR needs to be sure that its open programs will continue to fund excellence.

To understand the analysis conducted, it is first necessary to briefly describe the current OOGP peer review process. This involves three main stages of selecting applications:

- Review scores (at-home scores) are provided by at least two reviewers working independently of each other.

- A 'consensus score,' agreed to by the two independent reviewers after discussion by the full review committee (15 members on average, ranging from 6 to 27 members).

- A final committee score representing the average of the scores of all committee members.

The first line of enquiry therefore focuses on using application scoring data from stages 1 and 3 in a 'natural experiment' that compares rankings derived from the independent assessment scores of applications by reviewers with the final rankings provided by the peer review committee after discussion.Footnote 12

If there is a high degree of congruency between the rankings derived from the independent reviewers' scores and the final committee scores, it can be hypothesized that the initial review of applications produces much the same outcome as a face-to-face discussion. A further analysis assesses the sensitivity and specificity of a hypothetical independent reviewer funding model using committee rankings as the 'gold standard' against which they can be compared.

The second line of enquiry involves a bibliometric analysis based on the scientific impact of publications produced by researchers following their application to the OOGP (using the Average of Relative Citations). This is designed to compare the impact scores of OOGP applicants selected at face-to-face peer review committee only, at the independent review stage only, at both stages or at neither stage.

For both of these lines of enquiry, several important limitations and caveats must be acknowledged. To highlight some of the more important ones:

- Independent review scores are given by reviewers who then bring these to peer review committees to discuss them; these two samples are not therefore 'independent' of each other, in that the final committee scores are influenced by the initial reviewers.

- CIHR's proposed redesign would include more than two reviewers at the initial stage, so we should expect increased confidence in their assessments.

- The bibliometric data analyzed are based on linking impact scores to researchers, rather than applications, and furthermore cover only a short duration. It is difficult to separate the effect of publications based on OOGP research from that of publications based on other projects, and prior publication record is itself one of the criteria used by independent reviewers and committees to score applications.

- Reverse causality is another limitation, since funding of the project will in theory influence subsequent publication success. It is important to note though that, while intuition would suggest that receiving funding is likely to make a researcher more prolific, one study of NIH's R01 grants suggests that this is only marginally true (Jacob & Lefgren, 2007). The study found that receiving an R01 grant only resulted in an increase of approximately one article over a five-year period per funded researcher, controlling for pre-existing conditions.

Notwithstanding these limitations, given the global paucity of evidence on peer review, our analyses begin to address some of the questions that many funding agencies around the world are asking. It is evident that further research is needed, either within the context of future evaluations of CIHR's Open Operating Grant Program and its replacement, or as part of an agency research program to understand the impact of these changes.

Assessing relationships between independent reviewer scores and committee scores

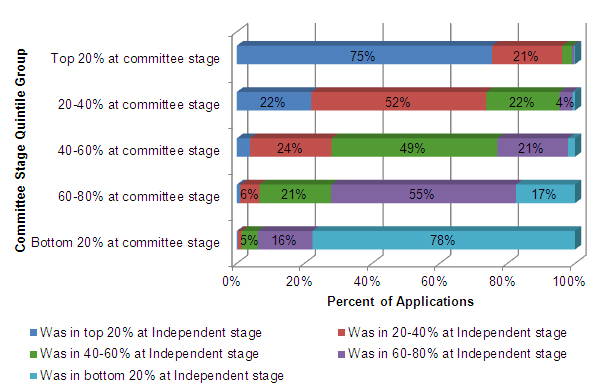

Approximately 75% of the OOGP applications that were funded at the committee stage would also have been funded based on their initial independent review ranking. As shown in Figure 2-4, there is concordance between independent review and committee scores for the most excellent applications (top 20%) and for those ranked lowest (bottom 20%). As other studies have also shown, the greater variability in the three middle groupings reflects greater difficulty in determining merit for proposals that fall between the two extremes (e.g. Cole et al., 1981; Martin and Irvine, 1983; Langfeldt, 2001; Cicchetti, 1991).

Figure 2-4: Concordance between independent and committee stage assessments

Source: Electronic Information System OOGP data, 2005-2010 (N=21,266) provided by the CIHR Performance Measurement and Data Production Unit.
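The kind of quintile comparison summarized in Figure 2-4 can be sketched as follows. This is a minimal illustration and not the procedure used by the evaluation team: the function names and the ten scores are hypothetical, higher scores are assumed to be better, and ties are assumed to be broken by input order.

```python
from collections import Counter

def quintile_of_rank(scores):
    """Return a quintile label (1 = top 20%, 5 = bottom 20%) for each score.

    Assumption: higher scores rank better; ties are broken by input order.
    """
    n = len(scores)
    order = sorted(range(n), key=lambda i: -scores[i])
    labels = [0] * n
    for position, idx in enumerate(order):
        labels[idx] = position * 5 // n + 1
    return labels

def concordance_by_quintile(independent_scores, committee_scores):
    """Share of each independent-review quintile that stays in the same
    quintile once ranked on committee scores."""
    ind_q = quintile_of_rank(independent_scores)
    com_q = quintile_of_rank(committee_scores)
    totals = Counter(ind_q)
    same = Counter(q for q, c in zip(ind_q, com_q) if q == c)
    return {q: same[q] / totals[q] for q in sorted(totals)}

# Hypothetical scores for ten applications (not OOGP data).
independent = [4.8, 4.6, 4.1, 3.9, 3.7, 3.5, 3.2, 3.0, 2.1, 1.8]
committee = [4.7, 4.5, 3.5, 4.0, 3.9, 3.6, 3.0, 3.3, 2.0, 1.9]

shares = concordance_by_quintile(independent, committee)
for quintile, share in shares.items():
    print(f"Quintile {quintile}: {share:.0%} remain in the same quintile")
```

In this made-up example the top and bottom quintiles are fully concordant while the middle quintiles are not, which is the pattern the figure describes for the real data.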

Figure 2-5 provides further support to the hypothesis that it is easier to identify the most excellent applications. Among those applications ranked in the top 5% at committee stage, approximately 95% originated in the top 20% of applications at the independent review stage. Simply put, almost all of these applications would have been funded by the independent reviewers and not screened out. This level of agreement is consistent with the opinions of veteran peer reviewers interviewed in a recent article in Nature (Powell, 2010), who suggested that it was relatively easy to identify the strongest proposals.

Figure 2-5: Origins of top 5% of committee stage applications

Source: Electronic Information System OOGP data, 2005-2010 (N=21,266) provided by the CIHR Performance Measurement and Data Production Unit.
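The "origins" calculation behind Figure 2-5 can be illustrated with a short sketch. The function names, the pool of 40 applications and the arbitrary score units are hypothetical, and ranking across a single pool (rather than within each committee) is an assumption made only for illustration.

```python
def top_fraction(scores, fraction):
    """Indices of the best-scoring `fraction` of applications (higher is better)."""
    n_keep = max(1, round(len(scores) * fraction))
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    return set(order[:n_keep])

def share_from_independent_top(independent_scores, committee_scores,
                               committee_fraction=0.05,
                               independent_fraction=0.20):
    """Share of the committee's top applications that were already in the
    top group at the independent review stage."""
    top_committee = top_fraction(committee_scores, committee_fraction)
    top_independent = top_fraction(independent_scores, independent_fraction)
    return len(top_committee & top_independent) / len(top_committee)

# Hypothetical pool of 40 applications in arbitrary score units (not OOGP data).
independent = [float(40 - i) for i in range(40)]
committee = list(independent)
committee[10] = 100.0  # one application rises sharply after committee discussion

share = share_from_independent_top(independent, committee)
print(f"{share:.0%} of the committee top 5% came from the independent top 20%")
```

A value close to 100% on real data would indicate that very few of the committee's highest-ranked applications would have been screened out at the independent review stage.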

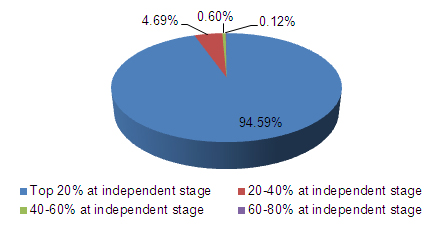

Predictive value of independent reviewer scores

The results described above were further corroborated by analysis of sensitivity and specificity. Sensitivity and specificity are statistical measures commonly used to assess the performance of diagnostic and screening tests. These concepts can be used in the present context to assess the predictive accuracy of the independent reviewers' scores (at-home model) in relation to the "true state" or gold standard, in this case, the full committee score.Footnote 13 Sensitivity and specificity tell us how accurate the "test" is in predicting "correct" results.

Using Table 2-5 as an illustration, sensitivity seeks to answer the following question: out of all proposals funded by the full committee (a+c), what proportion was accurately predicted by the at-home model? It is computed as a/(a+c).

Specificity, on the other hand, addresses the following question: out of all proposals not funded by the full committee (b+d), what proportion was accurately predicted by the at-home model? This is computed as d/(b+d).

| "Screening Test" (At-home model) | True State or "Gold Standard" (Full committee): Funded (+) | True State or "Gold Standard" (Full committee): Not funded (-) |
|---|---|---|
| Funded (+) | True positives a=3399 | False positives b=1129 |
| Not funded (-) | False negatives c=1052 | True negatives d=15,686 |

Sensitivity=0.764, 95% confidence interval [0.751 to 0.776]; Specificity=0.933 [0.929 to 0.937].Footnote 14

Positive Predictive Value (PPV) = 0.751 [0.738 to 0.763]Footnote 15; Negative Predictive Value (NPV)= 0.937 [0.933 to 0.941]. Footnote 16
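As a check on the arithmetic, the four indices can be recomputed from the counts in Table 2-5. The sketch below assumes the standard definitions PPV = a/(a+b) and NPV = d/(c+d) and a normal-approximation (Wald) 95% interval. The interval method is an assumption made for illustration, since the text here does not state which one was used, and the function name is hypothetical.

```python
import math

# Counts from Table 2-5 (at-home model versus full committee).
a, b, c, d = 3399, 1129, 1052, 15686

def proportion_with_ci(successes, total, z=1.96):
    """Proportion with a normal-approximation (Wald) interval.

    Assumption: the Wald method is used only for illustration.
    """
    p = successes / total
    half_width = z * math.sqrt(p * (1 - p) / total)
    return p, p - half_width, p + half_width

sensitivity = proportion_with_ci(a, a + c)  # funded by committee, predicted funded
specificity = proportion_with_ci(d, b + d)  # not funded by committee, predicted not funded
ppv = proportion_with_ci(a, a + b)          # predicted funded that the committee funded
npv = proportion_with_ci(d, c + d)          # predicted not funded that the committee did not fund

for name, (p, low, high) in [("Sensitivity", sensitivity),
                             ("Specificity", specificity),
                             ("PPV", ppv),
                             ("NPV", npv)]:
    print(f"{name}: {p:.3f} [{low:.3f} to {high:.3f}]")
```

Under these assumptions the point estimates and intervals agree with the reported values to three decimal places.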

The sensitivity and specificity scores computed from the data in Table 2-5 are presented below the table. These results confirm that 76% of the applications funded at the committee stage would have been funded based on the initial independent review (i.e. the at-home model), while 93% of applications that were not funded at the committee stage would not have been funded by the at-home model. The kappa statistic, an overall statistic of chance-corrected agreement, for these data is 0.69 (95% CI 0.68-0.70), which is somewhat less than the sensitivity and PPV. This reflects the fact that most applications to the OOGP do not get funded, and thus a "bogus test" in which a reviewer predicted no funding for all applications would be correct 75% of the time (assuming a 25% overall success rate) just by chance.Footnote 17
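The chance correction can be made concrete with a short sketch that recomputes Cohen's kappa from the Table 2-5 counts and then evaluates an all-negative "bogus test". The function name is hypothetical. Note that with the table's own counts the committee funded about 21% of applications, so the all-negative predictor is correct about 79% of the time here, compared with the 75% quoted above under an assumed 25% success rate.

```python
# Counts from Table 2-5 (at-home model versus full committee).
a, b, c, d = 3399, 1129, 1052, 15686
n = a + b + c + d

def cohen_kappa(a, b, c, d):
    """Cohen's kappa for a 2x2 table: agreement beyond what chance would give."""
    total = a + b + c + d
    observed = (a + d) / total
    expected = ((a + b) * (a + c) + (c + d) * (b + d)) / total**2
    return (observed - expected) / (1 - expected)

kappa = cohen_kappa(a, b, c, d)

# "Bogus test": predict "not funded" for every application. Every
# committee-funded application becomes a false negative and every
# unfunded application a true negative.
bogus_accuracy = (b + d) / n
bogus_kappa = cohen_kappa(0, 0, a + c, b + d)

print(f"kappa for the at-home model: {kappa:.2f}")
print(f"accuracy of the all-negative predictor: {bogus_accuracy:.2f}")
print(f"kappa of the all-negative predictor: {bogus_kappa:.2f}")
```

The all-negative predictor scores well on raw accuracy but has a kappa of zero, which is why kappa is lower than the unadjusted agreement figures.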

Ideally, a test would be both 100% sensitive and specific, but in reality there is always a trade-off between the two properties.Footnote 18 The best balance between the two indices depends on the consequences of missing out on excellent proposals versus wrongly funding unworthy proposals.
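The trade-off can be illustrated by moving the funding cut-off applied to at-home scores and recomputing both indices. The scores, committee decisions and cut-off values below are hypothetical and chosen only to show the direction of the effect.

```python
def sensitivity_specificity(predicted, actual):
    """Sensitivity and specificity of `predicted` against `actual` decisions."""
    tp = sum(p and a for p, a in zip(predicted, actual))
    fn = sum((not p) and a for p, a in zip(predicted, actual))
    tn = sum((not p) and (not a) for p, a in zip(predicted, actual))
    fp = sum(p and (not a) for p, a in zip(predicted, actual))
    return tp / (tp + fn), tn / (tn + fp)

# Hypothetical at-home scores (higher is better) and committee decisions.
at_home = [4.6, 4.4, 4.3, 4.1, 4.0, 3.8, 3.6, 3.4, 3.1, 2.8]
committee_funded = [True, True, False, True, False,
                    False, True, False, False, False]

results = []
for cutoff in (4.2, 3.9, 3.5):
    predicted = [score >= cutoff for score in at_home]
    sens, spec = sensitivity_specificity(predicted, committee_funded)
    results.append((sens, spec))
    print(f"cut-off {cutoff}: sensitivity={sens:.2f}, specificity={spec:.2f}")
```

Lowering the cut-off captures more of the proposals the committee funded (higher sensitivity) at the cost of ruling in more proposals the committee rejected (lower specificity).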

The current result of high specificity (0.93) with a sensitivity of 0.76 suggests that a proposal ruled "in" by the at-home model is highly unlikely to be of poor quality, although reliance on independent reviewers without face-to-face committee review could "miss" up to one-fourth of deserving proposals.

It is worth reiterating that the two independent reviewers' scores are not independent of the full committee's score, since the independent reviewers are likely to have read the application in great detail and thus affect the opinions of the other committee members. This undoubtedly biases all the concordance indices upwards.

The implications of this analysis for this evaluation and for future program design are that even if only two independent reviewers are used to screen proposals at an initial stage of peer review, they are likely to select excellent applications. It is likely that using a greater number of reviewers at a screening stage would provide greater confidence that excellence is being selected (i.e., increase the sensitivity of the independent reviewers' average ratings). It is therefore recommended that CIHR conduct further analyses on the impact of the number of reviewers on funding decisions if peer review re-designs are implemented.

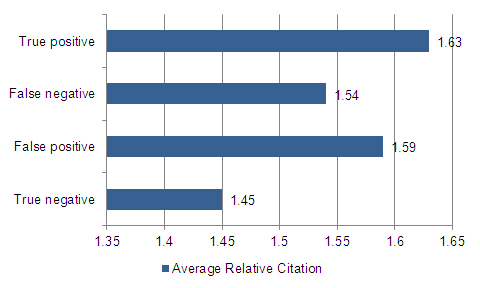

Bibliometric analysis of scientific impact of researchers

In this second line of enquiry, preliminary analysis was conducted to assess the scientific impact of researchers who have submitted OOGP applications in relation to outcomes of peer review.Footnote 19 The research question investigated is whether those researchers with applications selected by OOGP face-to-face peer review committees have greater subsequent scientific impact than those who would have hypothetically been selected if only the independent reviewer rankings had been used. Table 2-6 below describes four groupings of researchers, with face-to-face peer review used as the 'gold standard' for comparison.

We would expect those researchers categorized as true positives (selected for funding based on both independent reviewer and committee rankings) to have the highest subsequent scientific impact. If independent review rankings are indeed a potentially reliable means of selecting excellence, we would also expect the false positives (those selected at independent review only) to have higher impact than the true negatives (applications not funded by either the committee or independent reviewers).

| Group of applications/researchers | Independent reviewers – funded Y/N | Peer review committee – funded Y/N | Description |
|---|---|---|---|
| True negative (n=15,686) | N | N | Applications that would not have been funded by independent reviewer rankings and that were not funded by face-to-face peer review committee |
| False negative (n=1052) | N | Y | Applications that would not have been funded based only on independent reviewer rankings but which were funded by face-to-face peer review committee |
| False positive (n=1129) | Y | N | Applications that were not funded by face-to-face peer review committee but which would have been funded based only on independent reviewer rankings, i.e. if no subsequent face-to-face discussion had taken place |
| True positive (n=3399) | Y | Y | Applications that would have been funded by independent reviewers and which were funded by face-to-face peer review committee |
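The grouping logic in Table 2-6 can be expressed as a small classification rule. The function name and the five example decisions are hypothetical; the committee decision is treated as the reference, as in the table.

```python
from collections import Counter

def classify(independent_funded, committee_funded):
    """Label an application, using the committee decision as the reference."""
    if independent_funded and committee_funded:
        return "true positive"
    if independent_funded:
        return "false positive"   # independent reviewers only
    if committee_funded:
        return "false negative"   # committee only
    return "true negative"

# Hypothetical (independent, committee) funding decisions for five applications.
decisions = [(True, True), (True, False), (False, True),
             (False, False), (False, False)]

groups = Counter(classify(ind, com) for ind, com in decisions)
for group, count in groups.items():
    print(f"{group}: {count}")
```

Applied to the full data set, a tally of this kind would yield the four group sizes shown in the table.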

As shown in Figure 2-7, on average the true positives (selected both by independent reviewers and at committee) have a significantly higher Average of Relative Citations (ARC) than the other three groups (p<0.05). The true negatives (not selected at either stage) have ARC scores significantly below those of the other three groups (p<0.05). This first finding again supports the view that the OOGP's peer review process selects excellence.

It can, however, also be observed that the false positives (applications selected for funding by the independent reviewers but not by committee) and false negatives (applications selected by committee but not by independent reviewers) do not differ significantly from each other.

Based on this preliminary analysis, there is no evidence to suggest that the independent review scores provided by two reviewers are a less reliable means of selection than committee discussion scores when assessing projects likely to result in future scientific excellence.