Fall 2013 Knowledge Synthesis Pilot: Final Report

January 2015

Table of Contents

- Executive Summary

- Introduction

- Competition Overview

- Methods

- Summary of Results

- Appendix 1: Survey Demographics

- Appendix 2: Detailed Survey Results

Executive Summary

CIHR has been developing a new Open Suite of Programs and Peer Review Processes that include a number of novel design elements. To ensure that the new elements are appropriate and well implemented, CIHR is piloting them in current open competitions such as the Knowledge Synthesis program. Participants in the Fall 2013 Knowledge Synthesis pilot (research administrators, applicants, and reviewers) were surveyed after their participation to capture their views on the new processes and design elements. The responses were compiled and are summarized briefly below.

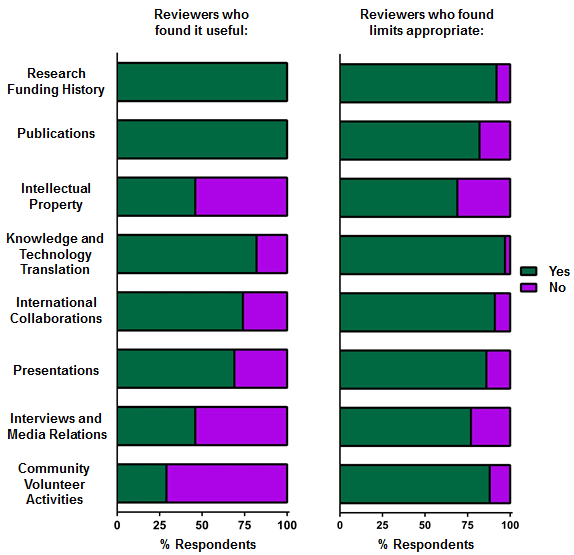

Effectiveness of the Structured Application Process

Applicants and research administrators found the structured application form fairly easy to use; however, those who were resubmitting previous applications found it difficult to fit their old application into the new format. Over 50% of applicants found the character limits in the structured application too restrictive; however, the majority of reviewers found the character limits sufficient, noting that some applicants were more adept than others at including relevant information in their applications. Reviewers found some sections of the CIHR Academic CV used in this competition to be irrelevant, namely Interviews/Media Relations and Community Volunteer Activities. This feedback will be taken into consideration in the development of the Project Scheme Biosketch, which will replace the CIHR Academic CV for Project Scheme pilots/competitions moving forward.

Impact on Reviewer Workload

The majority of Stage 1 reviewers found the workload to be acceptable, and the average amount of time it took reviewers to both read an application and write a review was less in this pilot competition compared to the previous Knowledge Synthesis competition (Spring 2013).

The majority of Stage 2 reviewers found the review workload manageable, and found the review less time-consuming and easier than in previous competitions. Stage 2 reviewers spent one day (8 hours) at a face-to-face meeting, compared with the 3-day committee meetings of previous competitions.

Clarity of the Adjudication Criteria and Scale

Both applicants and Stage 1 reviewers had difficulty distinguishing the "Quality of the Idea" from the "Importance of the Idea". Slightly more than 50% of applicants agreed that the adjudication criteria should be equally weighted; among those who disagreed, the trend was to put more weight on the Approach section at the expense of the Quality and Importance of the Idea. Stage 1 reviewers found the descriptors for the adjudication scale clear and useful, and found the range of the scale sufficient to describe meaningful differences between applications. The majority of Stage 1 reviewers indicated that they used the full range of the scale (A-E) when assessing their applications; however, fewer than 25% of Stage 2 reviewers agreed, and a quick analysis of the distribution of A-E ratings indicates that over 70% of the ratings given out were A or B. This made it difficult for Stage 2 reviewers to differentiate between similarly ranked applications. Many applicants and Stage 1 reviewers found that the adjudication criteria allowed applicants to effectively convey their integrated knowledge translation (IKT) approach, and approximately two thirds of applicants found that they could convey their IKT approach as well as in previous competitions.

Effectiveness of the Structured Review Process

Stage 1 – The majority of reviewers were satisfied with the reviewer worksheet, although some would have liked more space to provide feedback, especially in the Approach section. Slightly more than 50% of reviewers read other reviewers' preliminary reviews of the same application; however, doing so did not often influence their assessment of the application. Only a quarter of reviewers participated in the online discussion, mainly because their own reviews were not complete in time. Approximately 50% of Stage 2 reviewers indicated that Stage 1 reviewers did not provide sufficient feedback to justify the ratings given, which made it difficult to assess the applications assigned to them.

Stage 2 – All reviewers consulted both the applications and the Stage 1 review material, and indicated that they felt required to do both. Some reviewers felt uncomfortable being required to place a certain number of applications into a "yes – to fund" bin when they did not believe those applications should be funded (or vice versa), while others thought it an appropriate task. The majority of reviewers read other reviewers' comments/binning decisions; however, these did not often influence their assessment of the application. The majority of reviewers indicated that the face-to-face committee meeting is required, and that discussion at the meeting should focus on applications that are close to the funding cut-off line. Reviewers found the instructions at the meeting useful and the voting tool easy to use.

Experience with ResearchNet

Both Stage 1 and Stage 2 reviewers found ResearchNet easy to use, and were able to effectively use the new elements that were built in ResearchNet to support the new design elements. Some additional instructions for Stage 1 reviewers within the peer review manual (e.g. step-by-step instructions) would be helpful for future competitions.

Overall Satisfaction with the Project Scheme Design

Overall, both Stage 1 and Stage 2 reviewers were satisfied with the new review processes. Applicant satisfaction with many aspects generally depended on their success in the competition: funded applicants were the most satisfied, followed by those who were not funded but were successful at Stage 1, while applicants who were not successful at Stage 1 were the least satisfied.

Limitations and Future Directions

There are certain limitations to consider regarding this study, most notably the sample size. While the survey response rates were high, the small overall numbers of applicants (n=75), research administrators (n=45), and reviewers (Stage 1: n=49; Stage 2: n=18) limit the reliability and validity of the analysis. A much larger sample will be collected over time as further studies are completed.

Overall, the results of this study indicate that many of the design elements that have been developed for the Project and Foundation schemes were well received by research administrators, applicants, and reviewers of the Fall 2013 Knowledge Synthesis competition. A number of areas for improvement were identified by study participants, and many of the suggested improvements have already been implemented for subsequent pilot studies.

Introduction

The success of the transition to the new Open Suite of Programs and peer review processes rests largely on CIHR's ability to efficiently and effectively pilot and test the functionality of peer review design elements in advance of their full implementation. CIHR will be piloting the new design elements (described in Table 1 in the Methods section below) that have been developed in order to ensure that they function appropriately, that they allow the important aspects of current applications to be effectively captured (e.g., integrated knowledge translation), that the new peer review process is robust and fair, and that the new application/review processes reduce both applicant and reviewer burden. Pilot participants (research administrators, applicants, and reviewers) will be surveyed following each step of the application and review processes of each pilot.

The results of the surveys completed for the Fall 2013 Knowledge Synthesis competition are described in this report. As each pilot study is completed, CIHR intends to make the findings available to the research community in order to contribute to the body of literature on peer review and program design.

Piloting peer review design elements will allow CIHR to adjust and refine processes and systems in order to best support applicants and reviewers. In order to identify areas for improvement, CIHR is collecting feedback from applicants, institutions, and peer reviewers through surveys.

Note: When conducting pilots within existing competitions, CIHR will ensure that the quality of the peer review process is not compromised and that every application receives a fair, transparent, and high-quality review.

Note: CIHR is also using data collected from this pilot to analyze reviewer behaviour, including how often reviewers modify their reviews after viewing other reviewers' reviews or participating in the asynchronous online discussion, and how often reviewers adjust their rank list of applications. These analyses are being conducted separately, and the results will be made available at a later date.

Competition Overview

The Knowledge Synthesis program is an open program with an integrated knowledge translation (IKT) component. The program is designed to increase the uptake/application of synthesized knowledge in decision-making by: 1) supporting partnerships between researchers and knowledge users to produce scoping reviews and syntheses that respond to the information needs of knowledge users in all areas of health; and 2) extending the benefits of knowledge synthesis to new kinds of questions relevant to knowledge users and to areas of research that have not traditionally been synthesized.

The Knowledge Synthesis program was chosen for this pilot as it is an operating grant program with many similar components to the Open Operating Grants Program (OOGP). The program also allowed for a more controlled environment as it has a more manageable number of applications and reviewers than the OOGP. Finally, the program is open to applications in any of CIHR's four themes: biomedical, clinical, health services and policy research, as well as population and public health. For this competition, 79 applications were reviewed by 49 Stage 1 reviewers and the top 39 applications were moved forward to Stage 2 where the reviews were reconciled by 18 Stage 2 reviewers.

Methods

Pilot Information

Within this first pilot, a number of program design elements remained unchanged, including:

- Program objectives and eligibility criteria

- Application-reviewer assignment process

The design elements tested in this pilot are described in Table 1.

| Design Element | Objective | Pilot Description |
|---|---|---|
| Structured Application & Review (Including New Adjudication Criteria) | | |
| Increased # of Reviewers per Application (5 reviewers/application in both Stage 1 and Stage 2) | | |
| Multi-Stage Review | | |
| Remote Review & Online Discussion | | |
| New Rating Scale & Ranking System | | |
| Face-to-Face Meeting | | |

Note: The quality of individual reviews was not assessed during this pilot.

Survey Process

The objective of the surveys was to assess participants' perceptions of, and experiences with, the peer review process with respect to the design elements tested. Surveys were developed using an online survey software called Fluid Survey. Four different surveys were developed, one for each stage of the pilot process, which for the purposes of this analysis was broken down into the following four stages:

- Application Submission

  - Applicants were surveyed

  - Research Administrators were surveyed

- Stage 1 Review

  - Stage 1 reviewers were surveyed

- Stage 2 Review

  - Stage 2 reviewers were surveyed

- Receipt of Competition Results

  - Applicants were surveyed

Applicants and Research Administrators were surveyed following the submission of applications to CIHR. The focus of this survey was on applicants' perceptions of, and experiences with, the new Structured Application process, the new adjudication criteria (including whether integrated knowledge translation elements can be effectively captured using the adjudication criteria), and the structured application form (including character limits).

Stage 1 reviewers were surveyed following the submission of their reviews to CIHR. The focus of this survey was on Stage 1 reviewers' perceptions of, and experiences with, the Stage 1 Structured Review process, reviewer workload, the Structured Application form (including the value of the allowable attachments), the new adjudication criteria (including whether integrated knowledge translation elements can be effectively captured using the adjudication criteria), and the various elements of the Stage 1 review process (adjudication scale, adjudication worksheet, rating and ranking process, and the online discussion).

Stage 2 reviewers were surveyed following the face-to-face committee meeting. The focus of this survey was on Stage 2 reviewers' perceptions of, and experiences with, the Stage 2 review process, reviewer workload, the quality of Stage 1 reviews, pre-meeting activities (including reading the pre-meeting reviewer comments and the binning process), and various aspects of the face-to-face meeting (including validating the list of applications, the voting process, and the use of the funding cut-off line).

Applicants were surveyed again following the receipt of the competition results. The focus of this survey was on applicants' perspectives on, and experiences with, the Structured Review process (Stage 1 and Stage 2), the quality of the reviews received, and overall satisfaction with the review process.

The data presented in this report include responses from both completed and incomplete surveys.

Limitations

It is important to note the limitations to this pilot.

Generalizability: The Knowledge Synthesis program is an operating grant program; however, there are some notable program design differences when compared with other open grant programs (e.g., the OOGP). Notably, the program requires the involvement of a knowledge user and an IKT approach. Additionally, the majority of applications received by this program are categorized as Pillar 3 or 4. These considerations may limit the generalizability of the results.

Non-Blinded Pilot: Survey answers may be influenced by the fact that participants knew that they were involved in an experiment and that some respondents were applying for funding from CIHR.

Sample Size: While the survey response rates were high and exceeded targets, the small overall numbers of applicants, research administrators, and reviewers limit the statistical analysis that is possible. A much larger sample will be collected over time; until then, the results of this report should be interpreted with the small sample size in mind.

Nevertheless, the overall results of this survey are positive within the context of this competition. A number of areas for improvement were identified by survey respondents, and many of them have already been implemented within the subsequent pilots.

Summary of Results

The results of this study indicate that, overall, the majority of both applicants and reviewers feel that the new application and peer review processes tested in this pilot were as good as or equivalent to the current processes. The results also point to a number of modifications and updates to the design elements that could enhance the application and review experience for participants in future pilots.

The results of this pilot will be summarized in the next sections, and will focus on:

- The effectiveness of the structured application process;

- The impact on reviewer workload;

- The clarity of the adjudication criteria and scale;

- The effectiveness of the structured review process;

- The experience with ResearchNet; and

- The overall satisfaction with the Project Scheme design.

A description of the pilot participants (survey participant demographics) can be found in Appendix 1. The detailed survey results can be found in Appendix 2.

1. Effectiveness of the Structured Application Process

Within this section, applicant and research administrator experiences with the new structured application process and the Project Scheme design elements will be discussed.

Overall Impression of the New Structured Application Process

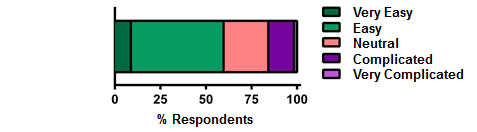

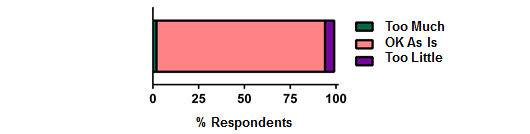

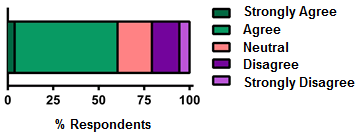

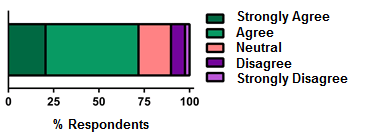

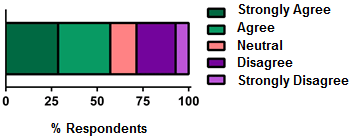

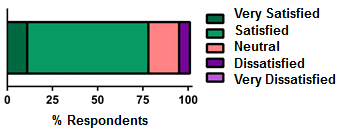

Small majorities of both applicants and research administrators found the structured application format intuitive and easy to use, and were relatively satisfied with the structured application process (Figure 1).

Applicants and research administrators also commented on the structured application form, and many comments indicated that it was easy to use. Common concerns raised by applicants were that the structured application limited the flow of ideas and broke up the narrative they feel is essential to a grant proposal, and that ResearchNet imposed formatting limitations (Table 1).

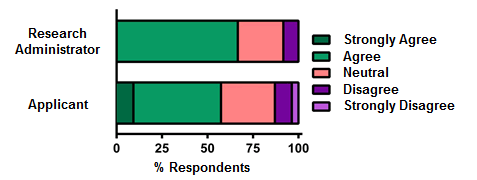

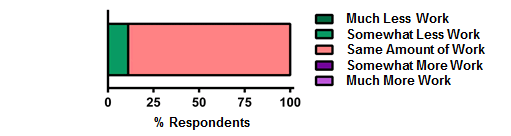

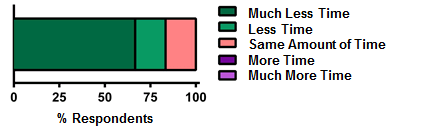

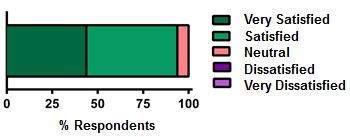

Respondents who had previously submitted an application to a Knowledge Synthesis competition were asked to compare the structured application process with their previous experience (Figure 2). The majority found the structured application to be about the same amount of work, but they were split on its ease of use: approximately one third of applicants described the structured application form as easier to use than the previous application, one third described the experience as the same, and one third found it more difficult. Overall, applicants found the submission process just as good as or better than in previous competitions, while the majority of research administrators noticed no change in the submission process compared with previous competitions (Figure 2).

For applicants who were re-submitting an application from a previous Knowledge Synthesis competition, a major concern was re-writing their application to fit it within the confines of the structured application form. This was especially difficult for the Approach section as there was considerably less space available to include the same amount of methodological information as in previous applications. There was also some concern regarding the Budget section as some applicants felt that they did not have the space required to adequately justify their expenses (Table 2).

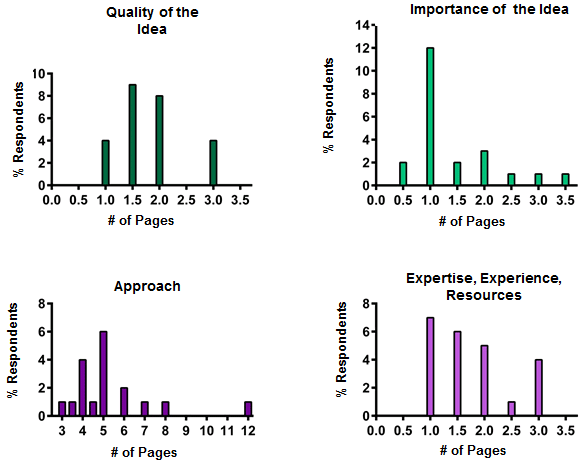

Character Limits in the Structured Application Form

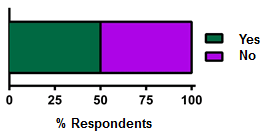

Character limits were set for each section of the structured application form, and applicants were asked whether these limits were adequate to respond to each adjudication criterion. More than 50% of applicants did not believe that they had sufficient space to include the relevant information required for their applications (Figure 3A). When asked what the ideal page limit should be, applicants' responses varied widely; however, summing the average response for each section gives roughly 11 pages, the same length as the previous application (Figure 3B).

Initially, there was confusion regarding the character limits, as many applicants were not aware that the limits included spaces. This caused a significant amount of frustration, as applicants were forced to cut additional characters when inserting their text into the structured application form in ResearchNet. Overall, applicants indicated that they would be satisfied with the character limits imposed in the structured application if reviewers were satisfied with the decreased amount of detail provided in the application (Table 3).
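As an illustration only, the Python sketch below shows how a draft that seems to fit can exceed a limit once spaces are counted. It assumes every character, including spaces and line breaks, counts toward the limit (the report confirms only that spaces were included); the 3,500-character limit, the function names, and the sample draft are hypothetical and do not reflect ResearchNet's actual values or behaviour.

```python
# Hypothetical section limit; the report does not list the actual limits.
LIMIT = 3500


def counted_length(text: str) -> int:
    """Length counted against the limit, assuming every character counts (spaces included)."""
    return len(text)


def length_without_whitespace(text: str) -> int:
    """Length an applicant would see if they (wrongly) assumed whitespace was not counted."""
    return sum(1 for ch in text if not ch.isspace())


def overflow(text: str, limit: int = LIMIT) -> int:
    """Characters that would have to be cut for the text to fit (0 if it already fits)."""
    return max(0, counted_length(text) - limit)


# Hypothetical draft: 800 four-letter words, each followed by a space.
draft = "word " * 800
print(length_without_whitespace(draft))  # 3200: appears to be under the limit
print(counted_length(draft))             # 4000: actual counted length
print(overflow(draft))                   # 500: characters that must still be cut
```

In this toy case a draft that seems to be 300 characters under the limit is in fact 500 characters over it, which mirrors the late cuts applicants described.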

A large majority of Stage 1 reviewers agreed that the character limits in the structured application form allowed applicants to include all the information relevant to the grant (Figure 4). It should be noted, however, that some reviewer comments to applicants indicated that insufficient detail was included in the application. This may indicate either that certain applicants did not use the character limits as efficiently as they could have, or that the limits themselves did not allow the amount of detail reviewers expected. This question will be assessed in future pilots.

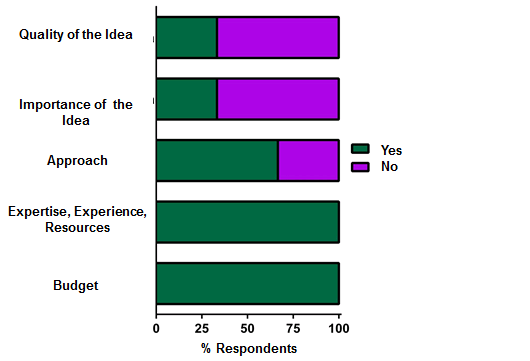

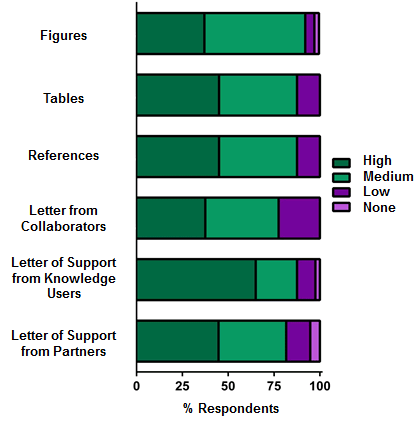

Value of Allowable Attachments to Stage 1 Reviewers

As part of the new structured application format, applicants were only permitted to attach certain items to their applications (figures, tables, references, CVs, Letters of Collaboration, and Letters of Support from Knowledge Users or Partners). All allowable attachments were deemed to have high or medium value to reviewers (Figure 5A). Some reviewers did not believe that CIHR should limit the types of attachments, while others indicated that certain methodological attachments should be allowed (e.g., search strategies, pilot searches, review protocols), as reviewing a Knowledge Synthesis application without this information is difficult. Finally, some reviewers suggested that limiting letters of support to only the most relevant/compelling would reduce reviewer burden (Table 4).

Value of CIHR Academic and Knowledge-User CVs

Stage 1 reviewers found certain components of the CV useful when conducting their reviews, namely the research funding history, publication, knowledge and technology translation, international collaborations, and presentation sections. The majority of reviewers found the limits in the CV to be appropriate (Figure 5B). Some reviewers highlighted the value of having all of the CVs in the same format, but noted that the presentation required improvement.

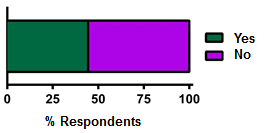

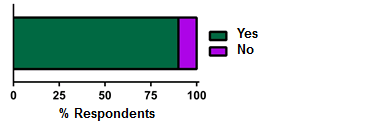

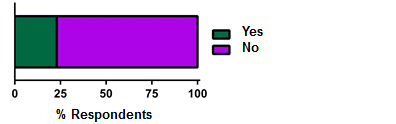

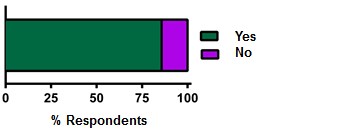

Problems Encountered in the Completion of the Structured Application

Applicants were given the opportunity to inform CIHR of any non-technical problems they experienced throughout the structured application process. Only one quarter of applicants indicated that they experienced problems while completing their applications (Figure 6).

Most of the problems encountered had to do with the character limits being too restrictive, especially when applicants were not aware that the limits included spaces (Table 5). Additionally, applicants felt that they should be able to embed figures in the structured application form in ResearchNet. There were also some comments regarding formatting issues in the structured application form and the resulting PDF (e.g. no use of colour, random spaces removed, underlining too dark, etc.).

2. Impact on Reviewer Workload

Within this section, the workload experiences of both Stage 1 and Stage 2 reviewers will be discussed.

Stage 1 Reviewer Workload

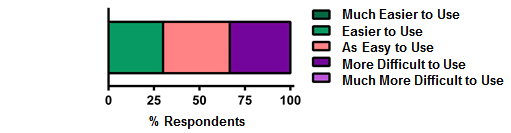

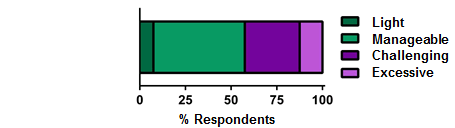

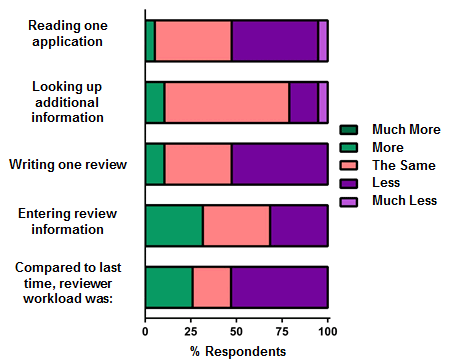

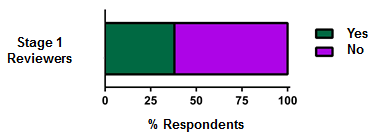

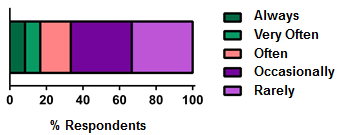

Overall, the majority of Stage 1 reviewers indicated that the reviewer workload was manageable or challenging (Figure 7A). Reviewers were asked to estimate the time spent on various review activities, and these estimates were compared with values collected from reviewers following the Spring 2013 Knowledge Synthesis competition (Figure 7B and Table 6). Compared with reviewers of the Spring 2013 competition, Stage 1 reviewers took less time both to read one application (1.53+/-0.14 hours vs. 2.21+/-0.25 hours for Spring 2013 reviewers) and to write the review for one application (0.95+/-0.12 hours vs. 1.66+/-0.23 hours for Spring 2013 reviewers). This reduction in the time needed for certain review activities is promising, and it will be monitored throughout the Project Scheme pilots. No statistical significance testing was performed on these completion times, and reviewer workload was not compared against the number of applications assigned, as the usefulness and comparability of these preliminary data are still limited.
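To make the size of these differences concrete, the reported means can be worked through in a short Python sketch. It is descriptive only: it ignores the reported +/- values and performs no significance test, consistent with the note above. Adding the read and write means into a rough per-application total is a simplifying assumption, not a figure reported by the survey.

```python
# Mean hours per application as reported above (Figure 7B and Table 6).
# The reported +/- values are ignored and no significance test is performed.
fall_2013 = {"read": 1.53, "write": 0.95}    # Stage 1 reviewers, Fall 2013 pilot
spring_2013 = {"read": 2.21, "write": 1.66}  # reviewers, Spring 2013 competition


def relative_reduction(new: float, old: float) -> float:
    """Fraction by which the new mean is lower than the old mean."""
    return (old - new) / old


for activity in ("read", "write"):
    cut = relative_reduction(fall_2013[activity], spring_2013[activity])
    print(f"{activity}: {spring_2013[activity]:.2f} h -> {fall_2013[activity]:.2f} h ({cut:.0%} less)")

# Simplifying assumption: adding the two means gives a rough per-application total.
fall_total = sum(fall_2013.values())
spring_total = sum(spring_2013.values())
print(f"read + write: {spring_total:.2f} h -> {fall_total:.2f} h")
```

Under these assumptions, reading time fell by roughly 31% and review-writing time by roughly 43%, or about 1.4 hours less per application for the two activities combined.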

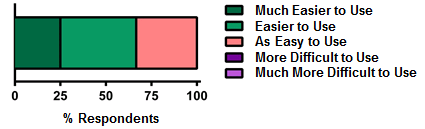

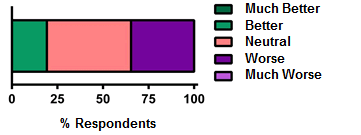

Of those reviewers who had previously reviewed for a Knowledge Synthesis competition, just over 50% indicated that the workload was less, and only 25% of reviewers felt that it was more work (Figure 7C). Compared to a previous review experience, reviewers found that entering review information in ResearchNet and looking up additional information related to the applications online were about the same amount of work. Additionally, this cohort of reviewers found that, on average, it was less work to read applications and write reviews.

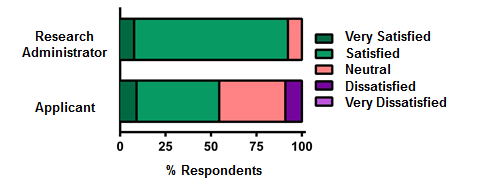

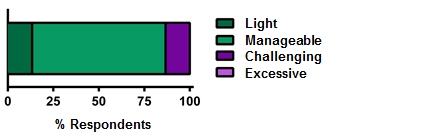

Stage 2 Reviewer Workload

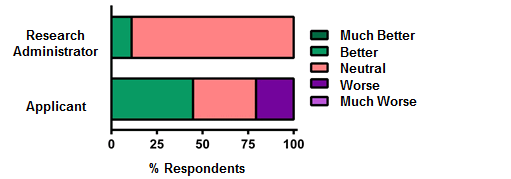

The majority of Stage 2 reviewers indicated that the workload was light or manageable (Figure 8A). Of those reviewers who had previously reviewed for a Knowledge Synthesis competition, over 80% indicated that the workload was lower than in a previous competition (Figure 8B). Compared with previous review experiences, reviewers found the Stage 2 review process either easier than or just as easy as in previous competitions (Figure 8C). Reviewers also found the applications more concise and more easily digestible than in previous Knowledge Synthesis competitions (Tables 7 and 8).

Considering only the face-to-face (committee) meeting, Stage 2 reviewers spent considerably less time discussing applications than reviewers who participated in the Spring 2013 Knowledge Synthesis competition (Figure 8D). This may be due to the new Stage 2 review process, which focuses discussion at the meeting on only those applications that are close to the funding cut-off.

Stage 2 reviewers provided numerous suggestions regarding the Stage 2 review process, some of which, if implemented, may further decrease reviewer burden. Stage 2 reviewers were hesitant to perform their reviews without referring to the application, especially because the background of each Stage 1 reviewer was not known; they indicated that they might feel less compelled to consult the application material if they knew who the Stage 1 reviewers were. Knowing the name and background of the Stage 1 reviewers may also alleviate the second reason Stage 2 reviewers felt compelled to consult the application material: the quality of the Stage 1 reviews. Many Stage 2 reviewers indicated that the comments received from Stage 1 reviewers were often insufficient to justify the rating (A-E) given, or were otherwise unhelpful. In these instances, the responsible course of action for Stage 2 reviewers was to re-review the application material, which required additional time. The quality of the Stage 1 written reviews may improve if Stage 1 reviewers are told that a main purpose of describing the strengths and weaknesses for each adjudication criterion is to justify the chosen ratings, not only for applicants but also for Stage 2 reviewers, who integrate the five reviews of each application and make funding decisions based on them (Tables 7, 8, and 19).

3. Clarity of the Adjudication Criteria and Adjudication Scale

Within this section, the experiences of applicants and of Stage 1 and Stage 2 reviewers with the adjudication criteria and scale will be discussed.

Adjudication Criteria

One concern regarding the new adjudication criteria was that the distinction between "Quality of Idea" and "Importance of Idea" would not be intuitively clear to applicants. In the survey, both applicants and Stage 1 reviewers were asked whether the distinction between the two criteria was clear to them when preparing or reviewing applications. Approximately half of the applicants surveyed indicated that the distinction was not clear (Figure 9). Stage 1 reviewers also had difficulty distinguishing Quality from Importance of the Idea, and noted that applicants evidently had not understood the distinction either, given that the information they provided in the two sections was similar and repetitive. The main suggestion from both applicants and reviewers was to combine the two criteria. Some reviewers indicated that the Interpretation Guidelines were helpful in clarifying the difference between the Quality and Importance of the Idea; however, others indicated that, while helpful, the document used language that was not intuitive, with considerable overlap in the descriptions of the two criteria (Table 9).

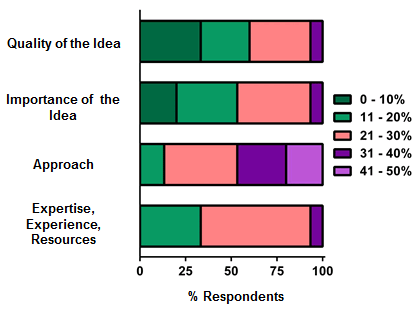

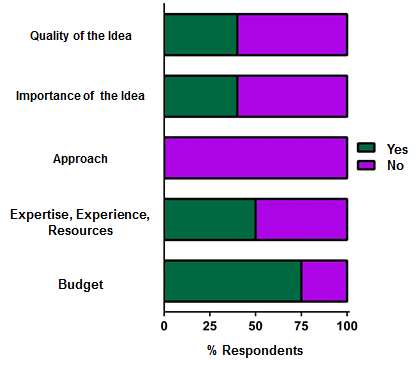

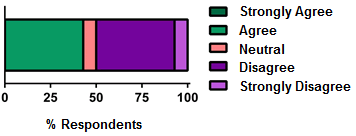

Currently, each adjudication criterion is weighted equally (25% per criterion). Stage 1 reviewers were divided on whether the adjudication criteria should be weighted equally. When asked what the appropriate weighting should be, the majority of reviewers who disagreed with the current weighting indicated that more weight should be given to the Approach section at the expense of the Quality of Idea and Importance of Idea sections. Their main argument was that if a project is not methodologically sound, the idea, though potentially important and interesting, cannot be compelling, as the project will not succeed in answering the question (Figure 10 and Table 10).
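To illustrate how reweighting could change outcomes, the Python sketch below computes a weighted score under the current equal weighting and under an approach-heavy alternative. The numeric mapping of A-E to points, the alternative weights, the label "Criterion 4" for the fourth criterion (not named in this section), and both applications are hypothetical; CIHR's actual method for combining ratings is not described here.

```python
# Hypothetical numeric mapping for the A-E scale; the report does not define one.
POINTS = {"A": 4, "B": 3, "C": 2, "D": 1, "E": 0}
CRITERIA = ("Quality of the Idea", "Importance of the Idea", "Approach", "Criterion 4")

# Current scheme: each criterion weighted equally (25% per criterion).
EQUAL = dict.fromkeys(CRITERIA, 0.25)
# Hypothetical alternative shifting weight to the Approach, as some reviewers suggested.
APPROACH_HEAVY = {"Quality of the Idea": 0.15, "Importance of the Idea": 0.15,
                  "Approach": 0.45, "Criterion 4": 0.25}


def weighted_score(ratings: dict[str, str], weights: dict[str, float]) -> float:
    """Weighted mean of the numeric ratings; the weights must sum to 1."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(weights[c] * POINTS[ratings[c]] for c in CRITERIA)


# Two hypothetical applications (not pilot data).
strong_idea_weak_method = {"Quality of the Idea": "A", "Importance of the Idea": "A",
                           "Approach": "D", "Criterion 4": "B"}
modest_idea_strong_method = {"Quality of the Idea": "C", "Importance of the Idea": "C",
                             "Approach": "A", "Criterion 4": "B"}

for name, weights in (("equal", EQUAL), ("approach-heavy", APPROACH_HEAVY)):
    x = weighted_score(strong_idea_weak_method, weights)
    y = weighted_score(modest_idea_strong_method, weights)
    print(f"{name}: {x:.2f} vs {y:.2f}")
```

With these made-up ratings the two applications trade places once the Approach carries more weight (3.00 vs 2.75 under equal weights, 2.40 vs 3.15 under the alternative), the kind of effect reviewers favouring reweighting were arguing for.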

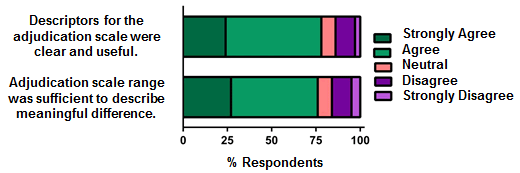

Adjudication Scale

The majority of Stage 1 reviewers indicated that the descriptors for the adjudication scale (A-E) were clear and useful. Additionally, the majority of Stage 1 reviewers indicated that the adjudication scale range was sufficient to describe meaningful differences between applications (Figure 11).

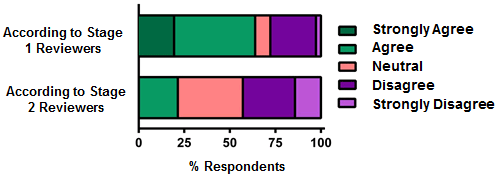

Stage 1 reviewers were asked whether they had used the full range of the adjudication scale (A-E), and the majority indicated that they had. Interestingly, when Stage 2 reviewers were asked whether Stage 1 reviewers had used the full range of the scale, fewer than 25% agreed (Figure 12A). It is important to note that Stage 2 reviewers were only assigned the top 39 of the 79 applications, which likely contributed to this response. To determine empirically whether the full range of the scale was used, the number of ratings at each level (A-E) was counted and the percentage of each rating was calculated. The majority of ratings were at the top of the scale: 73.45% of all ratings given out were either A ratings (an average of 36.01% across all adjudication criteria) or B ratings (an average of 37.44% across all adjudication criteria) (Figure 12B).
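The counting procedure described above can be sketched in Python as follows, assuming (as the phrase "average ... across all adjudication criteria" suggests) that the share of each letter is computed per criterion and then averaged. The ratings shown are hypothetical toy data, not the pilot data, and the label "Criterion 4" stands in for the fourth criterion, which is not named in this section.

```python
from collections import Counter

SCALE = "ABCDE"


def rating_shares(ratings: list[str]) -> dict[str, float]:
    """Percentage of ratings at each point of the A-E scale for one criterion."""
    counts = Counter(ratings)
    return {letter: 100 * counts[letter] / len(ratings) for letter in SCALE}


def mean_shares(by_criterion: dict[str, list[str]]) -> dict[str, float]:
    """Average each letter's percentage across the adjudication criteria."""
    per_criterion = [rating_shares(r) for r in by_criterion.values()]
    return {letter: sum(s[letter] for s in per_criterion) / len(per_criterion)
            for letter in SCALE}


# Hypothetical toy ratings for illustration only; NOT the pilot data.
toy = {
    "Quality of the Idea":    ["A", "A", "B", "B", "C"],
    "Importance of the Idea": ["A", "B", "B", "C", "D"],
    "Approach":               ["A", "B", "C", "C", "E"],
    "Criterion 4":            ["A", "A", "A", "B", "B"],
}

shares = mean_shares(toy)
top_two = shares["A"] + shares["B"]
print(shares)   # {'A': 35.0, 'B': 35.0, 'C': 20.0, 'D': 5.0, 'E': 5.0}
print(top_two)  # 70.0
```

Summing the averaged A and B shares in this way is how the reported figures combine: 36.01% + 37.44% = 73.45%.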

It is possible that the letters A-E are too reminiscent of scholastic letter grades, making Stage 1 reviewers reluctant to give many ratings below a B. Indeed, some Stage 1 reviewers commented that they had difficulty using the bottom half of the scale, either because the applications did not meet the criteria for C, D, or E ratings, or because the scale did not allow finer differences between applications to be taken into account (i.e., the scale has five ratings, which reviewers see as 20% steps, too large to capture finer differences between applications). On the other hand, some reviewers believed that the scale could be shorter (ABC or ABCD). Others indicated that it was difficult to distinguish between the letters because they did not reference the adjudication requirements; in this case, reviewers might benefit from examples of the circumstances under which each letter rating should be given (Table 11).

One of the purposes of assigning a rating for each adjudication criterion is to inform the ranking process. For this reason, it is important that Stage 1 reviewers be able to effectively differentiate between applications of differing quality. Additional feedback from Stage 1 and Stage 2 reviewers indicated that there might be some benefit to adding more granularity at the top of the scale (e.g. within the A and B ratings). It was also suggested that the letters themselves be changed to remove any association with letter grades. CIHR will be considering these suggestions for future competitions (Table 11).

Integrated Knowledge Translation

An important component of this pilot was to ensure that the new structured application process and adjudication criteria could successfully capture the essential components of an integrated knowledge translation approach.

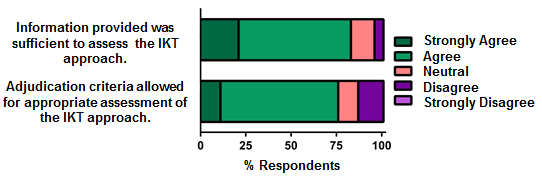

A small majority of applicants indicated that, overall, the adjudication criteria did allow them to sufficiently convey their integrated knowledge translation approach within the structured application (Figure 14A). For applicants who had previously submitted an application to a Knowledge Synthesis competition, approximately one third of applicants indicated that the adjudication criteria and structured application made it more difficult to include the integrated knowledge translation approach; however, two thirds indicated that it was about the same or easier to include the approach in their structured applications (Figure 14B).

There were general concerns from applicants regarding the lack of clarity surrounding where to include knowledge translation (KT) information, and that there was insufficient space in the structured application to include the KT approach in any of the sections. Many applicants suggested that there should be a separate section in the structured application for the integrated KT approach. Also important to note is that some applicants felt that KT was becoming less important to CIHR because the KT approach was buried within the structured application form (Table 12).

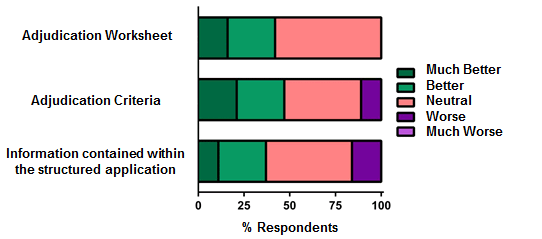

The majority of reviewers agreed that the information provided by applicants was sufficient to assess the integrated KT approach, and that the adjudication criteria allowed reviewers to appropriately assess it (Figure 15A). The majority of reviewers who had previously reviewed for a Knowledge Synthesis competition indicated that the adjudication worksheet, the adjudication criteria, and the information provided by applicants in the structured application made it either easier than, or about as easy as, their previous experience to assess and provide feedback on applicants' integrated KT approach (Figure 15B). Some reviewers would have liked a separate section for KT, as there were multiple sections in the application and adjudication criteria where this information could have been placed, which made it difficult to review (Table 13).

4. Effectiveness of the Structured Review Process

This section discusses the experiences of both Stage 1 and Stage 2 reviewers with the new structured review process and the Project Scheme design elements.

Stage 1 Review Process

Adjudication Worksheet

Stage 1 reviewers were required to give a rating for each section of the application, and to provide both strengths and weaknesses for each section to justify their ratings using the adjudication worksheet in ResearchNet. Overall, Stage 1 reviewers found the adjudication worksheet easy to work with (Figure 16A). CIHR imposed a character limit in the adjudication worksheet (1750 characters for strengths and 1750 characters for weaknesses for each section of the worksheet). The majority of reviewers indicated that the character limit was sufficient to provide appropriate feedback to applicants (Figure 16B); however, according to those reviewers who did not believe that there was sufficient space to provide comments, this was especially true of the Approach section, which, in their opinion, should allow up to three pages for reviewer comments (Figure 16C and Table 14). In fact, some reviewers resorted to continuing their comments regarding the Approach section in different sections that had additional characters left over (e.g. the budget section). Some reviewers indicated that they found the requirement to provide both strengths and weaknesses for each criterion to be inconvenient and repetitive, and would have preferred providing a justification for each rating, and then providing an overall assessment of strengths and weaknesses on the whole application. Additionally, some reviewers disagreed that there should be a character limit for reviewer comments. Others met in the middle and suggested that there should be an overall character limit for all sections (as opposed to one limit for each section) because reviewers may have more to say in one section than another (Table 15).

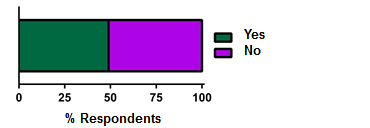

Reading Preliminary Reviews

As there is no face-to-face meeting for Stage 1 reviewers, CIHR developed a process whereby reviewers could read the preliminary reviews of other reviewers assigned to the same application. Fewer than 50% of reviewers read the other reviewers' Stage 1 preliminary reviews (Figure 17A), and reading others' reviews did not often influence a reviewer's assessment of the application (Figure 17B). The majority of reviewers indicated that they did not read the preliminary reviews because they either did not have time or were late submitting their own preliminary reviews to CIHR (Table 16). Other reviewers indicated that they either did not want to be influenced by other reviewers, or did not know how to access the preliminary reviews of other reviewers. Those reviewers who did read others' preliminary reviews spent up to 2 hours doing so.

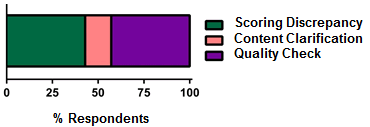

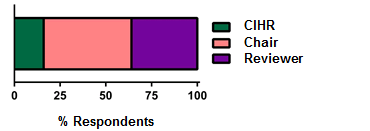

Online Discussion

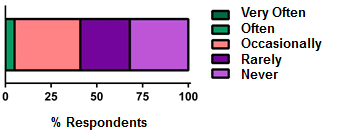

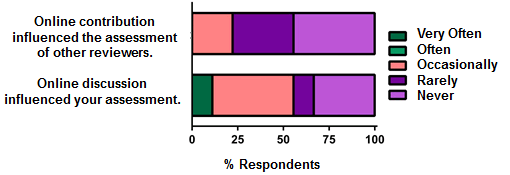

As there is no face-to-face meeting for Stage 1 reviewers, CIHR developed a process whereby reviewers could communicate with other reviewers assigned to the same application using an asynchronous online discussion tool. Approximately 25% of reviewers participated in an online discussion (Figure 18A). The main reasons given by those who did not participate were that their reviews were not completed in time, that they did not have time to participate, that there were no discussions available in which to participate, or that there was nothing to discuss (Figure 18B and Table 17).

Of the 10 reviewers who participated in an online discussion, 7 reviewers initiated a discussion (Figure 19A). Of the 7 who initiated an online discussion, three discussions were initiated to discuss scoring discrepancies, three were initiated to quality check reviews, and one was initiated for a content clarification (Figure 19B). Stage 1 reviewers indicated that either a virtual Chair or the reviewers (at their discretion) should initiate the online discussion (Figure 19C), and that specific criteria should be used to determine whether an online discussion should take place (Figure 19D). Most reviewers indicated that they thought an online discussion should be initiated when there are large discrepancies in ratings or comments (Table 18).

Reviewers who had participated in the online discussion indicated that they did not feel that their contribution influenced the assessment of other reviewers; however, more than 50% mentioned that online discussions with other reviewers often or occasionally influenced their assessment of the application (Figure 20).

Stage 1 Reviews (from Stage 2 reviewers)

The Stage 2 review process requires reviewers to read the Stage 1 reviewer ratings and comments, and integrate the reviews of the 5 Stage 1 reviewers. In order for this to be possible, it is imperative that the Stage 1 review material be of very high quality.

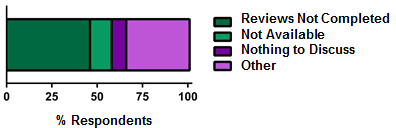

For this competition, all Stage 2 reviewers consulted both the Stage 1 reviews and the applications for every application assigned to them (Figure 22A). Fewer than 50% of Stage 2 reviewers found that the Stage 1 reviewers provided clear justifications to support their ratings (Figure 21). This is of concern, as Stage 2 reviewers will feel obliged to continue reviewing the applications in addition to the Stage 1 reviews until the Stage 1 reviews are of significantly higher quality. This is probably why more than 50% of Stage 2 reviewers indicated that reading both the application and the Stage 1 reviews is necessary to properly review the application (Figure 22B). Most complaints from Stage 2 reviewers were that the Stage 1 reviewers provided very little justification for their ratings (as though they were unaware that other reviewers would be making funding recommendations based on their comments), and that the Stage 1 reviewers did not use the full range of the adjudication scale, which made it difficult to interpret subtle differences between applications (Table 19).

Stage 2 Review Process

Reviewing Stage 1 Reviews

For the Fall 2013 Knowledge Synthesis competition, each Stage 2 reviewer was assigned approximately 12–13 applications. As previously mentioned, Stage 2 reviewers were required to read the Stage 1 reviews in order to make funding recommendations. Reviewers also had access to the application material, and were free to consult the applications as necessary. An interesting outcome of the pilot was that Stage 2 reviewers felt very uncomfortable making decisions regarding their assigned applications without consulting the applications themselves. All Stage 2 reviewers consulted both the applications and the Stage 1 reviews (Figure 22A). Additionally, over 50% of Stage 2 reviewers believed that reading both the application material and the Stage 1 reviews is a necessary function of the Stage 2 reviewer (Figure 22B).

Stage 2 reviewers' discomfort with relying solely on the Stage 1 reviews to make decisions is understandable. Stage 2 reviewers noted that they would feel more comfortable relying on the Stage 1 reviews if they knew the reviewers' backgrounds (Table 19). This and other methods to ease Stage 2 reviewers into the new review process will be investigated in future pilots.

In order for the new process to function effectively, the Stage 1 reviews must be of consistently high quality. Stage 1 reviewers must clearly justify their ratings, point out relevant parts of the application, and indicate when they are uncomfortable evaluating certain aspects of the application due to knowledge limitations. This ensures that Stage 2 reviewers can make informed decisions regarding the overall quality of each application. As noted in the previous section, many Stage 2 reviewers indicated that there were very few comments from reviewers and/or that the comments provided were insufficient to justify the rating given. Furthermore, as the Stage 1 reviewers did not use the full range of the adjudication scale, many applications ended up with ratings of A or B, making it very difficult to differentiate between high-quality and medium-quality applications (Table 19). Methods both to increase the quality of Stage 1 reviewers' comments and to encourage Stage 1 reviewers to use the full range of the adjudication scale have been discussed previously and, as can be seen here, are necessary to ensure an effective Stage 2 review process.

Binning Process

Stage 2 reviewers were evenly split on whether the number of yes/no allocations for the binning process was appropriate (Figure 23). Some reviewers indicated that it was too early to determine what the appropriate number of allocations should be; however, many reviewers suggested that it would be more appropriate to give reviewers a range of yes/no allocations rather than an absolute number (Table 20). It is important to note that reviewers are limited in the number of "yes" and "no" allocations they are permitted, as this is determined by the funding available for the competition.

Pre-Meeting Reviewer Comments

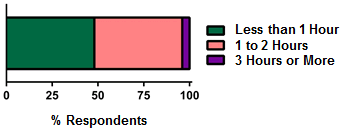

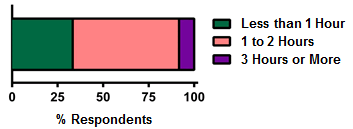

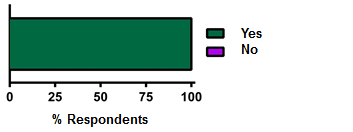

The pre-meeting reviewer comments submitted to CIHR are made available for other Stage 2 reviewers to read in order to prepare for the face-to-face meeting. The majority of reviewers read the pre-meeting comments (Figure 24A); however, most reviewers indicated that reading the comments and/or viewing the binning decisions of other reviewers did not often influence their assessment (Figure 24B). For those reviewers who did not read the pre-meeting comments, the main reason was that they were unaware that they had access to them. Reviewers spent, on average, 1-2 hours reading other reviewers' comments (Figure 24C). Additionally, all reviewers indicated that the character limit for pre-meeting comments was appropriate (Figure 24D).

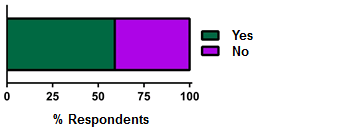

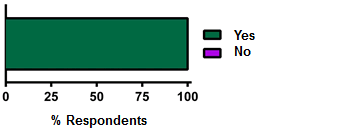

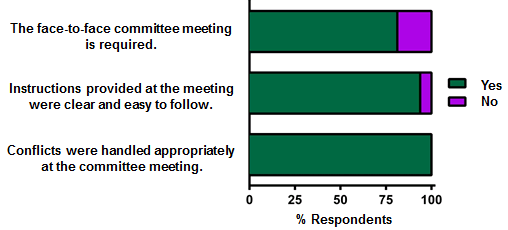

Face-to-Face Meeting

The majority of Stage 2 reviewers thought that the face-to-face meeting is required for the new review process (Figure 25); however, some indicated that, over time, it might not be required (Table 21). Some were torn because they see the value of the face-to-face meeting, but find it difficult to reconcile the value of the meeting with the time and financial investment of a one-day meeting. Overall, reviewers were very satisfied with the instructions provided at the meeting, and with the way that conflicts were handled at the meeting (Figure 25).

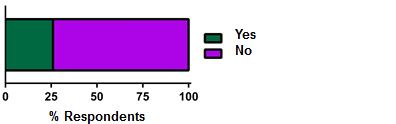

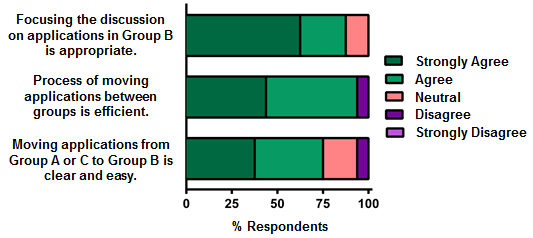

At the face-to-face meeting, Stage 2 reviewers are presented with applications that have been placed into one of 3 groups based on the outcome of the binning process: Group A (applications to fund without discussion), Group B (applications to discuss at the meeting), and Group C (applications that will not be funded and will not be discussed at the meeting). The first task of reviewers at the meeting is to validate that the applications are in the appropriate group, and to make adjustments as required. The majority of reviewers agreed that focusing the meeting discussion on the Group B applications is appropriate (Figure 26), as it allowed more time for more useful discussions of the applications that had a high chance of being funded; however, some also indicated that the number of applications in Group B should be increased (Table 22). Reviewers also agreed that the process of moving applications between groups was clear and efficient (Figure 26). Some reviewers suggested that it would be a good idea to perform some sort of calibration exercise before applications are moved (Table 22).

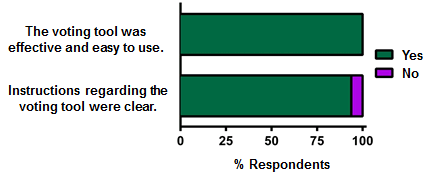

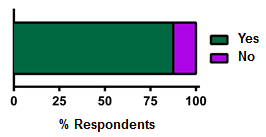

Reviewers used a voting tool to indicate whether they thought the applications should be moved to a different group. All reviewers agreed that the voting tool was effective and easy to use, and the majority of reviewers found the instructions regarding the voting tool to be clear (Figure 27). Some reviewers mentioned that the test run was very helpful.

A funding cut-off line was displayed for reviewers on the list of applications to help reviewers focus their discussions. The majority (over 80%) of reviewers found that the funding cut-off line did help to inform their discussions (Figure 28).

5. Experience with ResearchNet

This section discusses both Stage 1 and Stage 2 reviewer experiences with ResearchNet.

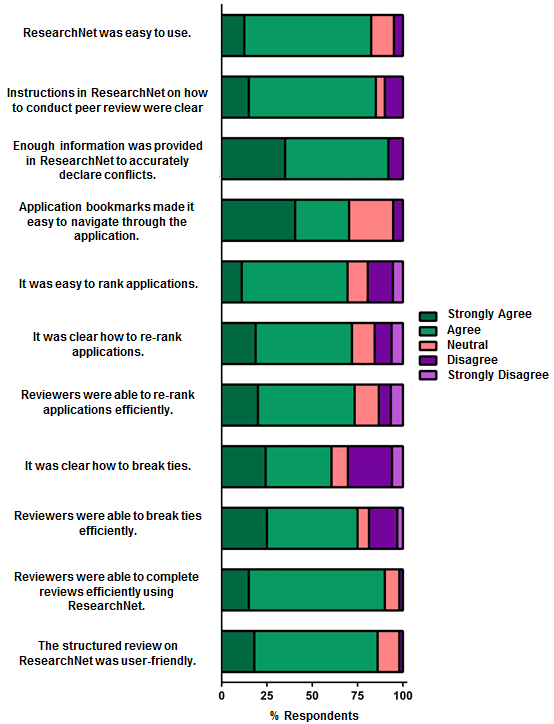

Stage 1 Review Process and the Use of ResearchNet

Overall, Stage 1 reviewers found ResearchNet user-friendly and easy to use (Figure 29). The ranking and re-ranking processes were clear and easy to complete in ResearchNet, as was the process for breaking ties. Reviewers indicated that having an auto-save functionality in ResearchNet would be extremely helpful, as would presenting the adjudication scale both above and below the forms reviewers are completing. Additionally, many reviewers indicated that links to resources should be more easily identifiable and all in one place. A suggestion was made that the links to all resource material should be included as a sidebar in ResearchNet that shows up on every page (Table 23).

Reviewers felt that the instructions in ResearchNet were clear, and that there was enough information in the system to accurately declare conflicts (Figure 29). Some reviewers indicated that it would be helpful if the instructions could be put on multiple pages to serve as a reminder for reviewers while they conduct their reviews. Additionally, multiple reviewers indicated that it was not clear from the instructions that comments regarding both the strengths and weaknesses of each adjudication criterion were required (Table 23).

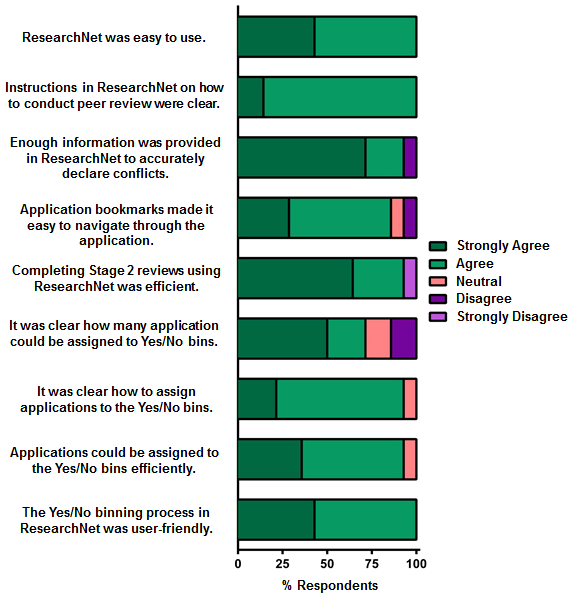

Stage 2 Review Process and the Use of ResearchNet

Overall, Stage 2 reviewers found ResearchNet user-friendly, and further indicated that completing their Stage 2 reviews using ResearchNet was efficient (Figure 30). The application binning process was clear and easy to complete in ResearchNet. Reviewers indicated that it would have been helpful to have both review and application documents downloadable in the same place. Additionally, many reviewers indicated that ResearchNet should allow multiple screens to be viewed simultaneously to eliminate the need to constantly move between pages. Reviewers indicated that they would have liked to see the list of applicants ordered from highest to lowest score rather than alphabetically. In order to reduce the burden on Stage 2 reviewers, a suggestion was made that Stage 1 reviewer comments should be interwoven so that all reviewer comments relating to a given criterion (e.g. Quality of Idea) are in the same place in the document (Table 24).

Reviewers felt that the instructions in ResearchNet were clear, and that there was enough information in the system to accurately declare conflicts (Figure 30). Some reviewers pointed out that the character limits for Stage 2 reviewers were not indicated in the instructions. Also, some requested that the binning instructions (in particular, the number of "yes" and "no" bins available) be placed in ResearchNet as a reminder to reviewers. Additionally, some reviewers thought it would be important to know about the Stage 1 review process, and how CIHR decided which applications moved to Stage 2 (Table 24).

6. Overall Satisfaction with the Project Scheme Design

This section discusses the overall satisfaction of applicants and reviewers (Stage 1 and Stage 2) with the new process and the Project Scheme design elements.

Reviewer Satisfaction with the Stage 1 Review Process

The majority of Stage 1 reviewers were satisfied with the new structured review process (Figure 31). Reviewers indicated that the process could be improved if CIHR could ensure that reviewers submitted their reviews in a timely manner. Additionally, some felt that the instructions should be improved, and that CIHR could accomplish this by providing step-by-step instructions for Stage 1 reviewers. Some reviewers also suggested that it would be extremely beneficial if ResearchNet allowed multiple applications to be viewed simultaneously (Table 25).

Reviewer Satisfaction with the Stage 2 Review Process

More than 90% of Stage 2 reviewers were satisfied with the Stage 2 review process (Figure 32). Many reviewers commented that they enjoyed participating in the review process, and that the new process was well thought-out, fair, more efficient, and an improvement over the current peer review method (Table 26). Some reviewers suggested that the application material, the Stage 1 reviewer material, and the Stage 2 input forms should be accessible at the same time in the same location. The evaluation criteria for Stage 2 reviewers were unclear to some reviewers, indicating that it might be important to develop and/or provide an instructional document regarding evaluation criteria for Stage 2 reviewers. There were many comments regarding the Stage 1 reviews, namely that it would have been helpful to know the background of the Stage 1 reviewers, and that the quality of Stage 1 reviewer comments needed to be significantly improved (Table 26).

Applicant Satisfaction with the Review Process

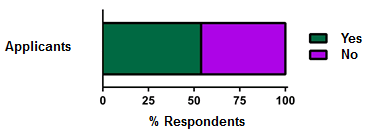

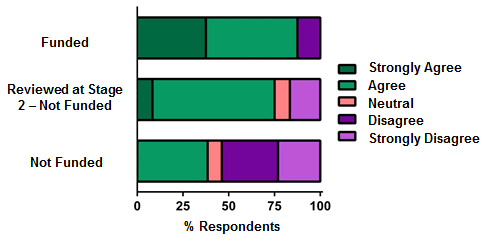

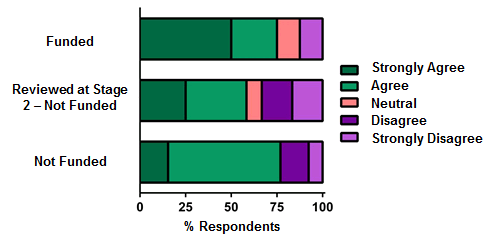

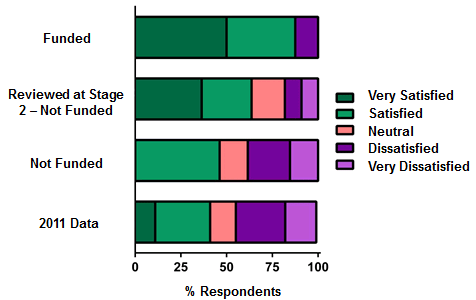

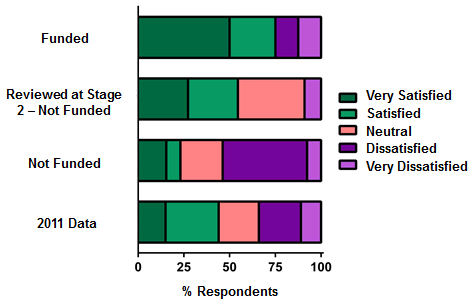

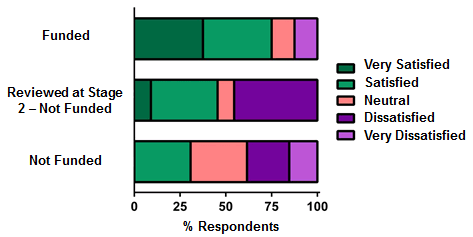

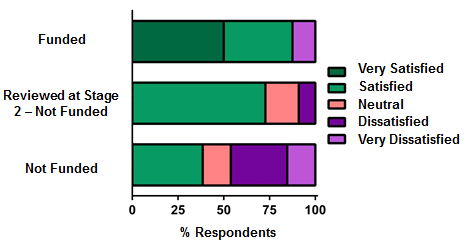

Applicant responses were divided into 3 groups: applicants who were funded (Funded), applicants whose applications moved to Stage 2 review but who ultimately were not funded (Stage 2 – Not Funded), and applicants who were deemed unsuccessful following Stage 1 review (Not Funded).

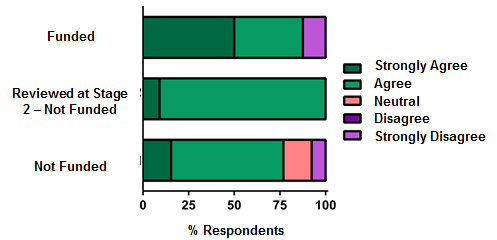

The majority of funded and Stage 2 – not funded applicants indicated that their reviews were consistent in that the rating given and the written justification for the rating matched (Figure 33A). More than 50% of the applicants whose applications did not move to Stage 2 review indicated that the reviews that they received were not consistent (Figure 33A). Some applicants indicated that they were concerned with the validity of the new peer review process because there was a significant amount of variability between reviewers. There were several comments that indicated that the ratings were not consistent with the justifications, or if they were, they did not match the ranking (Table 27).

A point of concern was that some applicants indicated that the reviewer comments they received were not constructive and were overly negative or critical, which left some wondering whether the Stage 1 reviewers knew that their comments would be shared with the applicants (Figure 33B and Table 28). The majority of applicants in all groups indicated that the structured review process was beneficial in that a specific rating and comments to justify the rating are provided for each adjudication criterion (Figure 33C). Points of concern for applicants were, again, that some reviewers provided very few comments to justify their ratings, and that it was difficult to know which suggestions should be followed as some reviewers had divergent views on applications (Table 29).

The majority of funded applicants agreed that the structured review process was fair and transparent (Figure 33D). Almost half of the Stage 2 – not funded applicants indicated that the review process was fair and transparent, and an additional 1/3 neither agreed nor disagreed (neutral). Slightly more than 1/3 of applicants who were not funded indicated that they did not believe that the review process was fair or transparent; however, over 50% of non-funded applicants either agreed or felt neutral regarding the fairness of the review process. Applicants are very anxious to know how the 5 reviews are weighted against each other, and how CIHR deals with outlier reviews (Table 30).

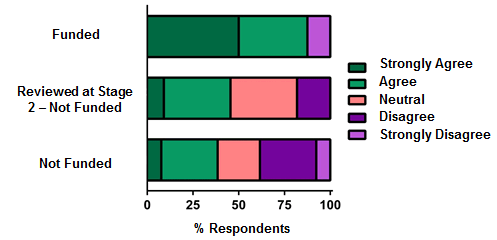

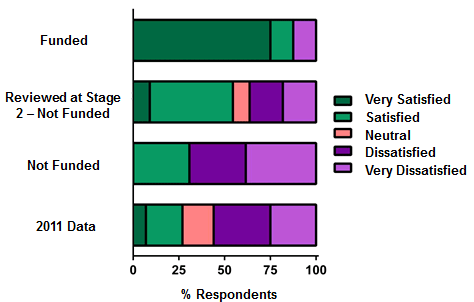

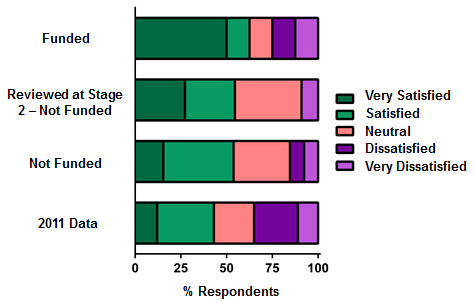

Applicants indicated their level of satisfaction with various aspects of the review process and with the overall review experience (Figure 34). Applicants who were funded indicated a high level of satisfaction regarding the consistency of peer review comments. Not surprisingly, this level of satisfaction decreased in applicants whose applications made it to Stage 2 review, but who were not ultimately funded, and decreased further still in applicants who were not successful following Stage 1 (Figure 34A). Both funded and Stage 2 – not funded applicants were highly satisfied with the quality of the peer review comments they received. Only about half of the applicants who were not funded were satisfied with the quality of the review comments that they received (Figure 34C).

Only about 50% of all applicants were satisfied with the clarity of the adjudication criteria (Figure 34B). Interestingly, the level of satisfaction with the clarity of the rating system was highly dependent on the applicant group. Approximately 75% of the funded applicants were satisfied with the clarity of the rating system, whereas only about 50% of the Stage 2 – not funded applicants and fewer than 25% of non-funded applicants were (Figure 34D). Similarly, applicants' confidence in the review process also depended on the applicant group: applicants who were funded had the most confidence in the process, applicants who were not funded had the least, and the Stage 2 – not funded applicants were in the middle (Figure 34E).

Overall, both funded applicants and those applicants who made it to Stage 2 but were not ultimately funded were satisfied with the new structured review process (Figure 35). Those applicants who were deemed unsuccessful following Stage 1 review had split levels of satisfaction with the review process: approximately 50% of non-funded applicants were generally satisfied with the review process and approximately 50% were not. Applicants indicated a number of ways to improve the review experience from an applicant's perspective (Table 31). Given the concerns about the lack of consistency between reviewer assessments of the same application, applicants suggested that there should be an arbitration process for applications with divergent reviews, or that CIHR should be more transparent about how reviewer ratings and rankings are integrated following the Stage 1 review. Additionally, applicants were interested in understanding the review process better. For example, there was some concern about the applications that made it to Stage 2 but were neither discussed nor funded (Group C applications) and the rationale behind this. Applicants also thought that CIHR should communicate to applicants why their application did not make it to Stage 2 review. Applicants interested in resubmission believed that CIHR should communicate whether the reviewers would be the same in the following competition. It is also important to applicants that CIHR be more specific about what information should go in each section of the structured application so that both applicants and reviewers interpret the sections in a similar manner.

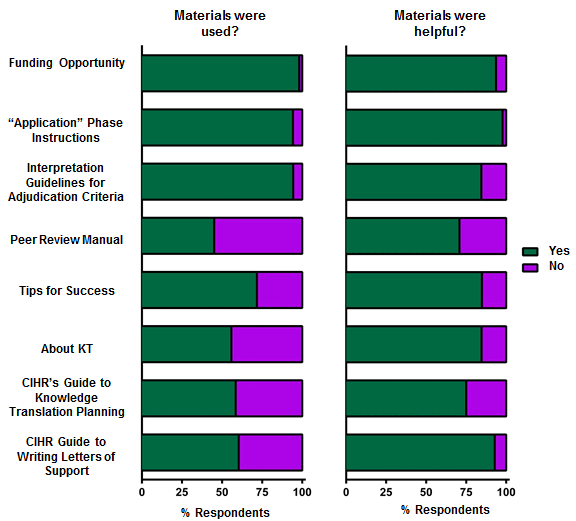

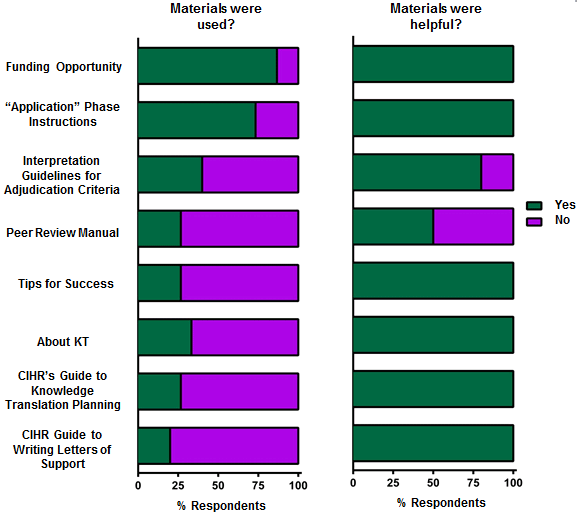

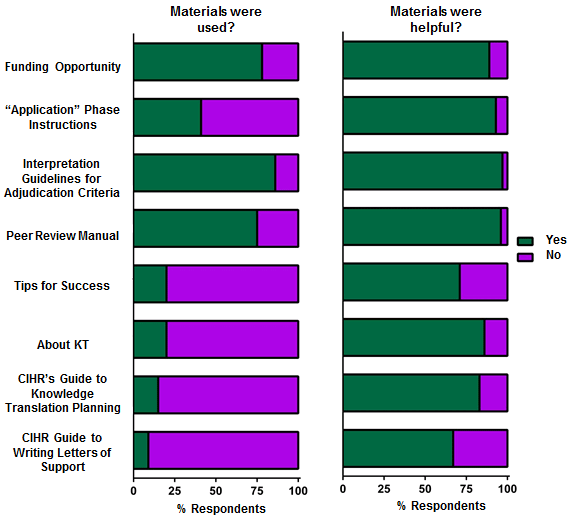

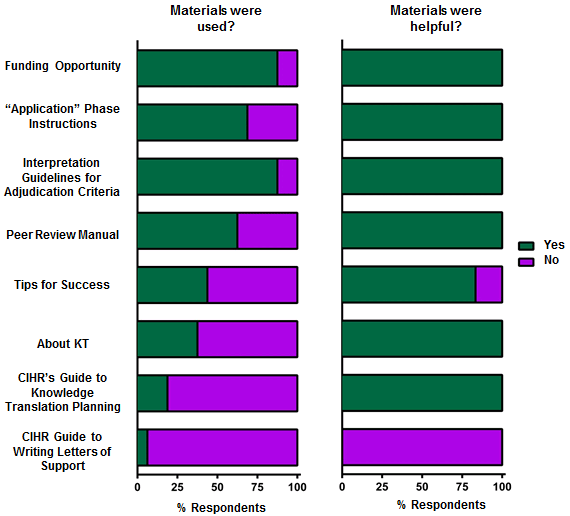

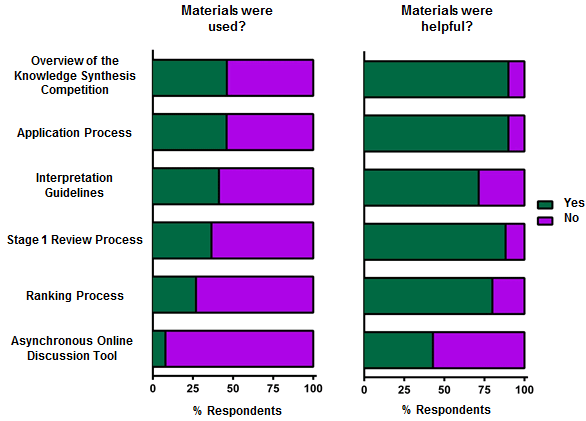

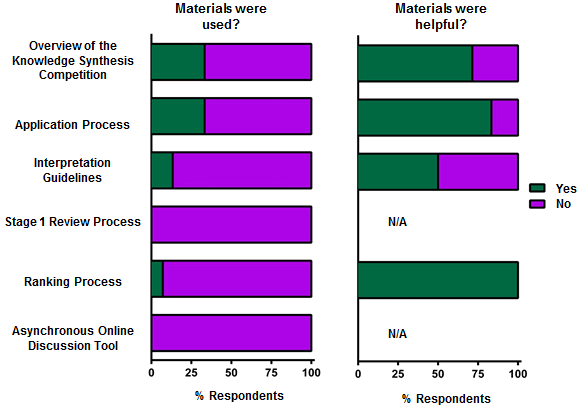

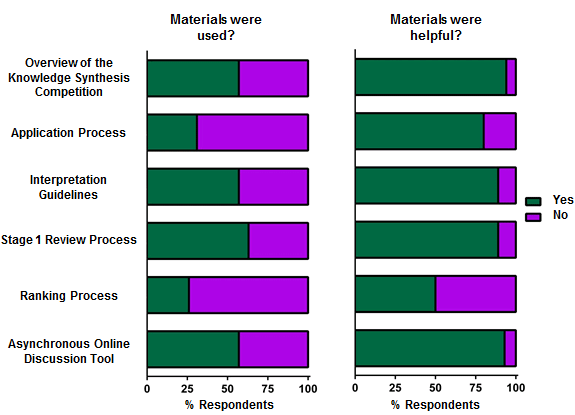

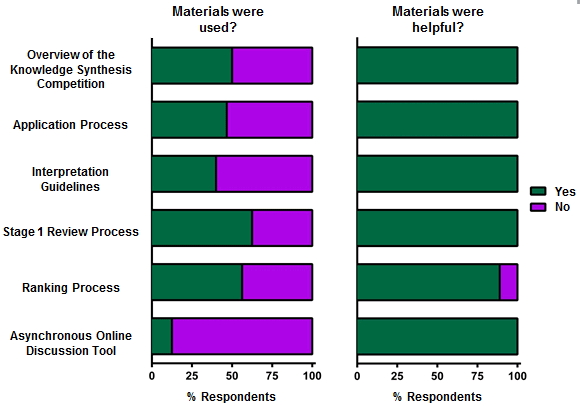

Documentation and Learning Lessons Prepared for the Knowledge Synthesis Pilot

CIHR developed a number of documents to assist the Fall 2013 pilot participants (Applicants, Research Administrators, Stage 1 Reviewers, and Stage 2 Reviewers) in completing their required tasks. Throughout the survey process, feedback was collected on the extent to which the materials were used and how helpful they were perceived to be. While the materials used varied by role in the competition, the majority of respondents who used the material found it useful (Figure 36 and Figure 37). Respondents did note that the number of materials should be reduced and that they should all be found in the same place (Tables 32 and 33).

Conclusions and Considerations for Future Pilots

The Fall 2013 Knowledge Synthesis competition was used as CIHR's first full Project Scheme pilot. Though the sample size was very small and skewed towards Pillar 3 and/or 4 researchers, it was a good first test of the new Project Scheme design elements and peer review process. Overall, there were many positive elements that will serve as the foundation for subsequent pilots.

Given the limitations of this pilot, results must be interpreted with caution: positive or negative responses do not necessarily mean that the design is or is not appropriate. Applicants, research administrators, and reviewers are getting used to the new technology and processes, and it may therefore take time before any improvements are measurable. CIHR is also still learning, and is making improvements and refinements based on the feedback received from the research community. Results will continue to inform refinements to the implementation of the design elements and/or improvements to the training approach. Pilots will be repeated within other competitions.

Based on the results presented in this report a number of recommendations are being considered and assessed for future pilots. Note that not all of the recommendations will be implemented in a subsequent pilot, and may instead be deferred until there is more data to support the assessment:

ResearchNet

- An auto-save functionality should be built that saves applicants' and reviewers' work before the session disconnects due to inactivity.

- The ability to include figures within the structured application should be investigated.

Character Limits

- Increasing the character limit for each section of the structured application should be considered.

- The character limit for reviewers should be re-examined.

- Using word limits as opposed to character limits should be considered as word limits might be more appropriate, and would reduce the use of acronyms and other jargon.

Adjudication Criteria

- The distinction between "Quality of Idea" and "Importance of Idea" is not clear. CIHR should either re-write the interpretation of the two adjudication criteria, ensuring to remove all overlapping statements, or consider merging the two sections into one section called "Background".

- The adjudication criteria should not be weighted equally. More weight should be allocated to the Approach section at the expense of the Quality and/or Importance of Idea criteria.

Stage 1 – Structured Review Process

- Instructions should be provided to applicants on how to deal with divergent opinions from reviewers when updating their application for resubmission.

- Once this process is solidified, it will be important to share with the research community how the rankings from the 5 reviewers are consolidated (i.e. how it is decided which applications move to Stage 2 review). This will be especially important for any funding organization that wishes to adopt CIHR's new peer review process, because how to integrate reviews and deal with outlier reviews is a difficult question to address.

- Stage 1 reviewers should be trained that their reviews are being used by Stage 2 reviewers to make funding decisions, and therefore, need to be of high quality.

- CIHR should explore using Stage 2 reviewers to provide feedback on the quality of reviews of Stage 1 reviewers.

Stage 1 – Attachments

- Allowing methodological attachments that are not tables/figures should be considered, as there is significantly less space in which to include all methodological information.

Stage 1 – Adjudication Scale

- Alternatives to the current adjudication scale (ABCDE) should be considered as Stage 1 reviewers feel uncomfortable using the full range of the scale, which in turn, makes it very difficult for anyone to distinguish between applications. Some suggestions have been included below:

- Use different letters for the scale (e.g. LMNOP). It is possible that academics find the letter ratings too similar to letter grades and feel uncomfortable giving out C's, D's, or E's.

- Use a scale with finer increments at the top end of the scale. Having 5 letters represents 20% differences between the ratings and many differences are much more subtle. A+/A/A-/B+/B/B-/C was a scale suggested by a Stage 1 reviewer.

Stage 1 – Online Discussion

- CIHR should determine whether it should be mandatory to participate in an online discussion, and under what set of circumstances.

- CIHR should solidify the role of the virtual chair/moderator, and ensure that there are people who can fulfill this role for future competitions.

- CIHR could develop a decision tree that either a chair or a reviewer could use to determine whether an online discussion should be initiated.

Stage 2 – Application Binning

- A fixed number of yes/no allocations should not be given to reviewers, as it forces some applications into an inappropriate bin. Instead, reviewers should be given a range of yes/no allocations to use at their discretion.

Overall – Pilot Documentation

- There is a considerable amount of documentation and training available to pilot participants. The documentation should be evaluated to determine whether all of it is necessary. The risk of having too much documentation is that participants will stop reading and may miss essential documents.

- Links to all documentation and lessons created for each pilot should be kept in one place so that participants can always find what they need.

Recommendations for Further Analyses

The Research Plan developed for the reforms of CIHR's open programs describes a host of analyses that will take place throughout the implementation phase of the reforms in order to ensure that the modifications made to the current programs are functioning appropriately. In terms of the current study, the following additional analyses are being proposed:

- Applicants indicated that Stage 1 reviewers were not consistent in their reviews (the rating of an adjudication criterion did not match the written justification). CIHR will investigate the extent to which this is true. If this is a significant issue over a larger sample size, further investigation will be conducted to ascertain the reasons for the discrepancies.

- Some applicants and Stage 2 reviewers found Stage 1 reviewer comments to be insufficient to justify the ratings given. Using copies of the Stage 1 reviews, CIHR will determine whether this is true, and will make recommendations for how to improve the quality of Stage 1 reviews.

- In order to assess whether the multi-stage approach to review is valid, CIHR will investigate how often, and by how much, applications change ranked position between Stage 1, Pre-Stage 2, and the final outcome.

Appendix 1: Survey Demographics

Survey Participants

Research Administrators

Response Rate

The survey was sent to 32 research administrators. Sixteen submitted survey responses, and 15 completed the entire survey (response rate = 46.9%). All respondents resided in Canada and completed the survey in English.
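As a worked example, the rate reported for research administrators corresponds to the number of completed surveys (15, rather than the 16 submitted responses) divided by the number of surveys sent:

$$
\text{Response rate} = \frac{\text{completed surveys}}{\text{surveys sent}} = \frac{15}{32} = 0.46875 \approx 46.9\%
$$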

Respondent Demographics

Administrators classified themselves as either "Research Administrator" (93.4%) or "Research Facilitator" (6.6%).

Previous Experience with Knowledge Synthesis Competitions and Peer Review

Nine research administrators (56.3%) declared having submitted an application to a Knowledge Synthesis Grant competition in the past.

Applicants (after submitting the application)

Response Rate

The survey was sent to the 77 applicants who submitted applications to the Fall 2013 Knowledge Synthesis competition. Sixty applicants submitted survey responses, and 53 completed the entire survey (response rate = 68.8%). All except one of the respondents resided in Canada (98.3%), and 91.7% completed the survey in English.

Respondent Demographics

Applicants classified themselves as an "Early career scientist" (35.6%), "Mid-career scientist" (25.4%), "Senior scientist" (35.6%), or "Other" (3.4%: 1 Knowledge User and 1 Co-applicant). Applicants indicated that their present position was a "Professor" (28.8%), an "Associate Professor" (18.6%), an "Assistant Professor" (28.8%), a "Scientist" (10.2%), a "Researcher" (6.8%), or "Other" (6.8%: 1 Clinician, 1 Senior Scientist, 1 Postdoctoral Fellow, and 1 Research Administrator).

Most applicants indicated that their primary research domain was "Health systems/services" (44.1%), followed by "Clinical" (32.2%), "Social, cultural, environmental and population health" (22%) and "Biomedical" (1.7%).

Previous Experience with Knowledge Synthesis Competitions and Peer Review

Thirty-four applicants (57.6%) indicated that they had submitted an application to a Knowledge Synthesis Grant competition in the past. In this current competition, 67.6% of applicants submitted a new application, while 32.4% submitted a revised application.

Thirty-two applicants (54.2%) have served as CIHR peer reviewers in the past as members of the "Knowledge Synthesis Committee" (14%), "Grants Committee" (48.8%), "Awards Committee" (16.3%), or "Other" (20.9%: 4 Planning/Dissemination Grants Committee, 1 New Investigator Grant Committee, 1 Knowledge Translation Grant Committee, 1 Banting PDF Fellowship Committee, and 1 respondent who answered "not applicable").

Stage 1 Reviewers

Response Rate

The survey was sent to the 49 reviewers who participated in the Stage 1 review. Forty reviewers submitted survey responses (response rate = 81.6%), and 36 completed the entire survey. All except two of the respondents resided in Canada (95%), and 92.5% completed the survey in English.

Respondent Demographics

Reviewers classified themselves as an "Early career scientist" (20%), a "Mid-career scientist" (30%), a "Senior scientist" (22.5%), a "Knowledge user" (22.5%), or "Other" (5%: 1 Clinical Scientist and 1 Research Administrator). Reviewers indicated that their present position is as a "Professor" (17.5%), an "Associate Professor" (7.5%), an "Assistant Professor" (32.5%), a "Researcher" (10%), a "Clinician" (2.5%), a "Senior Scientist" (2.5%), a "Scientist" (2.5%), or "Other" (25%: 4 Administrators, 4 Executive Managers, 1 Research Librarian, and 1 Health Funder).

Most respondents indicated that "Health systems/services" (41%) was their primary research domain, followed by "Clinical" (25.6%), "Social, cultural, environmental and population health" (25.6%), and "Biomedical" (7.7%).

Previous Peer Review Experience

Thirty-seven reviewers (92.5%) indicated that they had previous experience with ResearchNet. Eighty percent (80%) of respondents had served as CIHR peer reviewers prior to this competition as members of a "Grants Committee" (78.4%), an "Awards Committee" (13.5%), or "Other" (8.1%: 1 CIHR Standing Ethics Committee, 1 Team Grant Committee, and 1 Knowledge Translation Grant Committee). Just under half (47.5%) of respondents had previously reviewed applications submitted to a Knowledge Synthesis Grant competition.

Stage 2 Reviewers

Response Rate

The survey was sent to the 18 reviewers who participated in the Stage 2 review. Seventeen reviewers submitted survey responses, and 16 completed the entire survey (response rate = 88.9%). All respondents resided in Canada, and 94.1% completed the survey in English.

Respondent Demographics

Two of the reviewers served as "Chair/Scientific Officer", and 15 as "Final Assessment Stage committee members". The reviewers classified themselves as a "Mid-career scientist" (20%), a "Senior scientist" (20%), a "Knowledge user" (53.3%), or a "Clinical investigator" (6.7%). Reviewers indicated their present position as a "Professor" (20%), an "Assistant Professor" (20%), a "Researcher" (13.3%), a "Scientist" (6.7%), a "Knowledge User" (33.3%), or "Other" (6.7%: 1 "Executive Director").

Most survey participants indicated "Health systems/services" (50%) as their primary research domain, followed by "Social, cultural, environmental and population health" (28.6%) and "Clinical" (21.4%). No reviewer indicated a primary affiliation with the "Biomedical" research domain.

Previous Peer Review Experience

All 15 reviewers indicated that they had served as CIHR peer reviewers in past competitions, and that they had previous experience with ResearchNet. Respondents indicated that they had previously participated in a "Grant Peer Review Committee" (65%), a "Grant Merit Review Committee" (5%), an "Awards Committee" (10%), or "Other" (20%: 2 Knowledge Translation Grant Committee, 1 Governing Council-IAB Advisory Board, and 1 "Other Agency Award Committee"). The vast majority (80%) of survey participants had previously reviewed applications submitted to a Knowledge Synthesis Grant competition.

Applicants (after receiving competition results)

Response Rate

The survey was sent to the 77 applicants who submitted applications to the Fall 2013 Knowledge Synthesis competition. Thirty-seven applicants submitted survey responses, and 31 completed the entire survey (response rate = 49.3%). The majority of respondents (85.3%) were among those who had previously participated in the first applicant survey.

Respondent Demographics

The majority of respondents (91.9%) resided in Canada, while 3 applicants were located in Australia, Germany, or the U.S.A. The majority (91.9%) of participants completed the survey in English.

Applicants classified themselves as an "Early career scientist" (42.9%), a "Mid-career scientist" (25.7%), a "Senior scientist" (28.6%), or a "Reviewer" (2.8%). Applicants indicated that their present position is as a "Professor" (20.6%), "Associate Professor" (26.5%), "Assistant Professor" (32.4%), "Research Scientist" (14.7%), "Research Administrator" (2.9%) or "Research Fellow" (2.9%).

Most survey participants indicated "Health systems/services" (35.3%) or "Clinical" (35.3%) as their primary research domain. This was followed closely by "Social, cultural, environmental and population health" (23.5%) and "Biomedical" (5.9%) research domains.

Previous Experience with Knowledge Synthesis Competitions and Peer Review

The majority of applicants (73.5%) indicated that they had submitted an application to a previous Knowledge Synthesis Grant competition. In the current competition, 58.3% of applicants submitted a new application, while 41.7% submitted a revised application.

Over half of the applicants (57.6%) had previously served as CIHR peer reviewers on a variety of different committees: Knowledge Synthesis Committee (4.5%), Grants Peer Review Committee (54.6%), Awards Committee (22.7%), or "Other" (18.2%: Meeting and Planning Grant Review Committee, Knowledge Translation Grants Committee, Special Projects Grant Review Committee, and Randomized Control Trials Review Committee).

Prior Knowledge Synthesis Competitions

Data collected from previous Knowledge Synthesis competitions were used for comparison purposes in a number of sections. Data from the following competitions were used: Fall 2011 competition, Spring 2012 competition, Fall 2012 competition, and Spring 2013 competition.

Appendix 2: Detailed Survey Results

Structured Application Process

Figure 1-A. Describe the ease of use of the structured application form.

Figure 1-B. The structured application format was intuitive and easy to use.

Figure 1-C. Level of satisfaction with the structured application process:

Figure 1. Applicant and Research Administrator Impression of the Structured Application Process. Applicants to the Fall 2013 Knowledge Synthesis competition (n=60 respondents) and Research Administrators (n=15 respondents) involved in the application process were surveyed following the application submission. Applicants (n=54) were asked to indicate the perceived ease of use of the structured application form (A). Applicants (n=54) and Research Administrators (n=13) were asked to indicate whether they thought the structured application format was intuitive (B) and their degree of satisfaction with the application process (C).

Comments Regarding the Structured Application Form

Figure 2-A. Compared to last time, completing the structured application form was:

Figure 2-B. Compared to last time, submitting the structured application form was:

Figure 2-C. Compared to a previous Knowledge Synthesis competition, submitting an application using the structured application format was:

Figure 2. Use of the Structured Application Process Compared to Previous Applications to the Knowledge Synthesis Competition. Applicants to the Fall 2013 Knowledge Synthesis competition (n=60 respondents) and Research Administrators (n=15 respondents) involved in the application process were surveyed following the application submission. Applicants (n=32) were asked to describe the ease of use (A) and the amount of work required to apply (B) compared to previous applications to the Knowledge Synthesis competition. Applicants (n=29) and Research Administrators (n=9) were invited to indicate their level of satisfaction with the structured application process (C).

Structured Application Format vs. Traditional Application Method

Figure 3-A. Character limit was adequate to respond to each adjudication criterion?

Figure 3-B. Ideal page limits according to applicants:

Figure 3. Character Limits of the Structured Application Form. Applicants to the Fall 2013 Knowledge Synthesis competition (n=60 respondents) were surveyed following the application submission. Applicants (n=54) were asked to indicate whether the set character limit was adequate to respond to each adjudication criterion using the structured application form (A) and, if the character limit was insufficient, to indicate the ideal limit (B).

Applicant Comments Related to Character Limits

Figure 4-A. Character limit was adequate to respond to each adjudication criterion?

Figure 4-B. Character limits allowed for sufficient information to be included by the applicant?

Figure 4. Stage 1 Reviewer Reactions to the Structured Application – Character Limits. Stage 1 reviewers of the Fall 2013 Knowledge Synthesis competition (n=40 respondents) were surveyed following the submission of the Stage 1 reviews. Respondents indicated whether there was sufficient information provided by applicants in the structured application to appropriately assess each adjudication criterion (n=40) (A) and specified sections of the structured application that could use more space (n=3) (B).

Figure 5-A. Value level of allowable attachments:

Figure 5-B.

Figure 5. Stage 1 Reviewer Reactions to the Structured Application – Allowable Attachments. Stage 1 reviewers of the Fall 2013 Knowledge Synthesis competition (n=40 respondents) were surveyed following the submission of the Stage 1 reviews. Respondents indicated the value level (high, medium, low, none) of the allowable attachments reviewed as part of the application (n=40) (A), and of certain sections of the applicant CVs (n=34) (B).

Suggestions Regarding Other Types of Useful Application Attachments

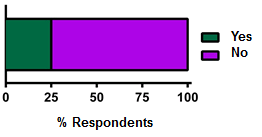

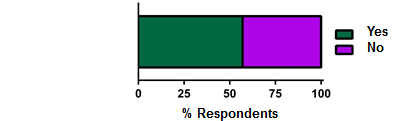

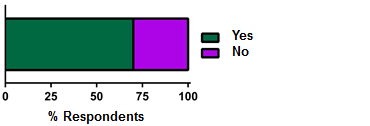

Figure 6. Did applicants experience problems completing the structured application form?

Figure 6. Non-Technical Problems Encountered in Completing the Structured Application Form. Applicants to the Fall 2013 Knowledge Synthesis competition (n=60 respondents) were surveyed following the application submission. Applicants (n=56) indicated whether they had problems completing the structured application form by choosing either "Yes" or "No".

Problems Encountered in the Completion of the Structured Application Form

Reviewer Workload

Figure 7-A. Workload assigned to Stage 1 reviewers was:

Figure 7-B. Compared to a previous Knowledge Synthesis review experience, the workload of the following review activities was:

Figure 7-C.

| | Spring 2013 Knowledge Synthesis Competition (Mean +/- SEM) | Fall 2013 Knowledge Synthesis Competition (Mean +/- SEM) |
|---|---|---|