Overview of the Reforms to CIHR’s Open Suite of Programs: Peer Review Expert Panel

November 2016

Table of contents

- Objective

- Origins for the design, including rationale for the changes (PREP Q1, 2)

- Overview of the Design (Panel Q1)

- Foundation and Project Grant Program Implementation Process (Panel Qs 2, 3, 4)

- Description of evidence to date (Panel Qs 1, 2)

- Overview of areas for growth and ongoing monitoring (PREP Q4, 6)

Figures

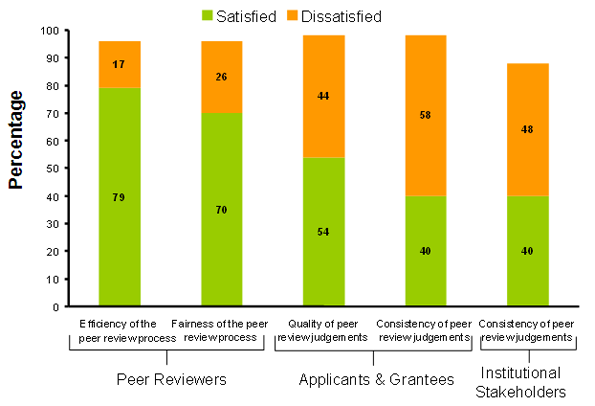

| Figure 1 | Stakeholder Satisfaction - Peer Review (Percent of respondents who provided an opinion), Results from 2010 IPSOS Reid Study |

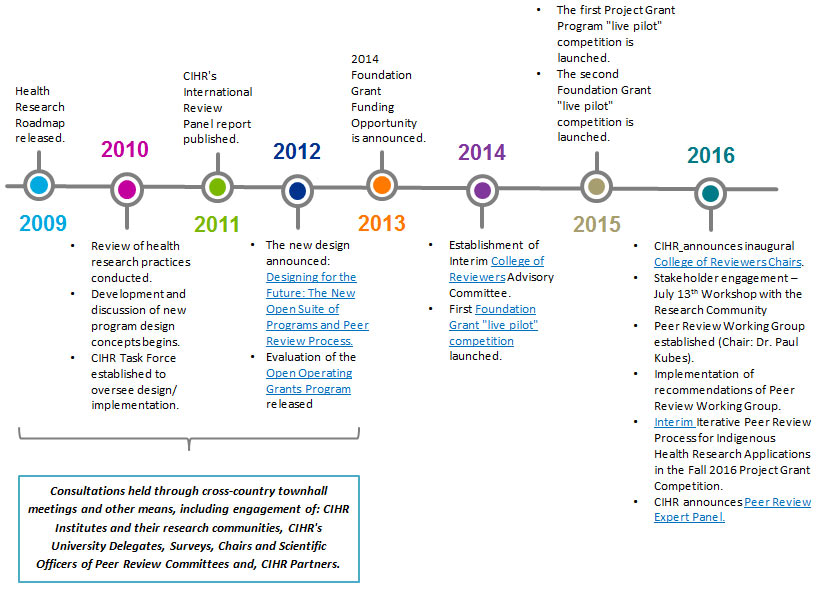

| Figure 2 | Timeline of CIHR Reforms process |

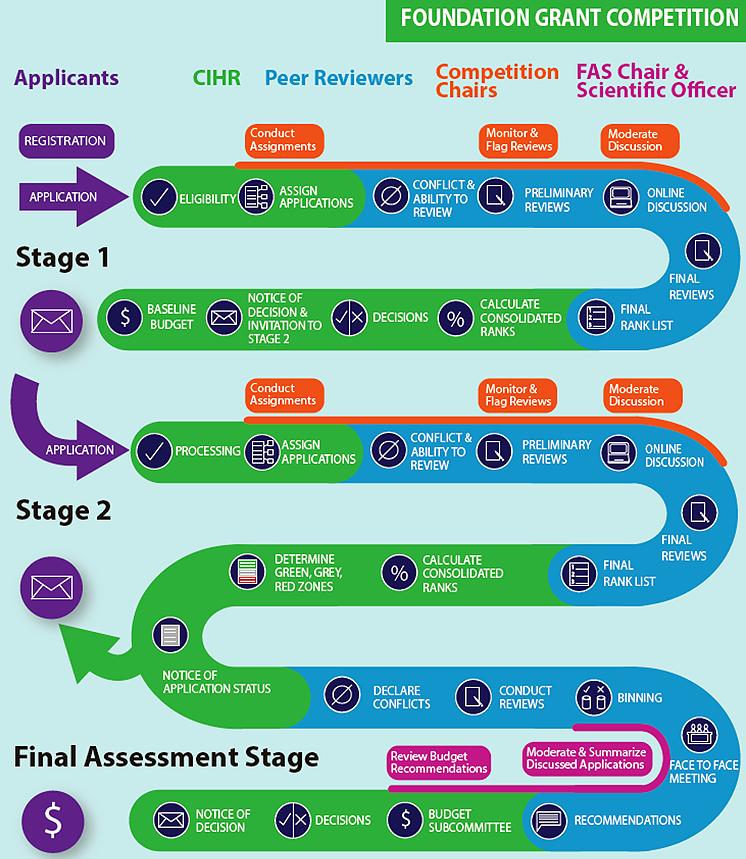

| Figure 3 | Schema of Foundation Grant Program Competition Cycle |

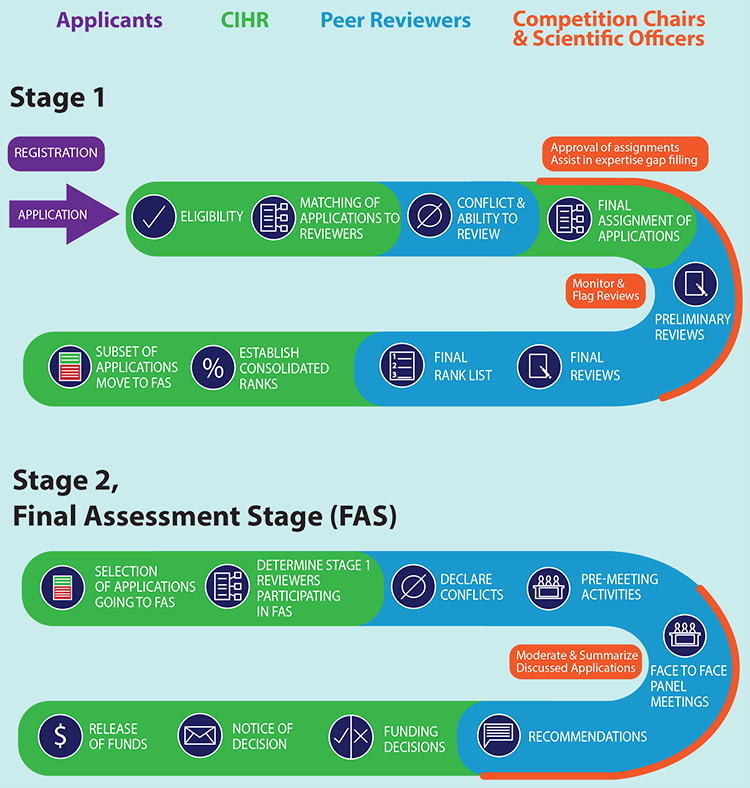

| Figure 4 | Schema of Project Grant Program Competition Cycle |

| Figure 5 | Design elements of the new programs and how they were intended to address identified challenges |

| Figure 6 | Distribution of application pressure and success rates in the CIHR Investigator-Initiated Grant programs, 2006-07 to 2015-16 |

| Figure 7 | Distribution of success rates of Investigator-Initiated Grant programs by primary pillar, 2006-07 to 2015-16 |

| Figure 8 | Distribution of success rates of Investigator-Initiated Grant programs by sex of NPI, 2006-07 to 2015-16 |

| Figure 9 | Distribution of success rates of Investigator-Initiated Grant programs by career stage of NPI, 2006-07 to 2015-16 |

| Figure 10 | Breakdown of new/early career investigator cohort by proportion of applications submitted and funded, 2015 Foundation Grant Competition |

| Figure 11 | Breakdown of mid/senior career investigator cohort by proportion of applications submitted and funded, 2015 Foundation Grant Competition |

| Figure 12 | Breakdown of proportion of applications submitted and funded, Spring 2016 Project Grant Competition |

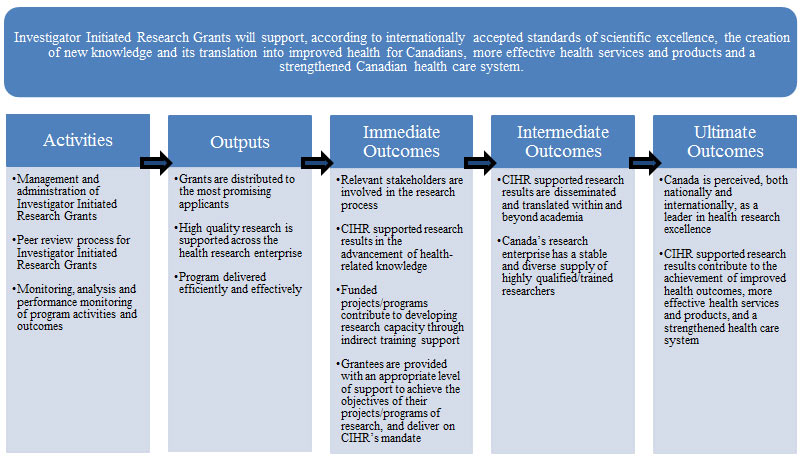

| Figure 13 | Draft program logic model for Investigator-Initiated Research at CIHR |

Tables

Table 1. Design element differences across Open Operating (OOGP), Foundation, and Project Grant Programs

Table 2. Areas for action and corresponding CIHR response following CIHR Internal Reviews, 2015

Table 3. Enhancements implemented to the 2015 Foundation Grant “live pilot” following 2014 pilot

Table 4. Action areas and requested changes to the 2016 Project Grant competition (Fall launch) following the July 13th, 2016 Working Meeting of CIHR and its research community

Table 5. Recommendations from the Peer Review Working Group for the 2016 Project Grant competition

Table 6. CIHR implementation of recommendations from the Peer Review Working Group for the CIHR Project Grant competition (Fall 2016)

Table 7. Status of core College functions overseen by College Chairs

Table 8. Draft performance measurement framework for Investigator-Initiated Research at CIHR

1. Objective

The objective of this briefing paper is to synthesize existing CIHR evidence, analysis, and external links in a single document for the purposes of orienting the Peer Review Expert Panel to:

- the design of CIHR's reforms to its open suite of investigator-initiated research programs and peer review processes and how these reforms respond to the peer review challenges identified by CIHR;

- the extent to which these changes in program architecture and peer review relate to the challenges posed by the breadth of the CIHR mandate, the evolving nature of science, and the growth of interdisciplinary research;

- CIHR's mechanisms for ensuring peer review quality and impacts.

This document is not meant to be an evaluation of the reforms, nor is it intended to replace the interpretation of data and/or context that will be offered during the face-to-face Panel meetings on January 16-18, 2017. Rather, it is intended to provide a description and evidence base to support a common understanding of the reforms and to complement what the Panel will hear during its meetings.

2. Origins for the design, including rationale for the changes (PREP Q1, 2)

As stated in the CIHR Act, CIHR's mandate is to "excel, according to internationally accepted standards of scientific excellence, in the creation of new knowledge and its translation into improved health for Canadians, more effective health services and products and a strengthened Canadian health care system."Footnote 1

The Canadian Institutes of Health Research (CIHR) is the major Canadian Government funder of research in the health sector and classifies its research across four "pillars" of health research: biomedical; clinical; health systems/services; and population health. CIHR invests approximately $1 billion (CAN) each year to support health research.Footnote 2 This investment supports both investigator-initiated and priority-driven research.

CIHR classifies investigator-initiated research as research in which individual researchers and their teams develop proposals for health-related research on topics of their own choosing. Just over half of CIHR's $1 billion budget is used to support investigator-initiated research.Footnote 2 The balance is spent on priority-driven research, which refers to research in areas identified as strategically important by the Government of Canada; for these areas, themed calls for research proposals are issued.

Until 2014, the Open Operating Grants Program (OOGP)Footnote 3 was CIHR's primary mechanism through which investigator-initiated research was supported. The specific objectives of the OOGP were to contribute to the creation, dissemination and use of health-related knowledge, and to help develop and maintain Canadian health research capacity, by supporting original, high quality projects or teams/programs of research proposed and conducted by individual researchers or groups of researchers, in all areas of health.

In 2009, CIHR released its Health Research Roadmap, a five-year plan that introduced a bold vision to reform the peer review and open funding programs. Beginning in 2010, CIHR started the process of reforming its investigator-initiated research programs, including the OOGP, and the related peer review processes. These reforms were informed by significant contributions and were influenced, in particular, by three main lines of evidence:

Data from a poll of the scientific community: a 2010 Ipsos Reid poll conducted for CIHR found strong support in the research community for fixing a peer review system that was perceived as 'lacking quality and consistency'.

Figure 1. Stakeholder Satisfaction - Peer Review (Percent of respondents who provided an opinion), Results from 2010 IPSOS Reid Study

Long description

Survey conducted by Ipsos Reid (2010) showing CIHR stakeholder satisfaction in Peer Review. ("Satisfied" category includes very satisfied, somewhat satisfied and neutral; "Dissatisfied" category includes somewhat dissatisfied and very dissatisfied). Based on the percent of respondents who provided an opinion:

- 79% of peer reviewers were satisfied with the efficiency of the peer review process, while 17% of peer reviewers were dissatisfied with the efficiency of the peer review process

- 70% of peer reviewers were satisfied with the fairness of the peer review process, while 26% of peer reviewers were dissatisfied with the fairness of the peer review process

- 54% of applicants and grantees were satisfied with the quality of peer review judgements, while 44% of applicants and grantees were dissatisfied with the quality of peer review judgements

- 40% of applicants and grantees were satisfied with the consistency of peer review judgements, while 58% of applicants and grantees were dissatisfied with the consistency of peer review judgements

- 40% of institutional stakeholders were satisfied with the consistency of peer review judgements, while 48% of institutional stakeholders were dissatisfied with the consistency of peer review judgements

A recommendation of CIHR's second International Review Panel in 2011:

'CIHR should consider awarding larger grants with longer terms for the leading investigators nationally. It should also consolidate grants committees to reduce their number and give them each a broader remit of scientific review, thereby limiting the load'.

Findings from the 2012 evaluation of CIHR's Open Operating Grants Program (OOGP), which recognized challenges in open funding across pillars of research and supported the need to reduce peer review and applicant burden.

CIHR conducted several rounds of consultations with its stakeholder communities prior to and throughout the reforms process.Footnote 4 The earliest consultations, which led to the proposed design, identified a number of challenges with CIHR's existing funding architecture and peer review processes,Footnote 5 and the resulting redesign was intended to address those challenges. The challenges included:

- Funding program accessibility and complexity;

- Applicant burden/"churn";

- Application process / attributes do not capture the correct information;

- Insufficient support for new/early career investigators;

- Researchers and knowledge user collaborations not fully valued;

- Lack of expertise availability;

- Unreliability/inconsistency of reviews;

- Conservative nature of peer review;

- High peer reviewer workload.

CIHR moved to a new system of investigator-initiated grants that includes a number of design elements intended to respond directly to these challenges, as identified through stakeholder input. These changes were piloted and implemented over the 2010-2016 period; key milestones are outlined in Figure 2 below.

Under the new open suite of programs, the majority of CIHR's investigator-initiated research funding is now awarded through the Foundation Grant Program and the Project Grant Program.Footnote 6 That said, it is important to note that CIHR also funds awards and priority-driven grants through other funding mechanisms and peer review processes that are not discussed in this paper.

The objectives of the new open suite of programs are as follows:

- Foundation Grant Program (one competition per year) - designed to contribute to a sustainable foundation of health research leaders by providing long-term support for the pursuit of innovative, high-impact programs of research;

- Project Grant Program (two competitions per year) - designed to capture ideas with the greatest potential for important advances in health-related knowledge, the health care system, and/or health outcomes, by supporting projects with a specific purpose and defined endpoint; and,

- College of Reviewers (College) - the vision of the College is to establish an internationally recognized, centrally managed resource that engenders a shared commitment across the Canadian health research enterprise to support excellence in peer review of the diverse and emerging health research and knowledge translation activities that span the spectrum of health research. The College is intended to be a national resource that, over time, will serve the peer review needs of CIHR and its partners. The implementation of the College will ensure:

  - A more stable base of experienced reviewers from Canada and abroad;

  - Collaborative approaches to peer review across health research funding organizations;

  - Inclusion of quality assurance mechanisms;

  - Systematic recruitment processes;

  - Ongoing education resources and mentorship programs; and,

  - A valued recognition program.

The reforms have met with a mixed reaction from Canada's health research community.

Figure 2. Timeline of CIHR Reforms process

Long description

2009: Health Research Roadmap released.

2010:

- Review of health research practices conducted.

- Development and discussion of new program design concepts begins.

- CIHR Task Force established to oversee design/implementation.

2011: CIHR's International Review Panel report published.

2012:

- The new design announced: Designing for the Future: The New Open Suite of Programs and Peer Review Process.

- Evaluation of the Open Operating Grants Program released.

2009-2012: Consultations held through cross-country town hall meetings, surveys and other means, engaging CIHR Institutes and their research communities, CIHR's University Delegates, Chairs and Scientific Officers of Peer Review Committees, and CIHR Partners.

2013: 2014 Foundation Grant Funding Opportunity announced.

2014:

- Establishment of Interim College of Reviewers Advisory Committee.

- First Foundation Grant "live pilot" competition launched.

2015:

- First Project Grant Program "live pilot" competition launched.

- Second Foundation Grant "live pilot" competition launched.

2016:

- CIHR announces inaugural College of Reviewers Chairs.

- Stakeholder engagement: July 13th Workshop with the Research Community.

- Peer Review Working Group established (Chair: Dr. Paul Kubes).

- Implementation of recommendations of Peer Review Working Group.

- Interim Iterative Peer Review Process for Indigenous Health Research Applications in the Fall 2016 Project Grant Competition.

- CIHR announces Peer Review Expert Panel.

3. Overview of the Design (Panel Q1)

The purpose of this section is to provide an overview of the reports and processes that informed the new funding program design, and of how the design of the Foundation and Project Grant Programs relates to the former CIHR Open Operating Grants Program. The resulting application processes for the Foundation Grant Program and Project Grant Program are illustrated in Figures 3 and 4 below.

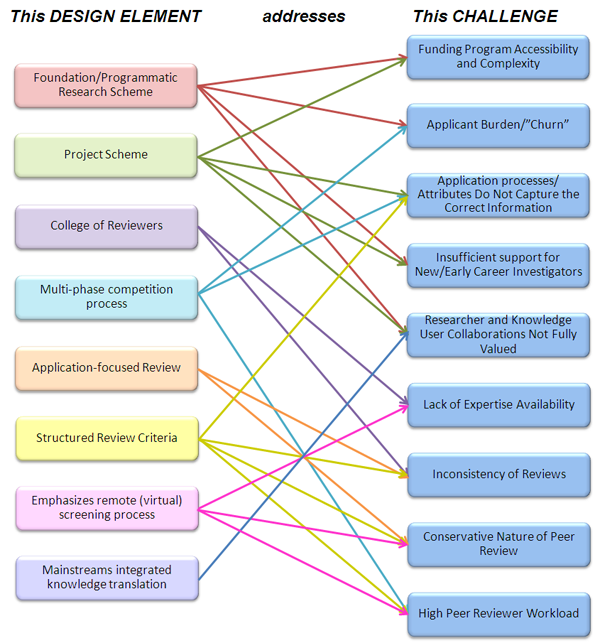

The Foundation and Project Grant Programs were designed to respond to the challenges outlined earlier. The specific design elements associated with these programs, and how each responds to one or more of these challenges, are shown below in Figure 5 and discussed more fully in the following seminal documents:

- Design Discussion Document - Proposed Changes to CIHR's Open Suite of Programs and Enhancements to the Peer Review Process. This document, released in March 2012, provides a description of CIHR's thinking on the newly proposed design. It defines a set of funding mechanisms for the new Open Suite of Programs and a set of design parameters that were tabled for implementation.

- What CIHR Heard: Analysis of Feedback on the Design Discussion Document. This document, released in August 2012, provides an account of the feedback received from CIHR's research community regarding the proposed changes to the Open Suite of Programs and peer review process. This feedback was obtained through cross-country consultations about the design.

- Designing for the Future: The New Open Suite of Programs and Peer Review Process. This document, released in December 2012, outlines the key elements of the design of the new Open Suite of Programs and peer review processes. It also signals CIHR's commitment to a clear plan for transitioning to the new design and CIHR's intent to pursue an iterative design and implementation process, including pilot studies.

Figure 3. Schema of Foundation Grant Program Competition Cycle

Long description

The competition process begins at Stage 1 with the applicant submitting a registration followed by a structured application. CIHR conducts an eligibility check and matches applications to reviewers. The reviewers identify their conflicts and their ability to review for a subset of applications. CIHR establishes the assignment of applications to reviewers; approval of the assignments is done by the Competition Chairs. The Competition Chairs assist CIHR in filling expertise gaps by recommending additional reviewers. The reviewers conduct the preliminary reviews of their assigned applications; the review process is monitored by the Competition Chairs via the online discussions. The reviewers complete their final reviews and determine the final rank list of their assigned applications. CIHR then establishes the consolidated rank of all the applications and determines the subset of applications that move to Stage 2.

Applicants who move on to Stage 2 are invited to submit a Stage 2 structured application, which will include a baseline budget amount calculated and provided by CIHR. Stage 2 applications then go through the same review process outlined above. CIHR once again calculates a consolidated ranking for each application and compiles a list of all applications in the competition, ranked from highest (“green zone”) to lowest (“red zone”), to determine which of the Stage 2 “grey zone” applications (i.e., those applications that are close to the funding cut-off and that demonstrate a high degree of variability in reviewer assessments) should be discussed at the Final Assessment Stage (FAS or Stage 3). The FAS review committee is composed of the Stage 2 Competition Chairs.

As part of the FAS, prior to the face-to-face meeting, reviewers identify their conflicts and ability to review for a subset of applications. FAS reviewers then have access to information on the applications assigned to them, including Stage 2 reviews, consolidated rankings, standard deviations, and the full applications. FAS reviewers use a binning system to differentiate between applications by assigning them to a “Yes” bin (to be considered for funding) or “No” bin (not to be considered for funding), and submit their recommendations to CIHR prior to the meeting.

At the face-to-face meeting, the multidisciplinary committee identifies, discusses, and votes on the “grey zone” (also known as Group B) applications they recommend for funding. In cases where a discrepancy in the budget still exists, the budget is reconciled by the Foundation Budget Subcommittee. After the Final Assessment Stage, CIHR reviews the recommendations from the FAS and the Foundation Budget Subcommittee to make its final funding decisions; a notice of decision is provided to all applicants.
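The consolidated ranking, grey-zone selection and FAS binning steps described above can be illustrated with a small sketch. This is a hypothetical Python illustration only: this document does not specify how CIHR converts individual reviewer rankings into a consolidated ranking, where the funding cut-off falls, or what counts as a "high degree of variability". The normalization (a reviewer's rank divided by the number of applications that reviewer ranked), the use of the mean and standard deviation, the `window` and `spread_threshold` parameters, and all names below (`Application`, `zone_applications`, `tally_yes_bins`) are assumptions made for the example.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class Application:
    """One application and the normalized ranks it received (hypothetical model)."""
    app_id: str
    # Each value is rank / (number of applications that reviewer ranked),
    # so smaller is better. This normalization is an assumption.
    normalized_ranks: list[float]

    @property
    def consolidated(self) -> float:
        # Hypothetical consolidated score: mean normalized rank across reviewers.
        return mean(self.normalized_ranks)

    @property
    def spread(self) -> float:
        # Reviewer disagreement, measured as population standard deviation.
        return pstdev(self.normalized_ranks)


def normalize(rank: int, n_ranked: int) -> float:
    """Convert a reviewer's rank (1 = best) into a fraction of that reviewer's list."""
    return rank / n_ranked


def zone_applications(apps: list[Application], n_fundable: int,
                      window: int, spread_threshold: float) -> dict[str, list[str]]:
    """Split applications into green, grey and red zones.

    n_fundable: number of applications the budget could fund (the cut-off).
    window: positions on either side of the cut-off that count as "close".
    spread_threshold: minimum reviewer disagreement for the grey zone.
    All three parameters are illustrative assumptions.
    """
    ordered = sorted(apps, key=lambda a: a.consolidated)  # best first
    zones: dict[str, list[str]] = {"green": [], "grey": [], "red": []}
    for position, app in enumerate(ordered):
        near_cutoff = n_fundable - window <= position < n_fundable + window
        if near_cutoff and app.spread >= spread_threshold:
            zones["grey"].append(app.app_id)   # to be discussed at the FAS
        elif position < n_fundable:
            zones["green"].append(app.app_id)  # above the cut-off
        else:
            zones["red"].append(app.app_id)    # below the cut-off
    return zones


def tally_yes_bins(bins_by_app: dict[str, list[str]]) -> dict[str, int]:
    """Count how many FAS reviewers placed each application in the "Yes" bin."""
    return {app_id: sum(b == "Yes" for b in bins)
            for app_id, bins in bins_by_app.items()}


if __name__ == "__main__":
    sample = [
        Application("A", [0.1, 0.2]),
        Application("B", [0.2, 0.3]),
        Application("C", [0.1, 0.7]),  # reviewers disagree strongly
        Application("D", [0.3, 0.6]),
        Application("E", [0.8, 0.9]),
    ]
    print(zone_applications(sample, n_fundable=2, window=1, spread_threshold=0.1))
    print(tally_yes_bins({"C": ["Yes", "No", "Yes"]}))
```

With these made-up inputs, application C sits just below the cut-off and its two reviewers disagree strongly, so the sketch flags it for grey-zone discussion, while B (also near the cut-off, but with consistent reviews) stays in the green zone. A real process would also need to handle ties, differing numbers of reviewers per application and the separate Stage 1 and Stage 2 rankings, which this sketch ignores.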

Figure 4. Schema of Project Grant Program Competition Cycle

Long description

The competition process begins with the applicant submitting a registration followed by a full application. CIHR conducts an eligibility check and matches applications to peer reviewers. The reviewers identify their conflicts and their ability to review for a subset of applications. CIHR establishes the assignment of applications to reviewers; approval of the assignments is done by the Competition Chairs. The Chairs, in consultation with Scientific Officers, assist CIHR in filling expertise gaps by recommending additional reviewers. The reviewers conduct the preliminary reviews of their assigned applications; the review process is monitored by the Chairs and Scientific Officers. The reviewers complete their final reviews and determine the final rank list of their assigned applications. CIHR then establishes the consolidated rank of all the applications and determines the subset of applications that move to Final Assessment Stage (Stage 2) along with the Stage 1 reviewers who will participate in the face-to-face cluster-based panel meetings. The reviewers are asked to declare their conflicts on the applications to be assessed by their panels and complete pre-meeting activities. Reviewers discuss the applications during the face-to-face panel meetings. The Chairs moderate the panel discussions and the Scientific Officers take notes of the discussions. CIHR makes funding decisions based on the panels’ recommendations. Notices of decision are provided to all the applicants and the funds are released to successful applicants.

Figure 5. Design elements of the new programs and how they were intended to address identified challenges

Long description

The figure above shows how each of the proposed design elements for the New Open Suite of Programs and Peer Review Enhancements addresses the multiple challenges that CIHR's stakeholders identified with the current competition and peer review processes. For instance:

The Foundation/Programmatic Research Scheme addresses:

- Funding Program Accessibility and Complexity

- Applicant Burden/"Churn"

- Insufficient support for new/early career investigators

- Researcher and Knowledge User collaborations not fully valued

The Project Scheme addresses:

- Funding Program Accessibility and Complexity

- Application process/attributes do not capture the correct information

- Insufficient support for new/early career investigators

- Researcher and Knowledge User collaborations not fully valued

The College of Reviewers addresses:

- Lack of expertise availability

- Inconsistency of reviews

The multi-phase competition process addresses:

- Applicant Burden/"Churn"

- Application process/attributes do not capture the correct information

- High Peer Reviewer Workload

Application-Focused Review addresses:

- Unreliability/Inconsistency of reviews

- High Peer Reviewer Workload

Structured Review Criteria addresses:

- Application process/attributes do not capture the correct information

- Inconsistency of Reviews

- Conservative Nature of Peer Review

- High Peer Reviewer Workload

The remote (virtual) screening process addresses:

- Lack of expertise availability

- Conservative Nature of Peer Review

- Inconsistency of reviews

Mainstreaming integrated knowledge translation addresses:

- Researcher and Knowledge User collaborations not fully valued

As Figure 5 shows, the design elements deliberately respond to multiple challenges. Table 1 compares these design elements with those of the former Open Operating Grants Program and reflects the current state of the Foundation and Project Grant Program competitions.

Table 1. Design element differences across Open Operating (OOGP), Foundation, and Project Grant Programs

| Element | OOGP | Foundation Grant Program | Project Grant Program |

|---|---|---|---|

| Funding program accessibility and complexity: In addition to the large volume OOGP, CIHR had created a number of small, purpose-built funding mechanisms that (as CIHR's 2011 International Review Panel notes) resulted in an increasingly complex mixture of programming over time. Researchers were challenged with trying to fit their research projects into existing (and sometimes inconsistent) program criteria, and were sometimes required to apply to multiple programs to support a single program of research. | |||

| Number of funding programs | Ran alongside over 10 bespoke open funding programs related to, for example, knowledge translation and commercialization. This sometimes required researchers to apply to multiple programs to support a single program of research. | A single funding program to support long-term programs of research which mainstreams knowledge translation and other areas once supported by specialized programs. | A single funding program supportive of research projects with a defined end point which mainstreams knowledge translation and other areas once supported by specialized programs. |

| Number of launches of the competitions per year | 2 competitions (>10 competitions for the bespoke funding programs) | 1 competition | 2 competitions |

| Applicant burden/"churn": There was an increasing trend for CIHR-funded researchers to submit multiple applications to open grant competitions to support a single program of research. With time spent writing and applying for multiple grants, researchers complained that there was little time left to conduct research. An increase in applicant churn over the past five years led CIHR's 2011 International Review Panel to recommend that "CIHR [should consider] awarding larger grants with longer terms for the leading investigators nationally". | |||

| Supportive of programs of research? | Yes, funded both programs and projects. No specialized criteria for programs. | Yes. Programs of research required. Programs of research are expected to include integrated, thematically-linked research, knowledge translation, and mentoring/training components. | No. Designed for projects with a defined end-point. |

| Mean and maximum grant duration | Mean duration of an OOGP Grant from 2006-07 to 2013-14 was 4 years, but there was no stated maximum. | Mean duration of a Foundation Grant from 2014-15 to 2015-16 was 6.5 years, with a maximum of 5 years for new/early career investigators and a maximum of 7 years for mid/senior career investigators. | Mean duration of a Project Grant in 2016-17 was 4 years; there is no stated maximum. |

| Structured application (e.g., sections, character limits, page limits) requirements? | Unstructured research module with a limit of up to 13 pages, and a Canadian Common CV (CCV) module. | Yes, structured application with character limits and abbreviated CCV module. | Originally, structured application with character limits and abbreviated CCV module. As of July 2016, changed to a free-form proposal (including figures and tables) with a 10-page limit and an enhanced CCV module. A one-page "response to past reviews" will also be included in the revised structure to give applicants the opportunity to explain how the application was improved since the previous submission. |

| Limit on the number of applications/investigator? | No limit to the number of applications an individual could submit as a Nominated Principal Investigator, or to the number of applications for which an individual could be a Principal Investigator or a Co-Applicant, as long as these applications were different/non-overlapping. | An individual may hold only one Foundation Grant in the role of program leader at any one time. A Foundation Grant program leader is not eligible to apply to the Project Grant competition as a nominated principal investigator or principal investigator. There is no restriction on Foundation Grant program leaders participating in the Project Grant competition as co-applicants or collaborators, or in any of CIHR's Priority Driven programs as nominated principal investigators or principal investigators. | Originally, restrictions were placed on the number of applications per investigator across the Foundation and Project Grant Programs to reduce applicant and reviewer burden. After receiving feedback from the research community, CIHR lifted the restriction on the number of applications that an individual could submit to the Project Grant competition. Following the work of the Peer Review Working Group in July 2016, a limit of two applications per individual was reinstated. CIHR also lifted the restriction on individuals applying to both the Foundation Grant and Project Grant competitions; researchers are able to apply for funding through both programs. The following policies apply: |

| Limit to the number of re-applications? | No. | No. | No. |

| Application process/attributes do not capture the correct information: The number of funding mechanisms resulted in CIHR having multiple application forms and/or processes. In managing this proliferation of funding mechanisms, the information captured in the applications was matched to the peer review criteria, but both were unstructured, which led to inconsistency in what applicants addressed and in how reviewers applied the criteria. | |||

| Application requirements tied to multi-stage application process? | No. All application details provided at once. | Yes, different application requirements for Stage 1 and Stage 2. A multi-phased competition process with short Stage 1 applications to reduce the amount of work required to complete an application. | No. All application details provided at once. |

| Support for new/early career investigators: CIHR recognizes the role that new/early career investigators play in creating a sustainable foundation for the Canadian health research enterprise. | |||

| Specialized peer review criteria for new/early career researcher applicants? | Evaluation criteria required reviewers to assess the career stage of the applicants. | Evaluation criteria required reviewers to assess the career stage of the applicants. In addition, there were detailed interpretation guidelines provided for review of new/early career researchers. | Evaluation criteria required reviewers to assess the career stage of the applicants. In addition, there were detailed interpretation guidelines provided for review of new/early career researchers. |

| Separate ranking of new/early career researcher applicants? | No. | Yes, for the 2015 Foundation Grant competition, new/early career investigators were assessed separately and ranked against other new/early career investigators at each stage of the competition. For the 2016 Foundation Grant competition, cohorts were combined across career stages because there was insufficient application pressure from new/early career investigators to assess and rank this cohort separately. | No. |

| Protected funding for new/early career investigator applicants? | No. | Yes, at least 15% of the total number of grants awarded to new/early career researchers. | Yes, up to $30M per competition of protected funding for new/early career researchers. |

| Researchers and knowledge user collaborations not fully valued: Over the years, CIHR had launched funding mechanisms that signalled its commitment to knowledge translation and began to build capacity in this area. Integrating knowledge translation brought researchers and knowledge users together to shape the research process, and was found to produce research findings that were more likely to be relevant to, and used by, knowledge users. However, CIHR's Open Operating Grants Program was not designed to actively incentivize partnerships and collaborations with knowledge users/decision makers. | |||

| Mainstreaming of integrated knowledge translation approaches | Knowledge users could not be the Principal Investigator. They could be involved in other roles, including as co-investigator or collaborator, but this occurred relatively infrequently and led to peer review confusion and inconsistencies. | Yes, Foundation and Project Grant applications may include an integrated knowledge translation approach or may have a knowledge translation focus, with at least one knowledge user and one researcher. Knowledge users may also be the Nominated Principal Investigator on either Foundation or Project Grants. CIHR defines a knowledge user as an individual who is likely to be able to use the knowledge generated through research to make informed decisions about health policies, programs and/or practices. A knowledge user can be, but is not limited to, a practitioner, policy-maker, educator, decision-maker, health care administrator, community leader, or an individual in a health charity, patient group, private sector organization or media outlet. CIHR defines integrated knowledge translation as a way of doing research with researchers and knowledge users working together to shape the research process, starting with collaborations on setting the research questions, deciding the methodology, being involved in data collection and tools development, interpreting the findings and helping disseminate the research results. | Yes (see Foundation Grant Program column, which applies to both programs). |

| Lack of expertise availability: As the nature and diversity of health research evolved and continues to evolve, there is a growing need for CIHR to recruit peer reviewers from a broader base of expertise to ensure all aspects and future impacts of health research are considered. Some researchers have expressed concerns that CIHR's current pool of experts may not possess the disciplinary expertise to review all types of applications. Researchers from multidisciplinary, emerging, and established fields have expressed difficulty in identifying the most appropriate CIHR peer review committees to review their research. As well, it is becoming increasingly difficult for CIHR to fit certain applications into discipline-based review committee mandates. | |||

| College of Reviewers | No. | Planned; in the process of being implemented. | Planned; in the process of being implemented. |

| Applications assigned to standing committees? | Yes. | No, application-focused review. | No, application-focused review. |

| Application-focused review approach (i.e., content of application determines reviewer recruitment and selection)? | No, applications were reviewed by standing committees, within which reviewers were assigned on basis of content of the application, with the periodic use of external reviewers. | Yes. | Yes. |

| Self-assessment of Conflicts of Interest | Yes. | Yes. | Yes. |

| Self-assessment of Ability to Review applications | Yes, global rating of ability to review (High, Medium, Low, No ability to review). | Yes, global rating of ability to review (High, Medium, Low, No ability to review). | Yes. As of the Fall 2016 Project Grant competition, reviewers rate their ability to review the entire application, or certain elements of it, on each of: the area of science, the methodology, and the population being studied (fully review, partially review, or not review at all due to a conflict or insufficient expertise). |

| Profiles of reviewer expertise? | Yes, through use of unstandardized expertise key words. | Yes, standardized process with detailed reviewer profiles submitted by reviewers for retention by CIHR for matching purposes. | Yes, as of Fall 2016 Project Grant competition, a standardized process with detailed reviewer profiles submitted by reviewers for retention by CIHR for matching purposes. |

| Matching of applications to reviewers | CIHR staff made final assignment decisions in consultation with the Committee Chair and Scientific Officer. | CIHR staff make assignments, in consultation with the Virtual Chairs. | CIHR staff make assignments, which are then validated and approved by Competition Chairs. |

| Remote/virtual screening processes | No. | Yes, for Stage 1. | Yes, for Stage 1. |

| Unreliability/ inconsistency of reviews: Results from a survey conducted for CIHR's 2011 International Review on Stakeholder Satisfaction in Peer Review showed that, while CIHR's peer reviewers found the process to be fair and efficient, applicants and institutional stakeholders believed there was room for improvement. | |||

| Training for peer reviewers | No structured training, but a general Peer Review Guide was made available. | Yes, mandatory. Also a detailed Peer Review Manual for review of Foundation Grants. | Yes, optional. Also a detailed Peer Review Manual for review of Project Grants. |

| Number of reviewers per application | Typically 2 reviewers + a reader per application. | Average of 5 reviewers per application across Stages 1 and 2. | Average of 4 reviewers per application. |

| Mechanisms for discussion between reviewers | Yes, committee structure provided an opportunity to present and discuss reviews. | Yes, online at Stages 1 and 2; in person at the Final Assessment Stage. | Yes, in person at the Final Assessment Stage (Stage 2). The Project Grant Program was originally designed to include asynchronous online discussion among reviewers; however, this was removed for the Fall 2016 Project Grant competition on the recommendation of the Peer Review Working Group. |

| Scoring or ranking of applications? | Scoring on a 5-point scale. The Primary and Secondary reviewers agreed on a consensus score, which became the benchmark for all other committee members' scores (each to fall within +/- 0.5 of the consensus score). | Ranking within reviewers' own assignments followed by a consolidated ranking across reviewers. | Ranking within reviewers' own assignments followed by a consolidated ranking across reviewers. |

| Review quality assurance process? | Informal; occurred through the presentation of reviews to committees and the role of the Scientific Officer. A 2014 CIHR Audit examined review quality. Its objective was to assess the quality of the feedback applicants receive in relation to the evaluation criteria, and to provide a baseline assessment of how peer reviewers adhere to the evaluation criteria provided by CIHR. | Informal. Virtual Chairs are responsible for monitoring review quality. A formal review quality assurance framework is under development in collaboration with the College of Reviewers. | Informal. Competition Chairs and Scientific Officers are responsible for monitoring review quality. A formal review quality assurance framework is under development in collaboration with the College of Reviewers. |

| Conservative nature of peer review: Based on the nature and diversity of the applications it receives, CIHR has witnessed an increase over the years in new, emerging areas of health research, including work that spans multiple research disciplines. Feedback from the research and stakeholder communities suggested that existing committee structures, which had equal success rates and relatively unchanging mandates, favoured established approaches. In an environment where only a small proportion of applications were funded, there was also less incentive and comfort to accept riskier, unproven areas of research. These two factors led to a conservative peer review system. | |||

| Anonymity of applicants to peer reviewers? | No. | No. | No. |

| Anonymity of peer reviewers to applicants? | No, names of peer reviewers involved in the competition were released 60 days after Notices of Decision were issued. Reviewer names were not linked to the specific applications they reviewed but were linked to committees. | No, names of peer reviewers involved in the competition are released 60 days after Notices of Decision are issued. Reviewer names are not linked to the specific applications they reviewed. | No, names of peer reviewers involved in the competition are released 60 days after Notices of Decision are issued. Reviewer names are not linked to the specific applications they reviewed. |

| Innovation rewarded through peer review criteria? | Partially, through an unstructured and unweighted peer review criterion related to "originality". | Yes, through structured review criteria. | Yes, through structured review criteria. |

| High peer reviewer workload: The increase in the number of applications received, including resubmissions, increased the number of reviewers CIHR needed to deliver on its mandate. The majority of reviewers were expected to travel to Ottawa, and reviewers collectively had to assess more than 6,500 increasingly long applications within a short period of time, resulting in reviewer fatigue. | |||

| Multi-phased application process? | No. | Yes. | Yes. |

| Number of applications per reviewer | 3 as primary reviewer, 3 as secondary reviewer, 3 as reader | 10 as primary reviewer | 10 as primary reviewer |

| Number of weeks allotted for review (weeks between applications being given to reviewers and when reviews were due) | ~5 weeks | 2014 Foundation: 3.7 weeks at Stage 1 and 3.9 weeks at Stage 2; 2015 Foundation: 2.4 weeks at Stage 1 and 3.4 weeks at Stage 2 | 4.4 weeks |

| Average number of hours spent doing peer review per application | 7.9 hours | 5.0 hours (across 3 stages of review) | 4.9 hours (across 2 stages of review) |

| Total number of hours spent doing peer review per reviewer | 30.3 hours | 24.8 hours | 15.1 hours |
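For both new programs, the design table lists "ranking within reviewers' own assignments followed by a consolidated ranking across reviewers". It does not say how the consolidation is computed. The following is a minimal illustrative sketch, not CIHR's documented method. It assumes that each reviewer's ranks are converted to a relative position within that reviewer's own assignment (0 = best, 1 = worst) and then averaged per application. The function name `consolidate_rankings` and the input format are hypothetical.

```python
from collections import defaultdict


def consolidate_rankings(reviewer_rankings):
    """Combine per-reviewer rankings into one consolidated order.

    reviewer_rankings maps a (hypothetical) reviewer ID to a list of
    application IDs ordered best first. ASSUMPTION: each rank is turned
    into a 0-1 position within that reviewer's own list so reviewers with
    different assignment sizes are comparable; positions are then averaged
    per application. Illustrative only, not CIHR's actual algorithm.
    """
    positions = defaultdict(list)
    for ranked_apps in reviewer_rankings.values():
        n = len(ranked_apps)
        for rank, app_id in enumerate(ranked_apps):
            # A reviewer with a single application gives it position 0.0.
            positions[app_id].append(rank / (n - 1) if n > 1 else 0.0)
    mean_position = {app: sum(p) / len(p) for app, p in positions.items()}
    # Lower mean position is better; ties are broken by application ID.
    return sorted(mean_position, key=lambda app: (mean_position[app], app))


if __name__ == "__main__":
    example = {
        "R1": ["A", "B", "C"],
        "R2": ["B", "A", "D"],
        "R3": ["C", "D", "A"],
    }
    print(consolidate_rankings(example))  # ['B', 'A', 'C', 'D']
```

Normalizing by assignment size is one way to keep a first-place rank from a reviewer with ten applications comparable to one from a reviewer with fewer. Other consolidation rules, such as averaging raw ranks or using scores, would give different orders.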

4. Foundation and Project Grant Program Implementation Process (Panel Qs 2, 3, 4)

The purpose of this section is to provide an overview of the documents and processes that informed ongoing implementation of the design and related peer review processes.

Since 2013, CIHR has piloted specific design elements associated with the new programs, including structured applications, remote review, rating scale, and streamlined CV. These pilots were conducted in a 'live' manner (i.e., piloted during the course of routine program delivery across several programs). Pilots were conducted so that CIHR could monitor outcomes in an evidence-informed manner. The pilots were closely scrutinized to safeguard the reliability, consistency, fairness and efficiency of the competition and peer review processes. The results from pilots completed to date have been analyzed and consolidated into the following reports:

In 2015, CIHR commissioned several reviews of different aspects of the reforms implementation project. CIHR management initiated these reviews to assess CIHR's internal systems and processes for implementing the reforms, and to allow timely adjustments on a project of this complexity. The reports included:

- The Internal Audit Consulting Engagement Report looked at lessons learned from the Reforms Implementation Project. It was completed by the CIHR Internal Audit Group. The report focused on governance and administrative practices linked to project management and to the internal reorganization needed to deliver the new Foundation and Project Grant programs. The report found that the reforms implementation project benefited from well-developed planning tools and that the pilots were rolled out on time. The report also found opportunities for improvement in the areas of information-sharing, communications, reporting, project planning, and stakeholder engagement, all of which are being addressed by CIHR.

- The Reforms CRM Project Independent Third Party Review by Interis Consulting was specifically designed to assess the implementation of the business systems required to support the new program delivery processes. CIHR sought expert recommendations on implementing complex, transformative business systems; the opportunities for improvement identified included clarifying roles and responsibilities, project schedules, and scope. CIHR is currently taking steps to implement the report's recommendations, such as establishing new governance committees to monitor scope and timelines for the projects.

- The CIHR Management Response, released in May 2016, addresses the recommendations in both reports. These two reviews gave management feedback while the new managerial and business systems were being implemented, which allowed for course corrections. CIHR continues to implement the reports' recommendations and is undertaking the necessary steps to address project management challenges.

These reviews identified a number of internal strengths, most notably that:

- key planning activities were properly undertaken;

- document and planning tools were well developed;

- the pilots occurred during the specified timeframes, thanks to a dedicated project team; and,

- employee engagement in the pilots resulted in greater dedication and commitment during times of change.

The reviews also identified a number of opportunities for improvement, involving five areas:

- Governance;

- Communications and stakeholder engagement;

- Information for senior level committee decision-making;

- Project management and planning; and,

- Internal stakeholder engagement and impact on personnel.

CIHR accepted the recommendations of the reviews and, in response, is taking the actions listed below in Table 2.

Table 2. Areas for action and corresponding CIHR response following CIHR Internal Reviews, 2015

| Action Area | Response from CIHR |

|---|---|

| Governance | The overall governance and decision-making structure of the pilots and reforms implementation has been re-examined to ensure it is still appropriate to CIHR as it enters the final stage of the implementation. As part of this process, CIHR has examined its current governance culture, focusing on how decisions are challenged and planning assumptions are made. This has led to the creation of a new governance structure to better support IT infrastructure. The governance and decision-making structure will continue to be reviewed regularly moving forward. |

| Communications and stakeholder engagement | CIHR is reviewing its communications strategy and practices to enhance public communications related to the pilots and reforms implementation. This re-examination will reinforce strategic elements of the reforms implementation; further engage Scientific Directors and Institutes; and integrate experts from the Communications and Public Outreach branch, the Research, Knowledge Translation and Ethics portfolio, and the External Affairs and Business Development portfolio. This includes the creation of a new centralized Contact Centre, as well as a dedication to adopting new ways to communicate and work together, ensuring greater clarity and transparency. |

| Information for senior level committee decision-making | Overall reporting and governance operations surrounding the Reforms Implementation Steering Committee and Task Force have been re-examined. These operations have been improved to better support Committee roles and responsibilities, as defined in their Terms of Reference, and to provide relevant, analyzed, and timely data for decision making. CIHR is also adjusting its change management processes to include a more fulsome analysis of operational resource and cost impacts prior to proceeding with any change. The Steering Committee has been reconstituted, will meet bi-weekly, and will have full oversight of the administrative, financial management, and project planning functions for the reforms. |

| Project management and planning | For future pilots, the Research, Knowledge Translation and Ethics portfolio and the Information Management Technology Services branch have established project management procedures to define business rules surrounding individual pilots. This includes an enhanced change management and control process. CIHR will also incorporate a more fulsome post mortem analysis of each pilot and leverage performance data, systems, and available budget information to better plan for future activities. |

| Internal stakeholder engagement and impact on personnel | CIHR will continue to survey staff to provide additional data on employee engagement. Additionally, CIHR is conducting exit interviews, follow-up discussions, or consultative sessions with individuals who have transitioned out of the pilots to maximize the knowledge transfer of lessons learned. CIHR will also re-examine and adjust current corporate priorities to adapt to the results of the aforementioned assessment. Finally, CIHR will continue to provide training to staff on the use of new IT applications. |

In 2016, CIHR outlined in a report how the 2014 "live pilot" of the Foundation Grant Program would inform subsequent competitions. Table 3, taken from the executive summary of this report, outlines a number of enhancements to the 2015 Foundation Grant "live pilot" competition informed by the 2014 "live pilot" and related survey data from reviewers, applicants, and Competition Chairs.

Table 3. Enhancements implemented in the 2015 Foundation Grant "live pilot" following the 2014 pilot.

| What CIHR Heard | Changes Implemented |

|---|---|

| Stage 1 | |

|

|

A small minority of applicants and reviewers reported:

|

|

|

|

|

Stage 2 | |

|

|

|

|

A majority of applicants and reviewers recommended that the weighting of the sub-criteria be adjusted as follows:

|

The weighting of the sub-criteria has been adjusted:

|

|

|

| Final Assessment Stage | |

|

Operational changes to improve the FAS review process are currently being considered, including:

|

| Ensuring High Quality Reviews | |

A small minority of reviewers suggested that:

|

|

|

|

On July 13, 2016, at the request of the Minister of Health, CIHR hosted a Working Meeting with members of the health research community to review and jointly address concerns raised regarding CIHR's peer review processes, particularly associated with the Project Grant Program. Table 4 outlines the key outcomes of that meeting and complements the full report of the meeting.

Table 4. Action areas and requested changes to the 2016 Project Grant competition (Fall launch) following the July 13th, 2016 Working Meeting of CIHR and its research community

| Action Area | Requested Changes to 2016 Project Grant Competition |

|---|---|

| Appropriate Review of Indigenous Health Research Applications |

|

| Applications |

|

| Stage 1: Triage |

|

| Stage 2: Face-to-Face Discussion |

|

| Establishment of a Peer Review Working Group |

|

The Peer Review Working Group was established as an outcome of the July 13th, 2016 Working Meeting between CIHR and representatives from its research community. Under the leadership of Dr. Paul Kubes, the Peer Review Working Group discussed each of the July 13th Working Meeting outcomes and made recommendations for action, outlined in Table 5. The CIHR response to these recommendations is outlined in Table 6. It should be noted that Dr. Kubes has an ongoing role with CIHR as the College of Reviewers Executive Chair.

Table 5. Recommendations from the Peer Review Working Group for the 2016 Project Grant competition

| July 13 Working Meeting Outcome | Peer Review Working Group Recommendation | Rationale |

|---|---|---|

| Applications | ||

| Applicants will be permitted to submit a maximum of two applications to each Project Grant competition. |

|

The rationale is to reduce the burden on reviewers (while most applicants submitted one or two applications, some NPIs submitted more, with one submitting seven applications in the last Project Grant competition). However, we know that some researchers may need to renew more than one grant, so a maximum of two applications per competition was deemed reasonable. |

| The existing page limits for applications will be expanded to 10 pages (including figures and tables) and applicants will be able to attach additional unlimited supporting material, such as references and letters of support. |

|

We have received a lot of feedback from the community on this point: some applicants wanted no changes to the application structure, as they felt that they were close to being funded, while others wanted up to 12 pages. Still others noted that 12 pages was too much of a change, as there would not be enough time to write a whole new application. It is also important to note that many reviewers liked the shorter applications but felt that they needed more information. Overall, a 10-page application structure takes the needs of both reviewers and applicants into account. As the CCV cannot accommodate information about leaves in its current form, applicants will be able to upload a PDF (no page limit) to supplement the CCV information. Applicants may append as much additional time as they have taken off from research in the past seven years (e.g., parental, bereavement, medical, or administrative leave). For example, an applicant who took 1 year of maternity/parental leave within the past 7 years would be able to upload a PDF detailing 1 year of funding and publications beyond the 7-year limit. Drafting a rebuttal is an important scholarly exercise, and the group deemed it appropriate to incorporate one into the competition process. |

| Stage 1 | ||

| Chairs will now be paired with Scientific Officers to collaboratively manage a cluster of applications and assist CIHR with ensuring that high quality reviewers are assigned to all applications. |

|

The Working Group felt strongly about having this human intervention early in the process to ensure that the right experts are assigned to each application.

In addition to drop-down menus of keywords, the Working Group recommended that applicants should also have the opportunity to use an optional "Other" textbox in ResearchNet to make recommendations regarding the type(s) of expertise necessary for evaluating their proposal, highlighting nuances specific to their research community as needed. The group felt that it was important for Chairs and Scientific Officers to receive this information as part of the expertise matching process in order to better evaluate the suitability of matches between applications and reviewers. Note: Instructions will be included in ResearchNet. |

| Each application will receive 4-5 reviews at Stage 1. |

|

There was much discussion and debate amongst the Working Group members regarding the value of having four versus five reviewers, or even dropping down to three. The Working Group deemed three reviewers to be insufficient given the possibility of a reviewer not submitting, which would result in an application only receiving two reviews. Having four reviewers balances reviewer burden with high quality decision-making. The group felt that having four reviewers was a manageable number—especially given the additional oversight measures being put in place to ensure quality review. |

| Applicants can now be reviewers at Stage 1 of the competition. However, they cannot participate in the cluster of applications containing their own application. |

|

This will ensure a greater pool of peer reviewers, without compromising quality and fairness. |

| Asynchronous online discussion will be eliminated from the Stage 1 process. |

|

Asynchronous online discussion has been removed from the competition process. |

| CIHR will revert to a numeric scoring system (rather than the current alpha scoring system) to aid in ranking of applications for the Project Grant competition. |

|

It was agreed that the alpha scoring system caused problems, but the former 0 to 4.9 scale also had issues. The Working Group concluded that a 0-100 scale will increase transparency and enhance understanding of the process for stakeholders, including the public and the research community. It is also important that the public and the government funding source understand that some applications with very high scores go unfunded because of limited funds. A score of 4.3, for example, meant little to the public or government officials.

We heard from a number of reviewers that the structured review format used in the last Project Grant competition hindered their ability to provide cohesive comments. While reviewers will still be expected to discuss the strengths and weaknesses of each application, their comments will no longer be broken down into separate compartments, giving reviewers the space to discuss each application more freely. Working Group members agreed that summarizing an application was important for the reviewer, as it demonstrates that they read and understood the application. |

| Stage 2: Face-to-Face Discussion | ||

| Approximately 40% of applications reviewed at Stage 1 will move on to Stage 2 for a face-to-face review in Ottawa. |

|

We acknowledge that not discussing every application face-to-face makes the community uncomfortable, but given the sheer volume of anticipated applications, we realized that discussing 100% of the applications at the face-to-face committees would not be possible. |

| Stage 2 will include highly ranked applications and those with large scoring discrepancies. |

|

It is important that all applications deemed to be the most promising have the opportunity for detailed discussion at the face-to-face meetings to ensure that the best grants are funded. The Working Group felt that it was important to make sure that grants move forward whether they rank in the top percentage across clusters or within their own cluster. This will allow CIHR to evaluate which of these move-forward rules best identifies the grants that reviewers ultimately recommend for funding.

We recommend that CIHR analyze the predictive capacity of ranking within versus across clusters on the final ranking and/or funding status. |

| Chairs will work with CIHR to regroup and build dynamic panels, based on the content of applications advancing to Stage 2. |

|

The Working Group agreed that building the panels on a per-competition basis would result in a responsive review model. The Working Group was comfortable with a model that would involve approximately 20-30 panels at Stage 2, based on application pressure per competition. |

| Applications moving to the Stage 2 face-to-face discussion will be reviewed by three panel members. A ranking process across face-to-face committees will be developed to ensure the highest quality applications will continue to be funded. |

|

The two reviewers per application in Stage 2 will be chosen based on expertise needed, not on how they ranked grants in Stage 1, in order to minimize bias. In other words, the selection process will be blinded to how the reviewer ranked each grant.

We felt it was important that all reviewers be made aware, when they are invited, that they could be selected to attend the face-to-face meetings. Further, Stage 1 reviewers' names will be made available with their reviews at the face-to-face meetings. We saw this approach as a way to strengthen reviewer accountability throughout the two-stage process. The Working Group recognizes that there is a concern among some members of the research community that applications will not have their full complement of reviewers at the meeting and may be disadvantaged by only bringing two reviewers forward. However, the expectation is that the reviewers invited forward will present all of the reviews, and the Chair/SO will also be asked to play a role in this process. The Working Group therefore recommends that, following the fall grant cycle, CIHR analyze grants' final funding status as a function of the rankings assigned by the original four reviewers, along with which two of the four went to the face-to-face meeting. The majority of members felt that equal success rates across clusters would be the fairest option given the limited funds currently available. Based on feedback from CIHR's Science Council, the group also took into account the fact that face-to-face meetings cost money and that bringing reviewers in to review only one or two grants was not prudent. |

| Chairs, Scientific Officers, and Reviewers | ||

|

||

| Additional Recommendations | ||

|

||
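Table 5 states that approximately 40% of applications reviewed at Stage 1 move on to Stage 2. It also states that Stage 2 includes highly ranked applications and those with large scoring discrepancies. The following is a minimal sketch of such a move-forward rule, under assumptions that are not in the source:
- "highly ranked" means the top 40% by mean Stage 1 score on the 0-100 scale;
- a "large scoring discrepancy" means the gap between the highest and lowest reviewer score exceeds a hypothetical threshold of 20 points;
- discrepant applications are added on top of the top group.

The function name `select_for_stage2` and its parameters are illustrative only.

```python
def select_for_stage2(scores, top_percent=40, discrepancy_threshold=20.0):
    """Return the application IDs that advance to Stage 2 (illustrative).

    scores maps an application ID to its list of Stage 1 reviewer scores
    on a 0-100 scale. ASSUMPTIONS: the top `top_percent` of applications by
    mean score advance, plus any application whose highest and lowest
    scores differ by more than `discrepancy_threshold` points.
    """
    means = {app: sum(s) / len(s) for app, s in scores.items()}
    # Highest mean first; ties broken by application ID for a stable order.
    ordered = sorted(means, key=lambda app: (-means[app], app))
    # Integer ceiling division avoids floating-point rounding at the cut-off.
    n_top = (top_percent * len(ordered) + 99) // 100
    selected = set(ordered[:n_top])
    # Large scoring discrepancies also go forward for discussion.
    for app, s in scores.items():
        if max(s) - min(s) > discrepancy_threshold:
            selected.add(app)
    return sorted(selected)


if __name__ == "__main__":
    stage1 = {
        "A": [90, 88, 92, 85],
        "B": [70, 72, 68, 75],
        "C": [85, 40, 80, 82],  # one reviewer strongly disagrees
        "D": [60, 62, 58, 65],
        "E": [80, 78, 83, 79],
    }
    print(select_for_stage2(stage1))  # ['A', 'C', 'E']
```

In this example, application C would fall outside the top 40% on its mean score alone (71.75). It still advances because its reviewers disagree by 45 points. This is the kind of case the discrepancy rule is meant to bring to face-to-face discussion. Ranking within each cluster rather than across clusters, as the Working Group also discussed, would apply the same rule separately to each cluster's applications.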

Table 6. CIHR implementation of recommendations from the Peer Review Working Group for the CIHR Project Grant competition (Fall 2016)

| July 13 working meeting participants and Peer Review Working Group recommendations | Actions taken from CIHR to implement the recommendations |

|---|---|

| Application | |

| Applicants may submit a maximum of two applications in the role of Nominated Principal Applicant (NPA) per Project Grant competition. | Implemented. |

| The existing page limits for applications will be expanded to 10 pages (including figures and tables) and applicants will be able to attach additional unlimited supporting material, such as references and letters of support. | Implemented. |

| Applications will be assessed based on "significance and impact of the research" (25% of final score), "approaches and methods" (50% of the final score), and "expertise, experience and resources" (25% of final score). | Implemented. |

| The Common CV (CCV) for the Project Grant application will include publications from the past seven years and applicants will be able to upload a PDF to supplement the CCV information if they have taken leaves of absence in the past seven years. | Implemented. |

| A one-page rebuttal will also be included in the revised structure to give the applicant the opportunity to explain how the application was improved since the previous submission. | Implemented. |

| Stage 1 Review | |

| Chairs will now be paired with Scientific Officers to collaboratively manage a cluster of applications and assist CIHR with ensuring that high quality reviewers are assigned to all applications. | Implemented. |

| Reviewer assignments will be approved by the Chairs and Scientific Officers. In addition, Chairs and Scientific Officers will have the ability to remove or add reviewers after the reviewers have completed the new Conflict of Interest/Ability to Review (CAR) assessment for a group of applications. | Implemented. |

| Applicants can make recommendations regarding what types of expertise are required to review their application. | Implemented. |

| Each application will be assigned to four (4) reviewers at Stage 1 | Implemented. |

| Applicants can now be reviewers at Stage 1 of the competition. However, they cannot participate in the cluster of applications containing their own application. | Implemented. Peer review members participate in accordance with the Conflict of Interest and Confidentiality Policy of the Federal Research Funding Organizations. |

| Asynchronous online discussion will be eliminated from the Stage 1 process. | Implemented. |

| CIHR will revert to a numeric scoring system (rather than the current alpha scoring system) to aid in ranking of applications for the Project Grant competition. | Implemented. |

| Stage 2: Face-to-Face Discussion | |

| Approximately 40% of applications reviewed at Stage 1 will move on to Stage 2 for a face-to-face review in Ottawa. | Implemented. |

| The working group recommended that Stage 1 reviewers' comments for applications that don't move on to Stage 2 be reviewed by chairs and SOs to ensure appropriate review. | Implemented. |

| Stage 2 will include highly ranked applications and those with large scoring discrepancies. | Implemented. As per the recommendation, CIHR will monitor the review process to help inform refinements to ranking strategies moving forward. |

| Chairs will work with CIHR to regroup and build dynamic panels, based on the content of applications advancing to Stage 2. | Implemented. Competition clusters will be determined based on the number and types of applications submitted to the competition and may therefore vary from competition to competition. The clusters are expected to remain largely the same from Stage 1 to Stage 2. |

| Applications moving to Stage 2 will be reviewed by two of the original four reviewers from Stage 1 during face-to-face meetings within cluster-based panels in Ottawa. They will be expected to present their own reviews and those of the other two Stage 1 reviewers at the meeting. | Implemented. |

| In order to increase accountability, reviewer names will accompany their reviews to the final assessment stage. | Implemented. |

| The Working Group has recommended ranking within each cluster for Stage 2, as opposed to a ranking across face-to-face committees. | Implemented. |

| Additional recommendations | |

| CIHR grantees will be strongly encouraged to review if invited. The Working Group feels that it is important for grant recipients to give back to the process, if invited. The College Chairs will continue this discussion. | Implemented. |

| Host a face-to-face meeting with all of the Chairs and Scientific Officers in the fall. The Working Group agreed with the proposed selection criteria to choose Chairs, and also felt that it was appropriate for the Chairs to help choose the Scientific Officers, but recommended that CIHR bring everyone together. CIHR is evaluating the feasibility of hosting this meeting for the 2016 competition. | Implemented. |

| Make peer review training mandatory for all reviewers. Completing the training – including a module on unconscious bias – should be a requirement for all reviewers, including seasoned senior investigators, Chairs and Scientific Officers. | All peer reviewers will be invited to complete training and participate in information sessions on the Project Grant review process, including a specific module on unconscious bias. |

| Invite early career investigators (ECIs) to observe the Stage 2 face-to-face peer review meetings. The Chairs in the College of Reviewers have made mentoring ECIs a priority. They will work with CIHR to find opportunities for mentorship—including observing peer review—and will discuss using the Project Grant competition as one of those opportunities, whether it takes place as part of the fall competition or future ones. | Partly implemented. A list of reviewer criteria was defined for the Project Grant competition. The criteria apply to all career stages, and recruitment will include early career investigators to ensure they have the opportunity to acquire the required experience. CIHR, in consultation with the College of Reviewers Chairs, is developing additional mentorship strategies. Details about these strategies will be shared at a later date. |

| Consider a variety of mechanisms related to ensuring equity across different career stages and sex of applicants. The Peer Review Working Group strongly recommended that CIHR continue this conversation with its Science Council, as well. There was agreement that this important topic requires more in-depth consultation and analysis across all CIHR funding programs. | Discussions still under way. |

| There was unanimous support from the Working Group for equalizing success rates for early career investigators (ECIs) in the Project Grant program. Equalizing success rates means ensuring that the success rate for ECIs in a competition matches the overall competition success rate. The Working Group recommended that the additional $30M received in the last budget be used for this purpose, in the same way these additional funds were used in the first Project Grant competition. | Discussions still under way. As noted in April 2016, however, the $30M will be entirely dedicated to the ongoing and future Project Grant competitions, with a focus on early career investigators. |

| Share the appropriate level of data from the Fall 2016 Project Grant competition after the results are released. The Working Group advised CIHR to share this competition data publicly in the spirit of transparency, but also to ensure that the data can be used by CIHR to shape important decisions in the future. | CIHR will share data from the Project Grant competitions, in accordance with privacy laws. (Note: The data from each competition will be shared after the results are released.) |

| CIHR will evaluate the changes implemented and make analyses available as they are conducted (e.g., data about the reviewer profile matching algorithm and its validation). Making these data publicly available, at the finest resolution possible while still protecting privacy and confidentiality, will be instrumental for the future refinement, improvement, and transparency of peer review at CIHR. | CIHR and its Science Council will carefully monitor and evaluate the competition and work with the appropriate advisory bodies to make any necessary changes. CIHR will share progress updates. |

| Recommendations for subsequent Project Grant competitions | |

| Adjust the CCV requirements for non-academic co-applicants, as well as for academic co-applicants who are not appointed at a Canadian institution. The Working Group proposed that such applicants be permitted to upload other documents rather than a CCV. | A plan will be developed to address this issue for future competitions. |

| Incorporate a mechanism between Stage 1 and Stage 2 to let applicants respond to reviewer comments. For applications moving ahead to Stage 2, the Working Group proposed giving applicants the opportunity to submit a 1-page response to reviewer comments, which could then be used in the Stage 2 deliberations. | This will be explored further for implementation in future competitions. |
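The Working Group's definition of equalized success rates reduces to simple arithmetic: the number of ECI awards needed is the number of ECI applications multiplied by the overall competition success rate. The following is a minimal sketch for illustration only, not CIHR's allocation method; the function name `eci_awards_to_equalize` is hypothetical, and rounding up to a whole grant is an assumption.

```python
def eci_awards_to_equalize(total_apps: int, total_funded: int, eci_apps: int) -> int:
    """Return the smallest number of ECI awards whose success rate is at
    least the overall competition success rate (hypothetical helper).

    Integer arithmetic is used so the result is exact.
    """
    if total_apps <= 0:
        raise ValueError("total_apps must be positive")
    if not 0 <= eci_apps <= total_apps:
        raise ValueError("eci_apps must be between 0 and total_apps")
    if not 0 <= total_funded <= total_apps:
        raise ValueError("total_funded must be between 0 and total_apps")
    # Target: eci_awards / eci_apps >= total_funded / total_apps,
    # i.e. eci_awards >= eci_apps * total_funded / total_apps.
    quotient, remainder = divmod(eci_apps * total_funded, total_apps)
    # Assumption: round up so the ECI rate is never below the overall rate.
    return quotient + (1 if remainder else 0)


# Hypothetical competition: 1,000 applications, 150 funded (15%), 200 from ECIs.
print(eci_awards_to_equalize(1000, 150, 200))
```

With these hypothetical inputs the sketch returns 30 awards, giving ECIs the same 15% success rate as the competition overall. Rounding down instead would leave the ECI success rate slightly below the overall rate whenever the product is not a whole number.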

5. Description of evidence to date (Panel Qs1, 2)

The purpose of this section is to provide a high-level summary of data related to the overall CIHR funding context.

Comparisons of success rates between Open Operating, Foundation, and Project Grant Programs

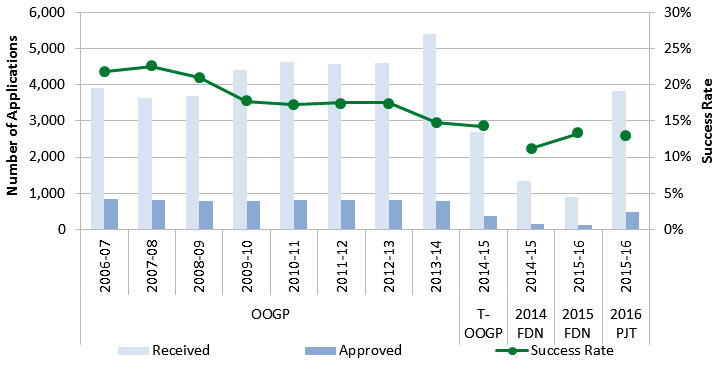

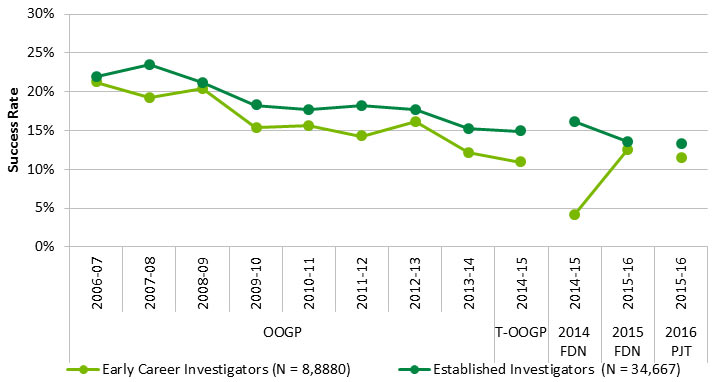

Figure 6 presents the number of applications received and approved, and the resulting success rates, for CIHR's Open Operating Grants Program (OOGP), the Foundation Grant Program and the Project Grant Program. Success rates for these programs have declined over time, and the current success rate for the Investigator-Initiated programs is approximately 13%.

Figure 6. Distribution of application pressure and success rates in the CIHR Investigator-Initiated Grant programs, 2006-07 to 2015-16


| | OOGP 2006-07 | OOGP 2007-08 | OOGP 2008-09 | OOGP 2009-10 | OOGP 2010-11 | OOGP 2011-12 | OOGP 2012-13 | OOGP 2013-14 | T-OOGP 2014-15 | 2014 FDN 2014-15 | 2015 FDN 2015-16 | 2016 PJT 2015-16 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Received (N = 43,547) | 3,894 | 3,625 | 3,680 | 4,416 | 4,636 | 4,578 | 4,586 | 5,389 | 2,682 | 1,343 | 905 | 3,813 |
| Approved (N = 7,563) | 847 | 816 | 772 | 782 | 802 | 801 | 801 | 797 | 383 | 150 | 120 | 492 |
| Success rate | 22% | 23% | 21% | 18% | 17% | 17% | 17% | 15% | 14% | 11% | 13% | 13% |
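The success rate in Figure 6 is the number of applications approved divided by the number received. The short sketch below recomputes each published rate from the table's counts and checks the column sums against the N values in the row labels. The program label attached to each fiscal year follows the column order of the table and is an interpretation of the flattened header; the function name is illustrative.

```python
# Received and approved counts transcribed from Figure 6.
# Program labels follow the table's column order (an interpretation of
# how the program headers map onto the fiscal-year columns).
FIGURE_6 = [
    ("OOGP 2006-07", 3894, 847),
    ("OOGP 2007-08", 3625, 816),
    ("OOGP 2008-09", 3680, 772),
    ("OOGP 2009-10", 4416, 782),
    ("OOGP 2010-11", 4636, 802),
    ("OOGP 2011-12", 4578, 801),
    ("OOGP 2012-13", 4586, 801),
    ("OOGP 2013-14", 5389, 797),
    ("T-OOGP 2014-15", 2682, 383),
    ("2014 FDN (2014-15)", 1343, 150),
    ("2015 FDN (2015-16)", 905, 120),
    ("2016 PJT (2015-16)", 3813, 492),
]


def success_rate_percent(received: int, approved: int) -> int:
    """Success rate = approved / received, rounded to a whole percent."""
    if received <= 0:
        raise ValueError("received must be positive")
    return round(100 * approved / received)


for label, received, approved in FIGURE_6:
    print(f"{label}: {success_rate_percent(received, approved)}%")

total_received = sum(received for _, received, _ in FIGURE_6)
total_approved = sum(approved for _, _, approved in FIGURE_6)
print(f"Totals: {total_received:,} received, {total_approved:,} approved")
```

The recomputed rates match the published success-rate row, and the column sums reproduce the totals of 43,547 applications received and 7,563 approved.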

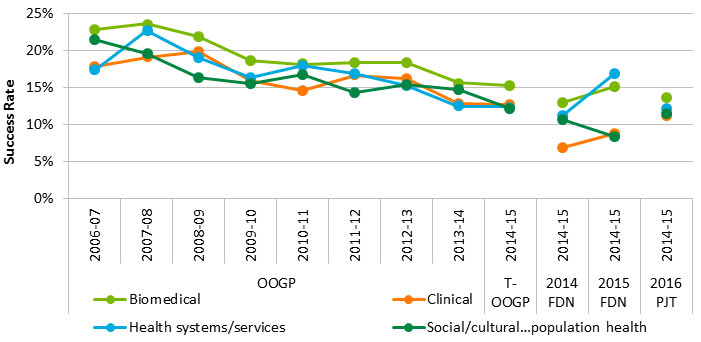

Success rates also vary across several dimensions that are important to monitor:

- CIHR's four pillars of health research (Figure 7)

- Sex of the Nominated Principal Investigator (Figure 8)

- Career stage of the Nominated Principal Investigator (Figure 9)

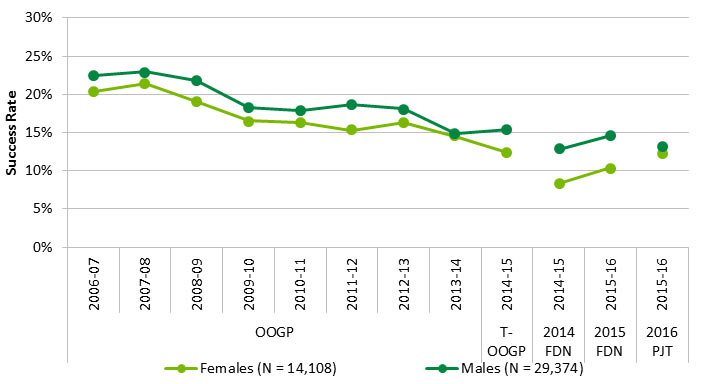

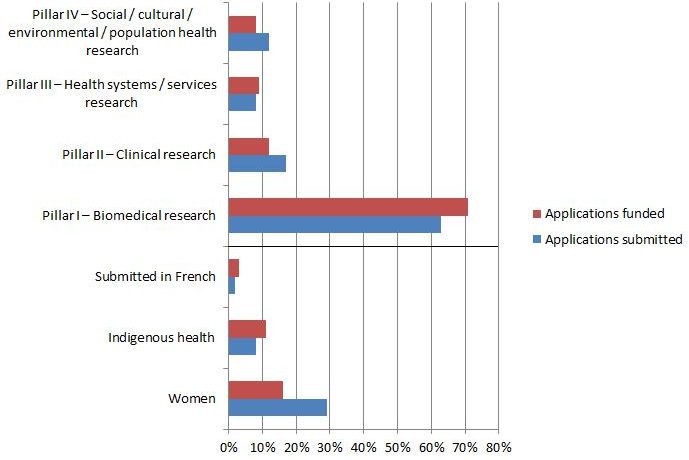

Figure 7 reflects the overall decline in success rates seen in Figure 6, but also shows how success rates vary by pillar. Biomedical research has historically had higher success rates than all other pillars; however, the Foundation Grant Program appears to be shifting this pattern slightly, at least in its first two competitions. Figure 8 shows a similar overall decline in success rates for both sexes, but also reveals sex differences in Foundation Grant Program success rates: female NPIs had success rates of 8% and 10% in the two Foundation Grant competitions, compared with 13% and 15% for male NPIs.

Figure 7. Distribution of success rates of Investigator-Initiated Grant programs by primary pillar, 2006-07 to 2015-16


| Primary pillar | OOGP 2006-07 | OOGP 2007-08 | OOGP 2008-09 | OOGP 2009-10 | OOGP 2010-11 | OOGP 2011-12 | OOGP 2012-13 | OOGP 2013-14 | T-OOGP 2014-15 | 2014 FDN 2014-15 | 2015 FDN 2015-16 | 2016 PJT 2015-16 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Biomedical | 23% | 24% | 22% | 19% | 18% | 18% | 18% | 16% | 15% | 13% | 15% | 14% |
| Clinical | 18% | 19% | 20% | 16% | 15% | 17% | 16% | 13% | 13% | 7% | 9% | 11% |
| Health systems/services | 17% | 23% | 19% | 16% | 18% | 17% | 15% | 13% | 12% | 11% | 17% | 12% |
| Social, cultural, environmental, and population health | 21% | 20% | 16% | 16% | 17% | 14% | 15% | 15% | 12% | 11% | 8% | 12% |
| Not applicable/not specified | 50% | 7% | 13% | 0% | 0% | 9% | 21% | 13% | 11% | 0% | 0% | 0% |

Figure 8. Distribution of success rates of Investigator-Initiated Grant programs by sex of NPI, 2006-07 to 2015-16


| Sex of NPI | OOGP 2006-07 | OOGP 2007-08 | OOGP 2008-09 | OOGP 2009-10 | OOGP 2010-11 | OOGP 2011-12 | OOGP 2012-13 | OOGP 2013-14 | T-OOGP 2014-15 | 2014 FDN 2014-15 | 2015 FDN 2015-16 | 2016 PJT 2015-16 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Females (N = 14,108) | 20% | 21% | 19% | 17% | 16% | 15% | 16% | 15% | 12% | 8% | 10% | 12% |
| Males (N = 29,374) | 22% | 23% | 22% | 18% | 18% | 19% | 18% | 15% | 15% | 13% | 15% | 13% |

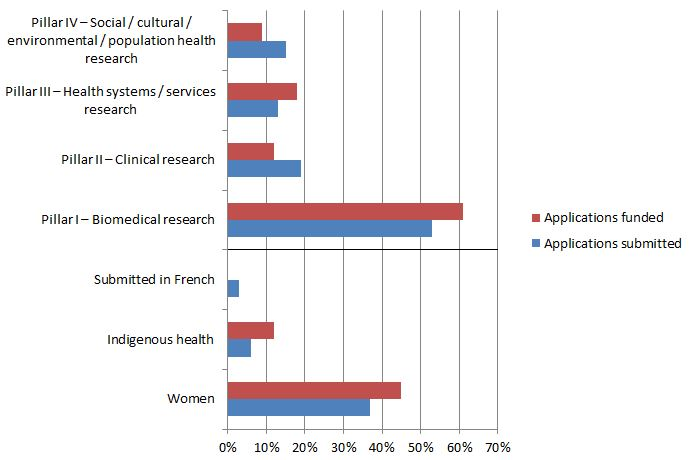

Figure 9 shows a persistent gap in the OOGP between the success rates of new/early career investigators and those of established (mid-career and senior) investigators. In the new programs, both of which include mechanisms to ensure appropriate review of, and protected funding for, new/early career investigators, this gap appears to be closing.

Figure 9. Distribution of success rates of Investigator-Initiated Grant programs by career stage of NPI, 2006-07 to 2015-16


| Career stage of NPI | OOGP 2006-07 | OOGP 2007-08 | OOGP 2008-09 | OOGP 2009-10 | OOGP 2010-11 | OOGP 2011-12 | OOGP 2012-13 | OOGP 2013-14 | T-OOGP 2014-15 | 2014 FDN 2014-15 | 2015 FDN 2015-16 | 2016 PJT 2015-16 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Early Career Investigators (N = 8,880) | 21% | 19% | 20% | 15% | 16% | 14% | 16% | 12% | 11% | 4% | 13% | 12% |
| Established Investigators (N = 34,667) | 22% | 23% | 21% | 18% | 18% | 18% | 18% | 15% | 15% | 16% | 14% | 13% |

Funding Results: 2015 Foundation Grant Competition

The 2015 Foundation Grant Competition will support 120 research programs for a total of $292M over 7 years.

New/Early Career Investigators Cohort – 33 funded

Average grant size: $1,140,364

Median grant size: $1,067,334

Grant duration: 5 years

Mid-career/senior investigators – 87 funded

Average grant size: $3,282,939

Median grant size: $2,838,143

Grant duration: 7 years

The figures below further break down the 2015 Foundation Grant Competition results by applicant cohort: the new/early career investigator cohort (Figure 10) and the mid/senior career investigator cohort (Figure 11). Specifically, these figures compare the proportion of applications funded with the proportion submitted across a range of applicant profile variables. In the Foundation Grant Program, these cohorts are reviewed and ranked separately by design.

Figure 10. Breakdown of new/early career investigator cohort by proportion of applications submitted and funded, 2015 Foundation Grant Competition


| Application breakdown | Applications submitted | Applications funded |
|---|---|---|
| Women | 97 applications submitted (37%) | 15 applications funded (45%) |
| Indigenous health | 17 applications submitted (6%) | 4 applications funded (12%) |
| Submitted in French | 7 applications submitted (3%) | 0 applications funded (0%) |
| Pillar I – Biomedical research | 140 applications submitted (53%) | 20 applications funded (61%) |
| Pillar II – Clinical research | 51 applications submitted (19%) | 4 applications funded (12%) |
| Pillar III – Health systems / services research | 34 applications submitted (13%) | 6 applications funded (18%) |
| Pillar IV – Social / cultural / environmental / population health research | 40 applications submitted (15%) | 3 applications funded (9%) |

Figure 11. Breakdown of mid/senior career investigator cohort by proportion of applications submitted and funded, 2015 Foundation Grant Competition

| Application breakdown | Applications submitted | Applications funded |
|---|---|---|
| Women | 185 applications submitted (29%) | 14 applications funded (16%) |
| Indigenous health | 53 applications submitted (8%) | 10 applications funded (11%) |
| Submitted in French | 7 applications submitted (2%) | 3 applications funded (3%) |
| Pillar I – Biomedical research | 405 applications submitted (63%) | 62 applications funded (71%) |
| Pillar II – Clinical research | 108 applications submitted (17%) | 10 applications funded (12%) |
| Pillar III – Health systems / services research | 51 applications submitted (8%) | 8 applications funded (9%) |
| Pillar IV – Social / cultural / environmental / population health research | 81 applications submitted (12%) | 7 applications funded (8%) |

The 2015 Foundation Grant Competition was conducted through a three-stage competition and review process.

Stage 1

- A total of 432 individual reviewers participated in the peer review process, which was monitored by 30 Virtual Chairs.

- The peer review process resulted in a total of 4,418 individual reviews.

- 14 applications (2%) had 3 reviewers assigned to them

- 104 applications (11%) had 4 reviewers assigned to them

- 792 applications (87%) had 5 reviewers assigned to them

- 265 applications were successful at Stage 1 and were invited to submit a Stage 2 application (260 Stage 2 applications were submitted: 77 from early career investigators and 183 from established investigators).

Stage 2

- A total of 171 individual reviewers participated in the peer review process, which was monitored by 16 Virtual Chairs.

- The peer review process resulted in a total of 1,298 individual reviews.

- Two applications (1%) had 4 reviewers assigned to them

- 258 applications (99%) had 5 reviewers assigned to them

- 160 applications were successful at Stage 2.

- Based on Stage 2 results, CIHR identified the highest-ranked applications to be considered for funding. These applications constituted the "green zone" and did not require further evaluation.
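The Stage 1 and Stage 2 figures are internally consistent: the total number of individual reviews equals the sum, over all applications, of the reviewers assigned to each. The short check below reproduces the reported totals (4,418 and 1,298) and the percentages from the per-application reviewer counts. It also shows that these counts imply 910 applications reviewed at Stage 1 and 260 at Stage 2, the latter matching the number of Stage 2 applications submitted. The helper name `review_load` is hypothetical.

```python
def review_load(apps_by_reviewer_count: dict[int, int]) -> tuple[int, int, dict[int, int]]:
    """Summarize reviewer assignments for one review stage.

    apps_by_reviewer_count maps "number of reviewers assigned" to
    "number of applications with that many reviewers". Returns the number
    of applications, the total number of individual reviews, and the
    rounded percentage of applications in each reviewer-count group.
    """
    applications = sum(apps_by_reviewer_count.values())
    reviews = sum(k * n for k, n in apps_by_reviewer_count.items())
    shares = {
        k: round(100 * n / applications) for k, n in apps_by_reviewer_count.items()
    }
    return applications, reviews, shares


# Counts transcribed from the Stage 1 and Stage 2 lists above.
stage_1 = review_load({3: 14, 4: 104, 5: 792})
stage_2 = review_load({4: 2, 5: 258})
print("Stage 1:", stage_1)
print("Stage 2:", stage_2)
```

On these counts, each application received on average about 4.9 reviews at Stage 1 (4,418 / 910) and 5.0 at Stage 2 (1,298 / 260).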

Final assessment stage

- The final assessment stage committee was composed of the 16 Virtual Chairs from Stage 2, a Chair and a Scientific Officer.

- 96 applications with high consolidated rankings and significant variation in those rankings were discussed.

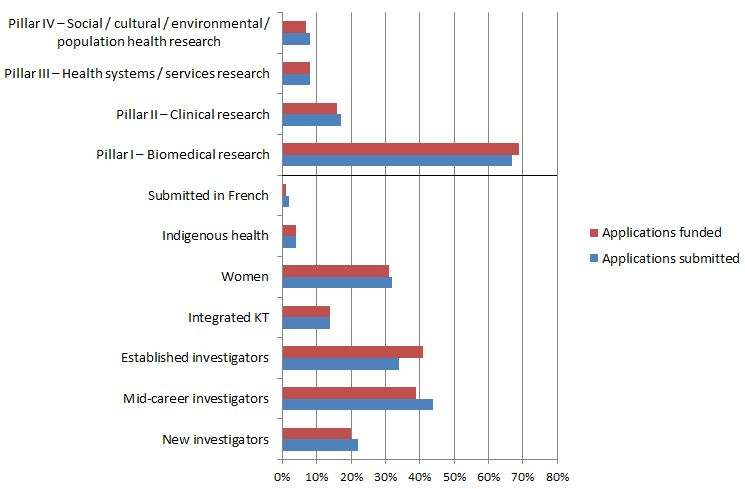

Funding Results: Spring 2016 Project Grant Competition

The 2016 Project Grant competition will support 491 research grants, plus an additional 127 bridge grants, for a total investment of $375,579,347 over five years. The 491 grants were awarded to 468 individual nominated principal investigators (NPIs); 23 NPIs were awarded two grants. Of the 491 grants, 98 were awarded to new/early career investigators. The average grant is approximately $791,000 over four years, and the median grant is approximately $703,000 over five years.

Figure 12 presents the proportion of applications funded relative to the proportion submitted on a range of applicant profile variables.

Figure 12. Breakdown of proportion of applications submitted and funded, Spring 2016 Project Grant Competition


| Application breakdown | Applications submitted | Applications funded |
|---|---|---|
| New investigators | 856 applications submitted (22%) | 98 applications funded (20%) |
| Mid-career investigators | 1,662 applications submitted (44%) | 189 applications funded (39%) |
| Established investigators | 1,283 applications submitted (34%) | 204 applications funded (41%) |
| Integrated KT | 539 applications submitted (14%) | 65 applications funded (14%) |
| Women | 1,228 applications submitted (32%) | 150 applications funded (31%) |
| Indigenous health | 147 applications submitted (4%) | 17 applications funded (4%) |
| Submitted in French | 83 applications submitted (2%) | 6 applications funded (1%) |
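Comparing a group's share of funded applications with its share of submitted applications is equivalent to comparing the group's success rate with the overall success rate, because (funded_g / F) / (submitted_g / S) = (funded_g / submitted_g) / (F / S), where F and S are the competition totals. The minimal sketch below applies this to the three career-stage rows of Figure 12. It assumes the sum of those rows (3,801 submitted, 491 funded) as the denominators; the published percentages may use a different total, so computed shares can differ from them by about a point. The function name is illustrative.

```python
from fractions import Fraction

# Career-stage rows transcribed from Figure 12: group -> (submitted, funded).
CAREER_STAGE = {
    "New investigators": (856, 98),
    "Mid-career investigators": (1662, 189),
    "Established investigators": (1283, 204),
}


def representation_ratio(group: tuple[int, int], totals: tuple[int, int]) -> Fraction:
    """Funded share divided by submitted share for one group.

    A value above 1 means the group makes up a larger share of funded
    applications than of submitted ones; algebraically this equals the
    group's success rate divided by the overall success rate.
    """
    submitted, funded = group
    total_submitted, total_funded = totals
    return Fraction(funded, total_funded) / Fraction(submitted, total_submitted)


# Assumption: totals are the sums of the career-stage rows only.
totals = (
    sum(submitted for submitted, _ in CAREER_STAGE.values()),
    sum(funded for _, funded in CAREER_STAGE.values()),
)
for name, counts in CAREER_STAGE.items():
    ratio = representation_ratio(counts, totals)
    success = Fraction(counts[1], counts[0])
    print(f"{name}: ratio {float(ratio):.2f}, success rate {float(success):.1%}")
```

Exact fractions are used so that the identity between the two comparisons holds without floating-point error.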